Generally, when someone creates a spreadsheet, they have an idea of how it should work that goes beyond the formulas they enter. They may know what range a cell's value should stay within, or what kind of relationships exist between cells. This is all extra information that a user currently has no way of telling the system. Assertions are our way of letting a user communicate these additional constraints. An assertion is a type of guard that a user can place on a spreadsheet cell. In the current implementation, a user can enter a specific range on a cell, say 0 to 10, which tells the system that values in this cell must not lie outside that range. Dr. Burnett and her group of students are working on implementing other forms of assertions so that, for example, a user can express a relationship constraint between cells.
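To make the idea of a range assertion concrete, here is a minimal sketch in Python. It is not how Forms/3 implements assertions; the names `RangeAssertion`, `Cell`, and `violates_assertion` are hypothetical and chosen only for illustration. It shows a cell carrying an optional 0 to 10 guard and a check for whether the current value breaks it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RangeAssertion:
    """Hypothetical guard: the cell's value must lie within [low, high]."""
    low: float
    high: float

    def holds_for(self, value: float) -> bool:
        return self.low <= value <= self.high


@dataclass
class Cell:
    """Hypothetical spreadsheet cell with an optional range assertion."""
    name: str
    value: float
    assertion: Optional[RangeAssertion] = None

    def violates_assertion(self) -> bool:
        # A cell without an assertion can never be in violation.
        return self.assertion is not None and not self.assertion.holds_for(self.value)


score = Cell("score", 7, RangeAssertion(0, 10))
print(score.violates_assertion())  # False: 7 is inside 0 to 10

score.value = 12
print(score.violates_assertion())  # True: 12 is outside 0 to 10
```

A relationship constraint between cells, the kind of assertion still being worked on, would need a guard that can see more than one cell, which this sketch does not attempt.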

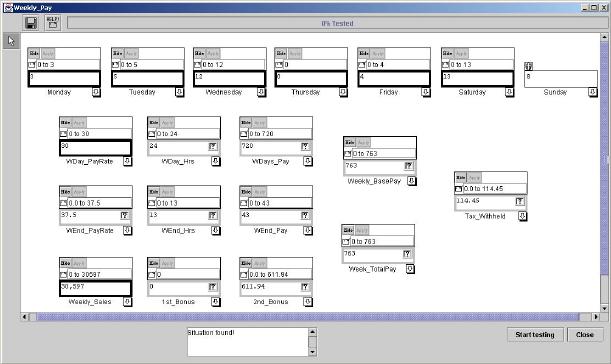

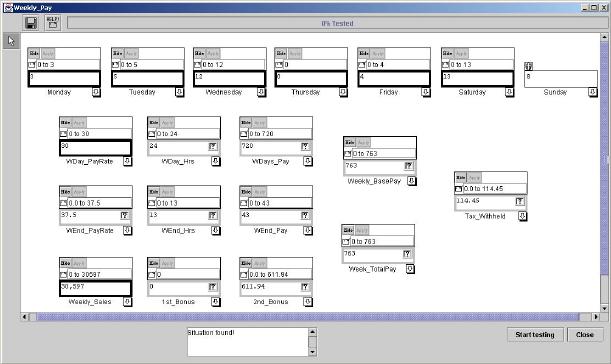

Here is a black and white picture of the interface of Forms/3 (the boxes on top of the cells are Help Me Test assertions; you can also have user and system assertions):

Before I came here for my research experience, Dr. Burnett and Dr. Cook, together with their students, conducted a study to find out whether assertions would prove helpful in debugging spreadsheets. That study did not look at whether users would actually use assertions when testing their spreadsheets, or whether their own assertions would be helpful in debugging. We thought this was an interesting question that should be investigated. So, when I arrived, it was my job to be the primary designer and administrator of our new experiment into whether end users would use assertions, or guards, in a spreadsheet and, if so, whether those assertions were useful in debugging.

I'm currently writing up a tech report that details the experiment design, participants, tasks and materials, and the tutorial. Because the two studies are closely related, we are going to combine the report of our experiment with the previous study of assertions into one large tech report.

I don't expect the final copy of the tech report to be finished for a while, but I do want to share with you some of the major sections of the report that I've written.

Experiment 2

Experiment 1 established that assertions are useful in debugging. To build on these findings, we wanted to examine whether end users would enter assertions on their own and whether their own assertions would help them find and correct errors.

Design and Procedures

Since Experiment 1 established that assertions are helpful for participants in finding errors in spreadsheets, we wanted to learn whether participants would use assertions without any previous instruction on what they were or how to enter or edit them. As in Experiment 1, we conducted a controlled study in six smaller sessions using a Windows computer lab. Subjects from three sessions worked in the WYSIWYT plus assertions environment previously described in section (**find out which section Experiment 1's environment is described), and the subjects from the other three sessions used the same environment but with the addition of Help Me Test (HMT) assertions.

For each group, the instructor led a 25-minute tutorial on the features of Forms/3, introducing only basic language and GUI features, while each subject actively participated by following along and working with several example spreadsheets. No training on testing theory or test adequacy criteria was given to either group. To control for the amount of exposure to the environment, both groups of subjects were given identical amounts of training time.

We set up this experiment in a similar manner to Experiment 1 because we hoped to be able to compare some of our findings. The design of our experiment was essentially the same. We asked our subjects to test two spreadsheets to make sure they worked according to their descriptions. If participants found any errors, they were told to fix them. We used the same spreadsheets as Experiment 1. To account for user exploration and learning, we increased the time allotted for the experimental tasks.

We collected data during the experiment from post-problem questionnaires and from electronic transcripts that captured all of the participants' actions and activities within the Forms/3 environment. At the end of the session, both groups answered questions about their understanding and use of assertions. The HMT assertion subjects completed a few additional questions about their understanding and use of HMT assertions.

Before the experiment, we conducted a cognitive walkthrough of the tutorial and the tasks we wanted our subjects to perform. The goal of the cognitive walkthrough was to make sure the tutorial gave our subjects the skills they needed to complete the experimental tasks. We stepped through a task to figure out what a participant's goal might be, and then determined what actions a participant would take at each step to reach that goal. We ran into a few problems with our tool tips for the features of Forms/3. In most cases they weren't descriptive enough and didn't tell participants what they could do with a feature. We decided our tool tips needed to be good enough to teach someone what the features are and how to use them. We hoped that changing the tool tips would make it easier for participants to learn through exploration.

Pilots

To iron out the creases in our experiment and find an appropriate cutoff time for the experimental tasks, we ran a total of four pilot subjects.

One of our pilot subjects was a think-aloud subject. During a think-aloud study, subjects are asked to talk or think out loud while they do each task. Think-aloud studies are usually conducted to understand the reasons for behaviors. We gave our pilot subject unlimited time to complete her tasks. We wanted a think-aloud pilot subject because we wanted to know what a typical user would be thinking while interacting with our system.

The other three pilot subjects were not think-aloud subjects. They were given as much time as they needed to finish a task. We wanted to get timing data from our pilots to determine what a suitable cutoff would be for the experimental tasks. We believed that learning about assertions would take time, and we wanted to figure out just how much more time it would take.

Our pilot subjects also gave us additional insight into our experiment. As experienced users of our system, we can find it difficult to remember what it was like to first learn about it. For example, one of our pilots ran across the assertion number line and asked "this does what for me?", at which point we realized that our explanation of this tool was not good enough for people to understand what it was. We had not expected, or really thought about, someone opening the number line instead of writing an assertion in the text box. Our pilot subjects made us reexamine our tool tips to see whether they were giving enough information for the user to learn what assertions are and how to use them.

Tutorial

Recall that in designing our experiment we wanted to keep everything as close as possible to Experiment 1 so we could compare our results. At one point, we thought we might even be able to use the tutorial from Experiment 1, but doing so would have caused some problems. First, Experiment 1 did not use the Help Me Test feature at all, so we would have had to add it to the tutorial. But 35 minutes was already too long for the tutorial, and adding anything else would have made it even longer. The longer a tutorial gets, the lower a participant's attention span is going to be. The lower the attention span, the lower the retention rate and the less a participant takes away from the tutorial. This can confound the experiment, because someone who does not know what he or she is doing is not as effective a measure as someone who took away everything from the tutorial. Second, Experiment 1's tutorial specifically taught assertions. It covered what purpose assertions served, how to edit them, what assertion conflicts and value violations were, and what steps a participant could take to get rid of them, which could lead to finding an error in the spreadsheet. But teaching assertions in the tutorial would have worked against the information we were trying to collect. We were worried that if we taught participants what assertions were and how to use them, it would be clear to participants that we wanted them to use assertions.

To solve the above problems, we made two important changes to our tutorial.

First, we left out any information or discussion about assertions in our tutorial so that we could be confident that we were not giving our participants too much information or leading them in any way. Our tutorial covered all the same topics as the tutorial for Experiment 1, with the addition of HMT but minus assertions.

Our second change was to alter our tutorial so that its strategy focused more on teaching through exploration. The strategy for Experiment 1 was to present detailed information to participants about Forms/3; our goal was to guide our participants on their journey through our system by encouraging exploration and the discovery of explanations. We hoped that if we encouraged exploration of our system, our participants might stumble into assertions and learn how to use them on their own (in fact, many of the participants did indeed use exploration to discover assertions and learn how to use them). We encouraged exploration-based learning and behavior by teaching subjects to hold their mouse over features in the tutorial and by emphasizing that, if they needed help and could not remember what something was, all they had to do was hold their mouse over it and wait for a helpful pop-up message to appear. Participants were also given three exploration periods during the tutorial in which they were encouraged to learn more.

For the tutorial material that was explicitly presented by the instructor, we used the following techniques. We followed a demand-driven strategy in providing information: we gave our participants information on a feature only when we thought they would be curious about it, and not before. There were no separate sections for the discussion of each feature in the tutorial; we integrated the teaching of each feature into a whole. If participants were curious about something in our system, they could freely act on that curiosity during one of our exploration periods.

Following from our new tutorial strategy, our tutorial was shortened to 25 minutes. Even more important, with respect to assertions, our new strategy eliminated some of the differences between our environment and a real one by removing the element of the teacher. The participants' only way to learn about assertions was to dig them out on their own, just as they would have to do in a real environment.

We also realized that our new strategy put extra pressure on our tool tips to perform, since they were the only way for participants to learn what the features of Forms/3 could do for them. We did not give our participants a reference sheet as in Experiment 1. Instead, we taught our subjects to use the tool tips to learn about features, so that if they could not remember what something was, they could easily go back to the tool tip for a reminder. In fact, the tutorial strategy played a critical role in sharpening our vision of the importance of tool tips, not only to the tutorial but to the overall approach to assertions. Our vision shifted from tool tips as ad hoc helpers to tool tips as assessors to an organized integrated explanation system.
