Tracking System for a Street-Crossing Robot

Marbella Duran, Universidad Metropolitana, San Juan, Puerto Rico.

Research Mentor: Professor Holly Yanco, Department of Computer Science, UMass Lowell.

Abstract:

The development of robots in modern times has been limited to safe and supervised areas. As technology has advanced, new goals have been set for robot behavior. Previous investigations and models of street-crossing robots have demonstrated that a robot needs to track multiple vehicles before it decides to cross a street. This behavior has been accomplished by preparing an algorithm after calculating and analyzing the diverse obstacles that could put the robot in danger.

In this initial work we focused on testing this algorithm on one-way or two-way streets with no more than one lane in either direction, because it is more dangerous and complicated for the robot to attempt to cross a street with multiple lanes.

This investigation is based on the development of an algorithm that allows the robot to track vehicles from a safe place when attempting a street crossing. The algorithm is based on the calculation of the distance, speed, and motion of each vehicle approaching or driving away from the robot.
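The report does not give the formulas behind this calculation, so the following is only a minimal sketch of how distance, speed, and direction of motion could be combined into a crossing decision. The names `RangeSample`, `closing_speed`, `time_to_arrival`, and `safe_to_cross`, as well as the 2-second safety margin, are assumptions made for illustration and are not taken from the project code.

```python
from dataclasses import dataclass


@dataclass
class RangeSample:
    """One distance reading for a tracked vehicle (hypothetical structure)."""
    time_s: float      # timestamp of the reading, in seconds
    distance_m: float  # distance from the robot to the vehicle, in meters


def closing_speed(prev: RangeSample, curr: RangeSample) -> float:
    """Speed toward the robot in m/s; positive means the vehicle is approaching."""
    dt = curr.time_s - prev.time_s
    if dt <= 0:
        raise ValueError("samples must be in increasing time order")
    return (prev.distance_m - curr.distance_m) / dt


def time_to_arrival(prev: RangeSample, curr: RangeSample):
    """Seconds until the vehicle reaches the robot, or None if it is not approaching."""
    speed = closing_speed(prev, curr)
    if speed <= 0:
        return None  # stationary or driving away
    return curr.distance_m / speed


def safe_to_cross(tracks, crossing_time_s: float, margin_s: float = 2.0) -> bool:
    """True only if every approaching vehicle arrives after the crossing is finished.

    `tracks` is a list of (previous sample, current sample) pairs, one per vehicle.
    `margin_s` is an assumed safety margin, not a value from the report.
    """
    for prev, curr in tracks:
        eta = time_to_arrival(prev, curr)
        if eta is not None and eta < crossing_time_s + margin_s:
            return False
    return True
```

For example, a vehicle that moves from 50 m to 40 m in one second is approaching at 10 m/s and would arrive in 4 s, so a crossing that takes 5 s would be rejected, while a vehicle that is driving away never blocks the crossing.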

This calculation assumes that the robot is built with components that have been chosen and tested to support an efficient algorithm, so that the robot performs as well and as safely as possible.

From the beginning of robotics, robots have been in constant evolution. This evolution in the construction of robots is a direct consequence of the need to adapt to new and more complex tasks. Research and new work with robots is becoming more interesting and more necessary in technological and social activities. This investigation attempts to develop a better tracking system that allows a robot to detect vehicles, obstacles, pedestrians, motion, etc. when it tries to cross a street.

According to past investigations, new robots are being built with more efficient and complete equipment, which makes the task of crossing safely more feasible. The efficiency of a tracking system also depends on other factors, including the luminosity of the environment, the speed of vehicles, shadows, noise, etc. These could pose development problems. For our research we used a vision processing tool named Phission.

Methodology:

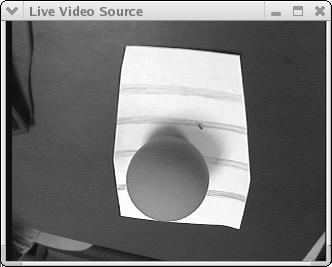

In order to improve a system, it is important to analyze its frequent problems, including the detection of objects in motion and the development of software based on the robot's tracking system. A robot detects an object and its image by means of cameras and sensors; it determines the shape, colors, size, and motion of the object by processing the data according to the program executing an instruction. Work is now being done on the tracking system based on the detected image, analyzing color intensity and shadows, both of which are obstacles for a crossing tracking system running in real time.

To capture and process images we can use the RGB composition, which is based on three different images of the same object. The difference between them is that the light is filtered through a device before the image is detected. There are three filters, one for each of the three basic colors: red, green, and blue. The images of the same object through the different filters are not the same. RGB assigns each image pixel a value between 0 and 255 for each of the three basic colors, representing the tone of that color. RGB is not well suited to detection in a tracking system: it may work well for the human eye, but not for a camera. One problem with detecting images in RGB is that when there is too much light, it is absorbed by the object, which distorts the color and changes the pixel values of the detected image. It also causes shadows and thus an array of complications in the tracking system.

Histograms are usually better to use in a tracking system, as they describe low-level properties of images, such as changes in the light and in the viewing angle, among other things. Because the image may be affected by these changes, this system uses HSV (Hue, Saturation, Value), which is a more efficient composition and a way of keeping the properties of the color in the image independent of one another.
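The benefit of HSV can be illustrated with a small sketch that uses Python's standard `colorsys` module rather than Phission. The two sample pixels are made-up values that stand for the same surface in direct light and in shadow; `rgb8_to_hsv` is a helper name introduced here for illustration.

```python
import colorsys


def rgb8_to_hsv(r: int, g: int, b: int):
    """Convert 8-bit RGB (0-255 per channel) to HSV with each component in [0, 1]."""
    return colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)


# Hypothetical pixels: the same orange surface, fully lit and at half brightness.
lit = (200, 100, 40)
shaded = (100, 50, 20)

h_lit, s_lit, v_lit = rgb8_to_hsv(*lit)
h_shaded, s_shaded, v_shaded = rgb8_to_hsv(*shaded)

# All three RGB channels differ between the two pixels, but in HSV only the
# value (brightness) component changes; hue and saturation stay the same.
print("hue:       ", round(h_lit, 4), round(h_shaded, 4))
print("saturation:", round(s_lit, 4), round(s_shaded, 4))
print("value:     ", round(v_lit, 4), round(v_shaded, 4))
```

Because a uniform change in brightness affects only the value component, a color test based on hue and saturation is less sensitive to this kind of lighting change than a test on raw RGB values. Real shadows also shift color somewhat, so this is an idealized case.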

Part of the work involved the Phission code. Phission is a toolkit used to create and process vision subsystems in software. It is written in C and C++ and is portable, efficient, and fast. Phission has three main active components: 1) a Capture class, for capturing images; 2) a Pipeline class, for processing images; and 3) a Display class, for displaying images.

The image is recorded from the camera on the robot, and the characteristics of each object, which are based on color, are processed by code written with Phission. Images are filtered through this code to learn the value of each color in each pixel. The RGB and HSV compositions are used for this. One can also test how this code works by applying different values and colors to the code and filtering them through the camera. The Phission code is built from a variety of subroutines, equations, and instructions that process the data of the captured image: converting color values, filtering images for specific colors depending on the values given, finding values in the palette, and capturing and checking the input values in the image formats. Capturing the average value and setting it in all the pixels depends on the width, height, and depth of the image.
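As a rough illustration of these two operations (averaging over width, height, and depth, and filtering for a specific color), the sketch below works on a flat, row-major pixel buffer. It is written in plain Python and does not use or reproduce the Phission API; `channel_averages`, `color_mask`, and the `tolerance` parameter are hypothetical names chosen for this example.

```python
def channel_averages(data, width, height, depth):
    """Average value of each channel in a flat row-major buffer.

    `depth` is the number of channels per pixel (3 for RGB).
    """
    if len(data) != width * height * depth:
        raise ValueError("buffer size does not match width * height * depth")
    totals = [0] * depth
    for i, value in enumerate(data):
        totals[i % depth] += value
    pixel_count = width * height
    return [total / pixel_count for total in totals]


def color_mask(data, width, height, depth, target, tolerance):
    """Return 1 for each pixel whose channels are all within `tolerance` of `target`."""
    mask = []
    for p in range(width * height):
        pixel = data[p * depth:(p + 1) * depth]
        matches = all(abs(c - t) <= tolerance for c, t in zip(pixel, target))
        mask.append(1 if matches else 0)
    return mask


# A made-up 2 x 2 RGB image: two reddish pixels, one blue, one green.
image = [255, 0, 0,   250, 10, 5,
         0, 0, 255,   0, 255, 0]

print(channel_averages(image, 2, 2, 3))
print(color_mask(image, 2, 2, 3, target=(255, 0, 0), tolerance=20))
```

The same mask could be computed on HSV values instead of RGB values by converting each pixel first.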

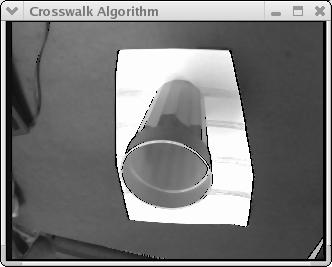

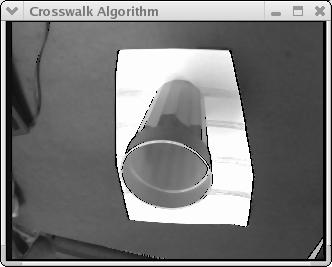

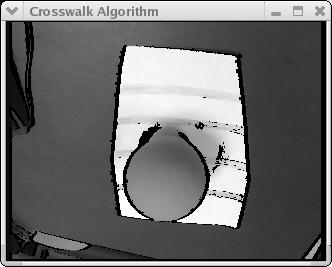

Another function of the Phission code used in this tracking system algorithm is a transformer that converts color images into grayscale images.
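The report does not say how Phission computes the gray value. A common choice, assumed here, is a weighted sum of the three channels using the ITU-R BT.601 luma weights; the function names `to_gray` and `image_to_gray` are illustrative only.

```python
def to_gray(pixel):
    """Convert one 8-bit (r, g, b) pixel to an 8-bit gray level.

    Uses the BT.601 luma weights, which is an assumption made for this sketch.
    """
    r, g, b = pixel
    return min(255, int(round(0.299 * r + 0.587 * g + 0.114 * b)))


def image_to_gray(rgb_rows):
    """Convert a list of rows of (r, g, b) pixels to rows of gray levels."""
    return [[to_gray(pixel) for pixel in row] for row in rgb_rows]


# A made-up 1 x 3 image: black, white, and pure red.
print(image_to_gray([[(0, 0, 0), (255, 255, 255), (255, 0, 0)]]))
```

Working on a single gray channel simplifies the later edge detection, since each pixel is then described by one intensity value.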

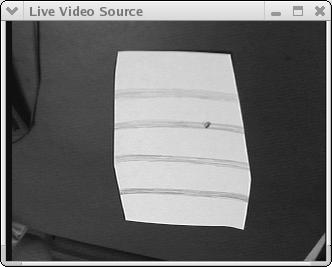

The array of pixels is searched for lines, which are defined by the values of a number of consecutive pixels. The difference between each pixel and its neighbors is computed, which intensifies the lines of pixels that extend along the edges of the crosswalk. As a result, the robot can find its path to the crosswalk and move between the two lines.
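The neighbor-difference idea can be sketched as follows. This is a simplified stand-in written in plain Python, not the Phission filter itself: it compares each gray pixel with its right and lower neighbors and marks it as an edge when the larger difference exceeds a threshold. The function name `edge_map` and the sample values are assumptions.

```python
def edge_map(gray, threshold):
    """Mark pixels whose difference to the right or lower neighbor exceeds `threshold`.

    `gray` is a list of rows of gray levels (0-255). Edge pixels are set to 255
    in the result and all other pixels to 0.
    """
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            dx = abs(gray[y][x] - gray[y][x + 1]) if x + 1 < width else 0
            dy = abs(gray[y][x] - gray[y + 1][x]) if y + 1 < height else 0
            if max(dx, dy) > threshold:
                edges[y][x] = 255
    return edges


# Made-up scan lines: dark road (50), a bright crosswalk stripe (200),
# road again (50), and a faint brightness change such as a shadow border (80).
row = [50, 50, 200, 200, 50, 80]
gray = [row[:] for _ in range(3)]

print(edge_map(gray, threshold=40)[0])  # only the stripe borders are marked
print(edge_map(gray, threshold=10)[0])  # the faint shadow border is marked too
```

In this toy example a low threshold also marks the weak shadow border, while a higher threshold keeps only the strong transitions at the sides of the stripe.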

SICK sensors are used mainly to measure distance. The sensors reliably sort, count, inspect, measure, recognize, and verify the position, size, and overall shape of objects. Contrast sensors recognize differences in material brightness and color. Luminescence sensors recognize fluorescent markings, labels, threads, lubricants, and adhesives. Color sensors identify and differentiate objects by color.

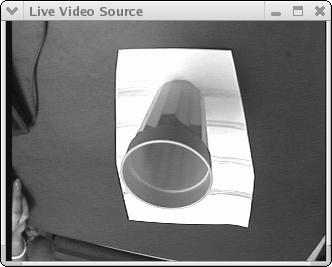

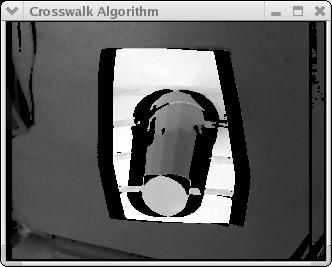

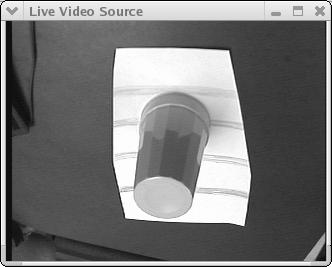

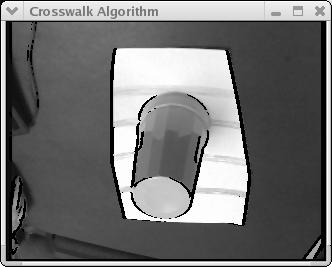

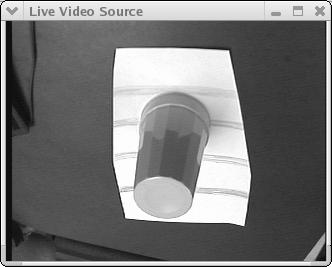

Example of an image with a threshold = 40 and a color value = 1

Example of an image with a threshold = 0

Example of an image with a threshold = 15 and a color value = 3

Example of an image with a threshold = 40 and a color value = 5

Results:

The crossing system is working, except for some problems that need to be addressed before the system is tested in the real world. Some problems with shadows can be solved. Mori described a “sign pattern” that is very useful for detecting vehicles while ignoring shadows, and there are systems that can remove shadows. When I test with different values for the edge, these values are registered in the path of the robot, and not just along the edge lines of the crosswalk. Other tests can be conducted using the phImageGREY8 format, initializing the image to 0 when the threshold is greater than the image. When the camera captures the image and the edge is intensified, very little of the rest of the image is shown and the program gives the error “input image format unsupported by filter.” The values for the distances between pixels at the edge of the crosswalk can be created using a formula that diminishes the distance or increases the depth values of the pixels that interfere with the crosswalk path, without affecting the resolution and focus of the image captured by the robot's camera. Illumination is very important when capturing images. The intensity value of a pixel can change with the illumination of the environment because of noise and shadows. This can cause the pixels on the edge of the crosswalk to be confused with the other pixels in the image.

The Blur filter softens an image by averaging the pixels next to the hard edges of defined lines and shaded areas in an image. The Median, Blur, and Smooth filters remove noise and detail by replacing each pixel with a value computed from the adjacent pixels in the image: an average for Blur and Smooth, and the median for Median.
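The difference between an averaging filter and a median filter can be seen on a single noisy pixel. The sketch below is plain Python over a 3 x 3 neighborhood and is not the Phission implementation; `filter3x3` is a hypothetical helper, and border pixels are simply copied unchanged.

```python
from statistics import mean, median


def filter3x3(gray, reducer):
    """Replace each interior pixel with reducer() of its 3 x 3 neighborhood.

    `gray` is a list of rows of gray levels; border pixels are left unchanged.
    """
    height, width = len(gray), len(gray[0])
    out = [row[:] for row in gray]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            window = [gray[y + dy][x + dx]
                      for dy in (-1, 0, 1)
                      for dx in (-1, 0, 1)]
            out[y][x] = int(reducer(window))
    return out


# A made-up uniform patch with one bright noise pixel in the center.
noisy = [[10, 10, 10],
         [10, 255, 10],
         [10, 10, 10]]

print(filter3x3(noisy, mean)[1][1])    # averaging spreads the outlier: 37
print(filter3x3(noisy, median)[1][1])  # the median discards it: 10
```

Averaging reduces the outlier but still lets it raise the result, whereas the median ignores a single extreme value, which is why median filters are often preferred for isolated noise pixels.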

Increasing the threshold in the algorithm will preserve more of the low-contrast edges. When the threshold is very low (for example, 10), the captured image has a very marked pixel intensity both at the edges of the crosswalk and along the crossing trajectory, and the pixels in the shadow are intensified too. When the threshold increases, the edge in the image is more intense than the path for crossing. Changing the color value in the code to a value greater than 3 increases the thickness of the edge in the image, while a very high threshold can defocus the image and cause loss of detail and of pixel intensity at the edges. A balance between the threshold and color values is needed: with a threshold = 40 and a color = 3 we obtained a better and clearer intensity of pixels at the edges of the image.

Example of image with a threshold = 40 and a color

Acknowledgments:

All the people who gave support in this Summer Project

People from DMP.

Mentor Holly Yanco.

Dr. Juan Arratia.

Dr. Gladys Bonilla.

And the lab people: Mike, Brenden, Bobby, Aaron and Rachel for their patience and constant help.
