Week 1

This being my second summer at the Moses Computational Biological Lab at the University of New Mexico, I came in knowing the ins and outs of the lab. Normally we would arrive the Monday of the starting week, but I decided to arrive the Friday before so I could settle into Albuquerque over the weekend. I had stayed in touch with some friends from last summer, and when I let them know that I was coming back to UNM, they offered me a place to stay.

My first weekend here consisted of buying all the necessities I would need over the summer, so I could start on Monday with a clear mind. I was also fairly familiar with the UNM campus and really enjoyed the duck pond, so I spent time there on both weekends and took some long naps to clear my mind.

When I came in Monday morning I met up with Dr. Matthew Fricke, who is now the technical lead of the lab, and I helped set up the work area for myself and for the other interns who would be DREU students for the summer. We were the first ones there, and throughout the day I saw familiar faces; it was great to be welcomed back. Even though some of the people working in the lab now weren’t working there last summer, I had actually met most of them last summer at the Robotics Science and Systems (RSS) conference at the University of Michigan in Ann Arbor.

I was put into a three-person team with Antonio Griego, a UNM student in the lab who specializes in the Swarmathon GUI and Gazebo simulations, and Valarie Sheffey, a recent UNM graduate who was new to robotics and to this lab.

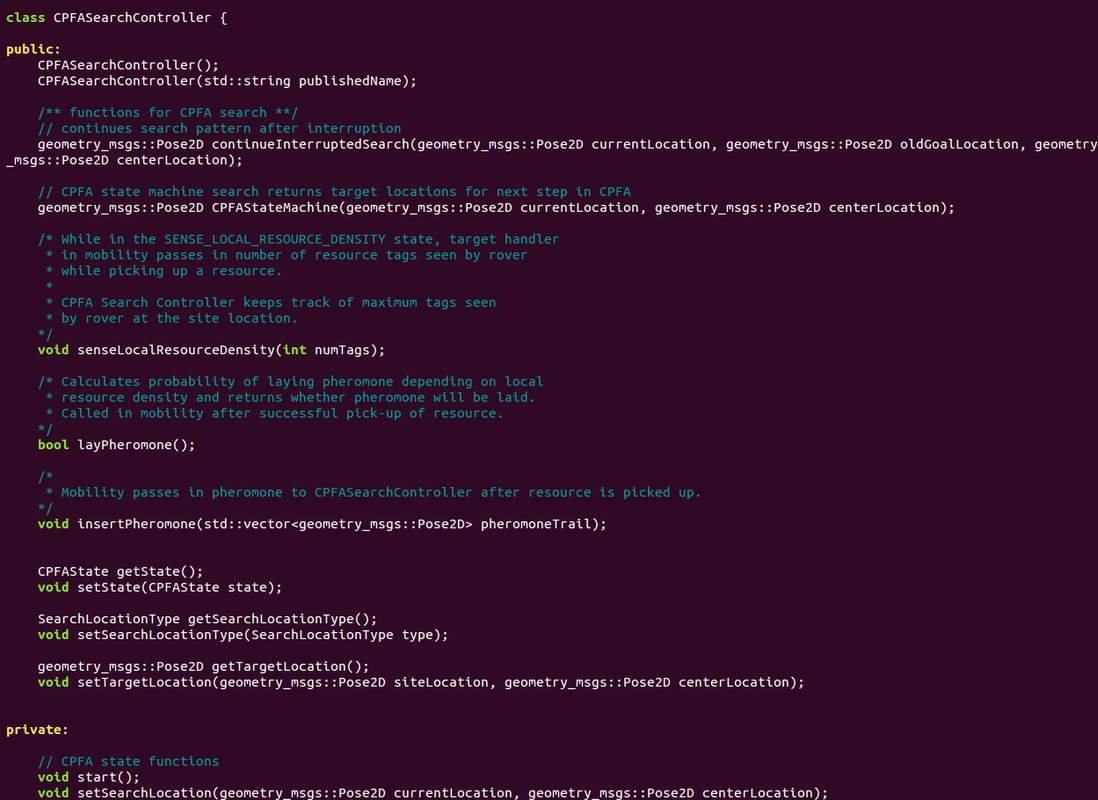

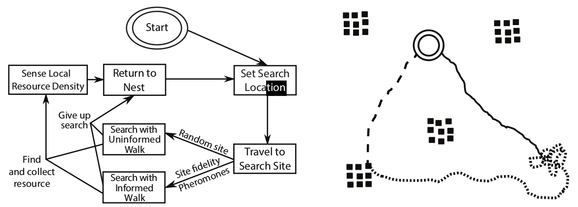

We were tasked with implementing a central-place foraging algorithm (CPFA), which mimics the foraging behaviors of seed-harvester ants, on the Swarmie robots in the lab. Our first job was to read the paper published by Dr. Joshua Hecker and Dr. Melanie Moses to fully understand the algorithm. Having done that, we had a better understanding of the CPFA. The algorithm had previously been implemented in the ARGoS (Autonomous Robots Go Swarming) simulator. That code base is in C++, so many of the particulars of the algorithm were already implemented; we first had to convert that logic to work with the Gazebo simulator using the Robot Operating System (ROS) framework, whose code base is also in C++.

The complexity for each robot is much higher in ROS. ARGoS offers much higher-level control of the swarm, so we didn’t have to worry much about the specific communication for each robot, whereas with ROS we have to worry about all the small particulars. I’ve been working with ROS and the Swarmies since January of last year, so I had no issue starting to implement my portion of the algorithm. I also helped out the other DREU students in the lab where I could.

Week 2

A visual representation of the CPFA algorithm

As we proceeded with programming the CPFA into ROS/Gazebo, I realized that questions kept popping up in my mind that depended on feedback from my teammates. As a result it was difficult to progress at a consistent speed. After going back and forth clearing up certain portions of the algorithm with Antonio and Valarie, we settled on a pair programming approach in which one person was in charge of writing the code while the other teammates sat alongside to discuss how it was implemented.

This approach led to a quicker development time, because the most time-consuming aspect of coding is not the actual coding itself, but the discussion of ideas and the clearing up of miscommunication. We were able to get instant feedback with little to no misunderstanding, and we all took turns programming.

By the end of the week we had a fully implemented CPFA that was working fairly well with only one rover, but we still needed to debug a few problems that were runtime errors rather than logic errors in the CPFA itself. We did a few small tests with two rovers that showed promising results, but not everything went as expected. We had expected to take multiple weeks to get to this point, so the fact that we were here, despite the bugs, means that we had exceeded our own expectations. I don’t believe extending the code to multiple rovers will be much more difficult. Most of the time will more than likely be spent on finding edge cases to debug.

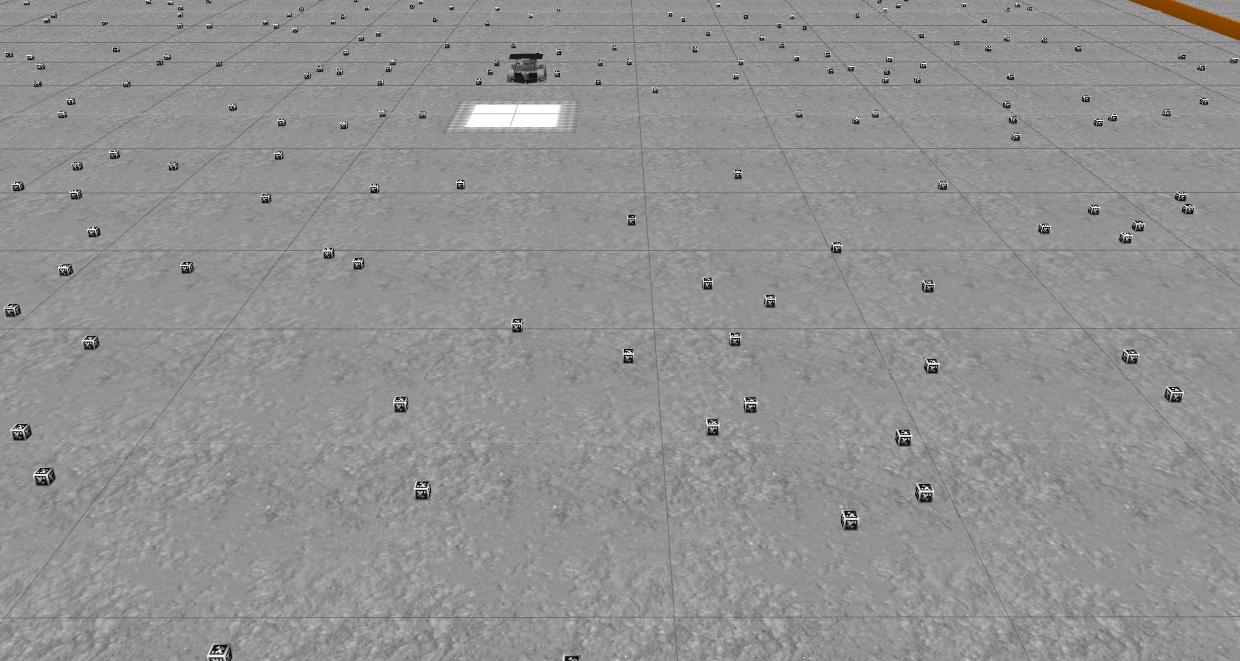

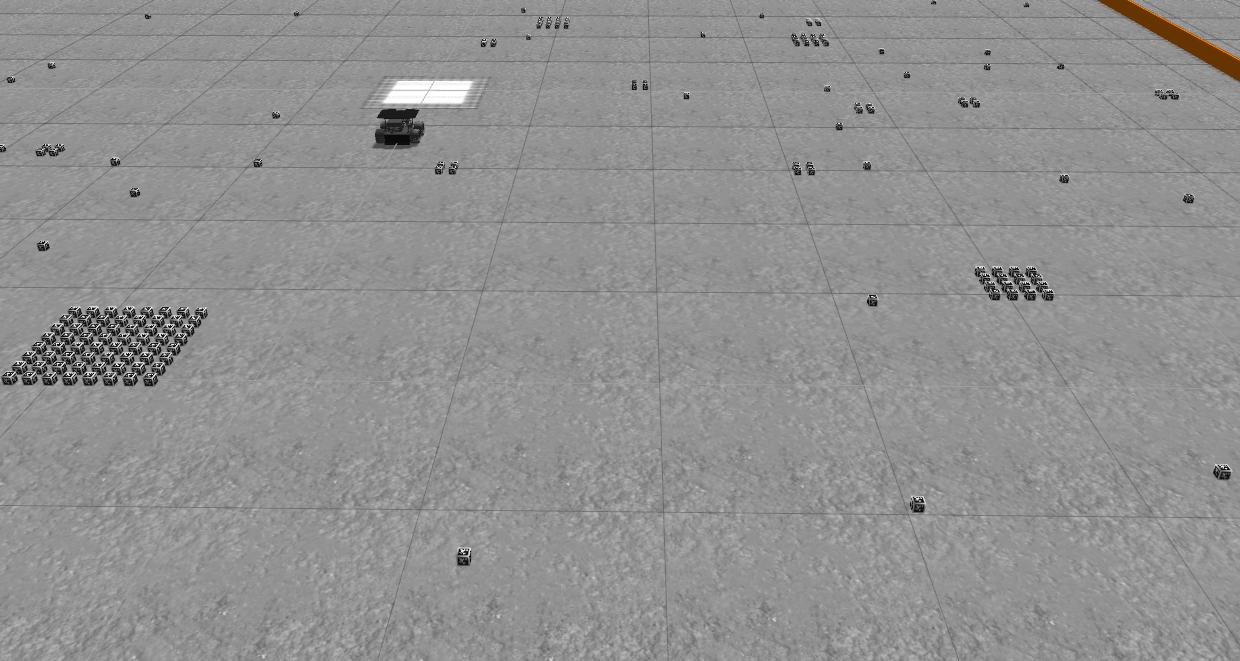

The goal of the CPFA is to maximize resource collection by swarms of robots by tuning parameters that we set. This means that we will have to run many trials of the CPFA to optimize the parameters. There are 3 types of resource distributions that we are concerned with: uniform, clustered, and power law.

A uniform distribution is a distribution of resources in a simulation where each April Cube (resource) is placed at a location chosen uniformly at random within the arena, independently of every other April Cube.

A clustered distribution of resources is a distribution of resources such that all cubes are divided equally into 4 portions and put together in clusters at random locations inside the arena. So if we have 256 cubes, we would have 4 clusters in random locations in the arena each containing 64 cubes.

A power law distribution is a hybrid in which we place piles ranging from single cubes all the way up to large clusters around the arena, with pile sizes that are powers of 2.
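The three layouts can be sketched in a few lines of Python. This is only an illustration under assumptions of my own: the arena is a square of side `arena_size` centered on the origin, each cluster is laid out as a small grid, and the power law case gives each pile size (1, 4, 16, 64) an equal share of the cubes. The function names, the `spacing` parameter, and that particular split are hypothetical and are not taken from the lab's code.

```python
import math
import random


def uniform_layout(n_cubes, arena_size, rng):
    """Place every cube independently at a uniformly random point."""
    half = arena_size / 2.0
    return [(rng.uniform(-half, half), rng.uniform(-half, half))
            for _ in range(n_cubes)]


def cluster(center, n_cubes, spacing):
    """Lay out n_cubes on a square grid starting at `center`."""
    side = math.ceil(math.sqrt(n_cubes))
    cx, cy = center
    return [(cx + (i % side) * spacing, cy + (i // side) * spacing)
            for i in range(n_cubes)]


def clustered_layout(n_cubes, arena_size, rng, n_clusters=4, spacing=0.1):
    """Split the cubes equally into n_clusters piles at random locations."""
    per_cluster = n_cubes // n_clusters
    cubes = []
    for center in uniform_layout(n_clusters, arena_size, rng):
        cubes.extend(cluster(center, per_cluster, spacing))
    return cubes


def power_law_pile_sizes(n_cubes, pile_sizes=(1, 4, 16, 64)):
    """Assumed scheme: every pile size gets an equal share of the cubes."""
    share = n_cubes // len(pile_sizes)
    sizes = []
    for size in pile_sizes:
        sizes.extend([size] * (share // size))
    return sizes


def power_law_layout(n_cubes, arena_size, rng, spacing=0.1):
    """Scatter piles of mixed sizes, from single cubes to large clusters."""
    sizes = power_law_pile_sizes(n_cubes)
    centers = uniform_layout(len(sizes), arena_size, rng)
    cubes = []
    for center, size in zip(centers, sizes):
        cubes.extend(cluster(center, size, spacing))
    return cubes


# Note: no bounds or overlap checks are done in this sketch.
rng = random.Random(0)
for layout in (uniform_layout, clustered_layout, power_law_layout):
    print(layout.__name__, len(layout(256, 15.0, rng)))
```

With 256 cubes, the assumed power law split yields 64 single cubes, 16 piles of 4, 4 piles of 16, and 1 pile of 64.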

We decided that the best long-term approach was to write a ROS node in Python that runs in parallel with the simulation and collects data, displays it, and also stores it for historical analysis over time. We could run a bash script that starts the simulation and ends it at set times using cron jobs. Python has many great libraries to help with data analysis.

Week 3

Initially, the objective for this week was to debug and improve the CPFA code we had written, but as fate would have it I was needed elsewhere. Starting on July 15, 2017 and ending on July 16, 2017, Joshua P. Hecker, Melanie E. Moses, Elizabeth E. Esterly, and G. Matthew Fricke will be in charge of running a swarm robotics hackathon inspired by the NASA Swarmathon competition at the Robotics Science and Systems (RSS) conference in Cambridge, Massachusetts.

A core challenge in the Swarmathon competition is solving the problem of localization effectively. There are 2 primary methods for the rovers to localize themselves in the competition. The first one is GPS, which we would intuitively think is an effective way for the rovers to localize themselves, but the GPS sensors the rovers are equipped with can have a massive error. The GPS error can be up to 5 meters in any direction around the rover, while the purpose of the Swarmathon is for the rovers to take April Cubes to the collection zone in the center of the arena, which has an area of only 1 square meter. With that much error, a rover relying on GPS alone could only find the nest by luck.
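To put those two numbers side by side, here is a rough back-of-the-envelope calculation. It simply compares the area of a disc with a 5 meter radius to the 1 square meter collection zone; it says nothing about how the GPS error is actually distributed.

```python
import math

GPS_ERROR_RADIUS_M = 5.0   # worst-case radial error quoted above
NEST_AREA_M2 = 1.0         # area of the collection zone

# Area of the disc in which the true position could lie.
error_disc_area = math.pi * GPS_ERROR_RADIUS_M ** 2
area_ratio = NEST_AREA_M2 / error_disc_area

print(f"GPS error disc: {error_disc_area:.1f} m^2")
print(f"Nest / error-disc area ratio: {area_ratio:.2%}")
```

The collection zone covers only a little over 1% of the area that a single GPS fix could correspond to.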

The second method for the rovers to localize themselves is to use the IMU along with the odometry (encoders) of the rovers and pass that data through an Extended Kalman Filter (EKF) to estimate the rover’s position in an odometry frame of reference. This turns out to be a really good method for the rovers to localize themselves over short distances, but as the distance from the center of the nest increases, the positional data that the rovers use becomes error prone due to accumulated drift. This means that the further the rover is from the nest while searching, the less reliable this data becomes.
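A toy model shows why the error grows with distance. The sketch below is not the EKF the rovers use; it assumes a rover driving in a straight line whose heading estimate is off by a small constant bias (2 degrees here, an arbitrary value), and it integrates the estimated position step by step.

```python
import math


def dead_reckoning_error(distance_m, heading_bias_deg, step_m=0.1):
    """Position error after driving straight with a constant heading bias."""
    bias = math.radians(heading_bias_deg)
    steps = round(distance_m / step_m)
    true_x = true_y = est_x = est_y = 0.0
    for _ in range(steps):
        # The rover really moves along the x axis...
        true_x += step_m
        # ...but the estimate integrates each step along the biased heading.
        est_x += step_m * math.cos(bias)
        est_y += step_m * math.sin(bias)
    return math.hypot(est_x - true_x, est_y - true_y)


for d in (1, 5, 10):
    print(f"{d:>2} m driven -> {dead_reckoning_error(d, 2.0):.3f} m error")
```

In this simplified model the error grows in proportion to the distance driven. Real drift also comes from wheel slip and sensor noise, so it is less regular, but the trend is the same.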

The hackathon will be run inside a large room filled with cameras. These cameras are connected to the ROS network and run a ROS package that detects April Tags, calculates their positions relative to a center tag using the standard transform library in ROS, and publishes this information to the network for the rovers to freely subscribe to. The positional data is very accurate and, as such, the localization problem is for the most part solved.
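The core of that calculation is a change of reference frame. The sketch below is a flat 2D simplification that I wrote for illustration; the real system works in 3D through the ROS transform library, and the function name and tuple layout here are hypothetical.

```python
import math


def pose_in_center_frame(center_in_cam, rover_in_cam):
    """Express a rover pose, seen by a camera, relative to the center tag.

    Poses are (x, y, yaw) tuples in the camera's frame, yaw in radians.
    """
    cx, cy, cyaw = center_in_cam
    rx, ry, ryaw = rover_in_cam
    dx, dy = rx - cx, ry - cy
    # Rotate the offset by -cyaw to align it with the center tag's axes.
    cos_c, sin_c = math.cos(-cyaw), math.sin(-cyaw)
    x = cos_c * dx - sin_c * dy
    y = sin_c * dx + cos_c * dy
    # Wrap the relative yaw into [-pi, pi).
    yaw = (ryaw - cyaw + math.pi) % (2 * math.pi) - math.pi
    return x, y, yaw


# Center tag at (2, 1) rotated 90 degrees; rover 2 m further along camera y.
center = (2.0, 1.0, math.pi / 2)
rover = (2.0, 3.0, math.pi / 2)
print(pose_in_center_frame(center, rover))
```

In this example the rover ends up 2 m along the center tag's own x axis, facing the same way as the tag, regardless of where the camera happens to be mounted.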

The challenge is to program the rovers to autonomously navigate around obstacles, collect April Cubes, and bring them back to a central collection zone without having to worry about any localization issues. This essentially allows participants to program the rovers in a simulation-like environment with real robots.

I was teamed with a few of the other DREU students and our job was to be guinea pigs for the upcoming hackathon. One of the rooms in the basement where our lab is located was set up with 3 cameras around the room, a central tag representing the collection zone, and rovers with April Tags mounted on top so the cameras could localize them.

I worked for a while with Jarett Jones, one of the programmers in the lab, to set up some of the cameras and make sure the computers were set up on the network correctly. After a day of testing to make sure all of the computers could communicate and all of the launch scripts ran correctly, we were able to start.

I like to follow an approach where I make very small steps forward that are robust and tested, and build off of those. First I decided that we should create a border with tape to mark where the cameras could see, so we could visualize the cameras’ blind spots. This would minimize the guesswork as to why a rover did not know its position when it was in a blind spot. To get the rover to navigate around obstacles to pick up an April Cube, the first step was to get the rovers to reliably drive from point A to point B.
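One simple way to approach the point A to point B step is a proportional controller: turn toward the goal in proportion to the heading error and drive forward in proportion to the remaining distance. The sketch below is a generic illustration, not the code we wrote; the gains, the tolerance, and the unicycle model used to test it are all assumed values.

```python
import math


def drive_command(pose, goal, k_lin=0.5, k_ang=1.5, tolerance=0.1):
    """Return (linear, angular) velocities steering `pose` toward `goal`.

    pose is (x, y, yaw); goal is (x, y). Gains and tolerance are
    illustrative values, not tuned for the Swarmies.
    """
    x, y, yaw = pose
    dx, dy = goal[0] - x, goal[1] - y
    distance = math.hypot(dx, dy)
    if distance < tolerance:
        return 0.0, 0.0  # close enough: stop
    # Heading error wrapped into [-pi, pi) so the rover turns the short way.
    error = (math.atan2(dy, dx) - yaw + math.pi) % (2 * math.pi) - math.pi
    # Hold still while facing away; drive faster once facing the goal.
    linear = k_lin * distance * max(0.0, math.cos(error))
    return linear, k_ang * error


def simulate(pose, goal, dt=0.05, max_steps=2000):
    """Integrate a simple unicycle model until the controller says stop."""
    x, y, yaw = pose
    for _ in range(max_steps):
        v, w = drive_command((x, y, yaw), goal)
        if v == 0.0 and w == 0.0:
            break
        x += v * math.cos(yaw) * dt
        y += v * math.sin(yaw) * dt
        yaw += w * dt
    return x, y, yaw


print(simulate((0.0, 0.0, 0.0), (2.0, 1.0)))
```

Testing a small piece like this in isolation first makes it much easier to tell a logic error apart from a localization or hardware problem later on.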

The most difficult part of this task was not the programming aspect of it, but getting everyone on board with how to do this. Much of my feedback was not being taken in, so I decided to take a back seat and allow another team member to take the lead role. This left me wondering what the best way is to communicate effectively with team members who aren’t open to ideas different from their own.

By the end of the last day of the week, the cohesion among all the team members finally started to improve and my ideas were being listened to. The last couple of hours were among the most productive due to all of us agreeing on the path to take as far as programming goes. We had ideas on how to map the obstacles using an occupancy grid that was updated using SLAM (Simultaneous Localization and Mapping), but the end of day beat us to the punch.

Week 4

At the end of this week Joshua Hecker will be coming to the lab so we can demonstrate the progress we have made on the hackathon, which means that the vast majority of my efforts will go into working on the hackathon. By this point I am burnt out on the hackathon, mainly because I'm naturally introverted and I like to work alone for a while before collaborating with others. I don't mind programming and solving problems in a group, but only for so long. I'm one of the more experienced programmers there, so every time I want to implement something I have to justify it and explain it to everyone else, which becomes tiring. I get the most work done when I can freely implement my ideas in solitude, without disruption. It seems that this summer's approach so far is to constantly pair me with other interns, which I'm becoming less and less fond of as time goes on. I don't agree that throwing all of the interns into one project is a good idea; more programmers is not always better.

We have one demo room with 3 cameras at the moment, all running on the computers on board a few of the rovers. Most of the work over the next few days will consist of installing more cameras and getting all of the software ready for the demo on Friday. At the same time, we have other interns who want to test out their ideas on the rovers. I'm not against this, but I believe an idea should meet two qualifications before it is tested in the demo room: 1) the idea should be built on something that already works and its complexity should be low, and 2) the idea should work in the simulation first. Trying to test something highly complex without first testing the simple components that make it up is almost guaranteed to fail and will be much harder to debug. The idea should also be implemented and tested in the simulated environment first because this catches logic errors before any hardware is involved. If the idea works in the simulation but not on the real rovers, then we can infer that the issue is related to localization or hardware. If we instead go straight to testing on the physical rovers when the logic has not been tested, it will take much more effort to debug. Not everyone held this belief, and I lost motivation to even try to implement ideas due to the resistance.

Most of my effort went into a technical support role as well as writing bash scripts to start up the cameras; basically, I acted as a system administrator. The default method of starting up the cameras was to SSH (Secure Shell) into each rover’s computer and manually start up the cameras attached to it. By this point one of the other interns was installing Ubuntu on dedicated computers for the cameras used to localize the rovers so that we didn’t have to rely on the rovers’ computers to run them. After she installed the operating system on them, I installed and prepared all the software the demo would run on.

One of the nice things about Linux is that its shell, Bash, is also a scripting language. With Bash we can write scripts that simplify tasks, so I wanted to write a script that started up any number of cameras from a laptop. By the end of the next day the script was written and we could start up all the cameras with a single command rather than manually starting each one, which took a long time, especially for the other interns who had very little Linux experience.
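The idea behind the script is just a loop over the camera computers that issues one SSH command per machine. The original was written in Bash; the version below is a Python sketch of the same idea, and the user name, host names, and launch command in it are hypothetical placeholders.

```python
import shlex


def camera_start_commands(hosts, launch_command, user="swarmie"):
    """Build one ssh invocation per camera host, without running anything."""
    commands = []
    for host in hosts:
        # -f sends ssh to the background before the remote command runs,
        # so one long-running camera process does not block the next host.
        commands.append(["ssh", "-f", f"{user}@{host}", launch_command])
    return commands


if __name__ == "__main__":
    # Hypothetical host names and launch file; substitute real ones.
    hosts = ["camera1", "camera2", "camera3"]
    launch = "roslaunch camera_pkg camera.launch"
    for argv in camera_start_commands(hosts, launch):
        print(shlex.join(argv))
        # To actually start the cameras: subprocess.Popen(argv)
```

Keeping the command construction separate from the execution makes it possible to print and check the commands before anything is started on the remote machines.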

As the week went on, the other interns were trying to implement their ideas and repeatedly assumed that their code worked. My belief is that if code hasn’t been shown to work, even if it compiles, then it doesn’t work. My response to this assumption, after the past 2 weeks, was to ask: can the robot loop in a square pattern around the collection zone while running the code? If the rover could do this reliably, then we could trust their navigation code. The other interns started to adopt this after some resistance, and it became the litmus test for whether their code worked.
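The square test is easy to express as a list of waypoints. A minimal sketch, assuming the collection zone sits at `center` and the navigation code accepts (x, y) goals; the function name and arguments are my own.

```python
def square_waypoints(center, side, laps=1):
    """Corner waypoints of a square loop around `center`, counterclockwise."""
    cx, cy = center
    h = side / 2.0
    corners = [(cx + h, cy + h), (cx - h, cy + h),
               (cx - h, cy - h), (cx + h, cy - h)]
    # Repeat the corners for each lap, then return to the start to close the loop.
    return corners * laps + [corners[0]]


for waypoint in square_waypoints((0.0, 0.0), 2.0):
    print(waypoint)
```

If a rover can visit these points in order for several laps without drifting off the square, its point-to-point driving is behaving consistently.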

Friday came and I finally saw Joshua Hecker, whose main purpose for being here that day was to be on the panel for the Ph.D. proposal of Qi Lu, aka “Lukey”. I really enjoyed working with Josh last summer because he and I think in a very similar manner and he’s also more experienced than I am, so it gave me a chance to work with someone who really acted as my mentor and from whom I could draw experience and knowledge without being restrained. Soon after everyone arrived we went off to Lukey’s proposal. His proposal was about the MPFA (Multi-Place Foraging Algorithm) for swarms of rovers.

The MPFA is built on two central ideas: 1) static collection zones and 2) dynamic collection zones (like dump trucks). The MPFA is designed to be scalable and to improve upon the CPFA. Essentially, the MPFA is many CPFAs running simultaneously/recursively. The weakness of the CPFA is that as soon as you start to scale up the number of robots collecting, the central collection zone becomes a bottleneck due to the increase in robot traffic. Having multiple collection zones reduces traffic and collisions as the rovers bring resources to a collection zone. The other advantage is that, since the collection zones are not centralized, the distance from a rover to its nearest collection zone will likely be shorter than the distance to a single central zone. This means that less time will be spent traveling to and from a collection zone and more time will be spent searching, so more effort goes into foraging for resources and less energy is spent carrying resources around. With dynamic collection zones, the zones can move around and position themselves optimally for the robots, further improving the efficiency of collection. The actual implementation of the dynamic MPFA still needs work, as we saw weaknesses in Lukey’s implementation. He also had problems communicating his ideas, which underscored for me the importance of clear communication as a key factor in research. This was a great learning experience for me.
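The travel distance argument can be checked with a quick numerical sketch. It assumes a square arena, straight-line travel, and four static zones placed at the centers of the four quadrants; the arena size and zone positions are arbitrary choices of mine, not values from Lukey's proposal.

```python
import math


def mean_distance_to_nearest(zones, arena_size, samples_per_side=100):
    """Average straight-line distance from grid points to the closest zone."""
    half = arena_size / 2.0
    step = arena_size / samples_per_side
    total = 0.0
    for i in range(samples_per_side):
        for j in range(samples_per_side):
            # Sample the center of each grid cell.
            x = -half + (i + 0.5) * step
            y = -half + (j + 0.5) * step
            total += min(math.hypot(x - zx, y - zy) for zx, zy in zones)
    return total / samples_per_side ** 2


ARENA = 20.0  # side length in meters (an arbitrary choice)
central = [(0.0, 0.0)]
quad = [(-5.0, -5.0), (-5.0, 5.0), (5.0, -5.0), (5.0, 5.0)]

print(f"one central zone: {mean_distance_to_nearest(central, ARENA):.2f} m")
print(f"four zones:       {mean_distance_to_nearest(quad, ARENA):.2f} m")
```

Under these assumptions the average trip to the nearest zone is roughly half as long with four zones as with one central zone, before even accounting for the reduced congestion.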

Following the presentation we headed back to the lab so we could demo the work we had done to Josh. I was not satisfied with our work, but I tend to be self-critical and to hold high standards for myself. We ended up demoing the rovers navigating in a square and collecting blocks for the lab, and everyone seemed to be satisfied with it, so I ended up being satisfied with it as well.

The following academic year will be the last year for me before I graduate with my B.S. in Computer Science and Engineering. I plan on going to grad school, so unfortunately I haven’t put in as much time to going out and enjoying myself. I’ve been focusing a lot on self improvement, such as working out and staying healthy, and studying/reading. I also do not have a car here and cannot freely enjoy New Mexico as it should be enjoyed. I would ideally like to go on a hike most weekends, but since I flew to Albuquerque I do not have the freedom to drive around.

Week 5

This week is the week of Independence Day, so we have a 4 day weekend. During the first 2 days of the weekend I spent my time studying ROS and keeping up my programming. We had Monday and Tuesday off as part of the 4 day weekend package deal and one of my fellow DREU interns, Valarie, invited us to go with her friends to a lake on Independence Day. I accepted and the Monday before I spent the day hanging out with my house mates trying not to think about my work.

That night Valarie invited me to her apartment so we could plan the upcoming day with her friends. We were going up to a place called Nambe Falls, up in Santa Fe, New Mexico. We drank some alcohol and chatted about it for a while and went out to buy all the supplies. Our plan was to grill patties for burgers, along with chicken, potatoes, corn, and pineapple.

The next morning I was picked up, and there were about 8 of us going up to Nambe Falls. We got to our destination and it was closed; we didn’t know that the location had set days and hours of operation. We decided to just go to the nearest lake, Santa Cruz Lake. I had not been to a good-sized lake in a long time and the sun was scorching my skin, so we hurried to take everything to the shade. I had really been looking forward to getting out into nature for a bit. We set up some music, started up the grill, and I started to help prepare the food. The food ended up being delicious, and soon after we decided to get into the water. I swam quite often as a child, so swimming out into the lake was something I couldn’t wait to do. The day passed by fairly quickly and I can say that I had a great time not having any worries for a day. It was surprisingly difficult to go a whole day without doing anything that required intense thought.

The following day I got back to work at the lab and, using the new knowledge gained from studying over the weekend, I started debugging the CPFA code we had written. At the same time, we had a lot of packing and preparation to do for the upcoming hackathon, so I was being dragged around everywhere helping out where I could. By the end of the next day the CPFA was free of any major issues and we had shipped out all the material for the hackathon.

On Friday I had finished any major work I was working on, so I took care of some small tasks. The first task was developing parameter files that could easily be modified, so that if any user of this code wanted to change CPFA parameters, it would be as easy as editing the config files. While looking for the best way to do this, I found that ROS already had something like this and I just had to use it. ROS uses .yaml (YAML Ain’t Markup Language) files to store parameter information, and I could load those files using a ROS .launch file. After the parameters are loaded into the appropriate namespace, I could then grab them in the CPFA code to have the right settings loaded. This was a much quicker task than I had anticipated, so afterwards I learned how the Swarmathon GUI worked from Antonio, one of the GUI developers in the lab.

I had never worked on GUI development, so for me the connection between a GUI and the code behind it was hidden behind a veil. As soon as he showed me how it worked, I was able to connect any new portion of the GUI with the code. We use something called Qt for our GUI development and all of the back end is in C++, which I’m fairly familiar with. I made a simple widget that allows us to load custom parameters at the click of a button, which will be extremely useful for future simulation runs of the CPFA.

Lastly, a style guide had just been released a few weeks ago, but I hadn’t heard of it until about a week before and had been wanting to read through it. Since I was mostly done with everything I wanted to do for the day, I read through the style guide. I finished the day by converting the CPFA code base to match the new style guide.

That night Valarie invited me to her apartment so we could plan things out with her friends for the upcoming day. We were going up to a place called Nambe Falls, up in Sante Fe, New Mexico. We drank some alcohol and chatted about it for a while and went out to buy all the supplies. Our plan was to grill some patties to make some burgers, grill some chicken, potatoes, corn, and pineapple as well.

The next morning I was picked up and there were about 8 of us going up to Nambe Falls. We get to the destination point and it’s closed; we didn’t know that the location had days and hours of operation. A decision was made and we just went to the nearest lake to us, Santa Cruz Lake. I had not been to a good sized lake in a long time and the sunlight heat was smoldering my skin, so we hurried to take everything to the shade. I was really looking forward to going out to nature for a bit. We set up some music, started up the grill and I started to help prepare the food. The food ended up being very delicious and soon after we decided to get into the water. I grew up swimming quite often as a child, so swimming out into the lake was something I couldn’t wait to do. The day passed by fairly quick and I can say that I had a great time being able to not have worries for a day. It was surprisingly difficult to do nothing for a day that required intense thought.

The following day I got back to work at the lab and, using the new knowledge I had gained from studying over the weekend, I started debugging the CPFA code we had written. At the same time, we had a lot of packing and preparation to do for the upcoming hackathon, so I was being dragged around everywhere, helping out with what I could. By the end of the next day the CPFA was free of any major issues and we had shipped out all the material for the hackathon.

By Friday I had finished all the major work I had been doing, so I took care of some small tasks. The first task was developing parameter files that could easily be modified, so that any user of this code who wanted to change CPFA parameters would only have to edit the config files. While looking for the best way to do this, I found that ROS already had something like this and I just had to use it. ROS uses .yaml (YAML Ain’t Markup Language) files to store parameter information, and I could read those files using a .launch file with ROS. After the parameters are loaded into the appropriate namespace, I can then grab them in the CPFA code to have the right settings loaded. This was a much quicker task than I had anticipated, so I then learned how the Swarmathon GUI worked from Antonio, one of the GUI developers in the lab.
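The namespaced lookup described above can be sketched in plain C++. This is only an illustration under stated assumptions, not the lab's code: the `ParamStore` map is a stand-in for the ROS parameter server, and the parameter names and default values are hypothetical placeholders rather than tuned CPFA settings. In a real node the lookup would presumably go through roscpp's own parameter API (for example `ros::NodeHandle::param`, which also falls back to a default when a value is missing).

```cpp
#include <map>
#include <string>

// Stand-in for the ROS parameter server: fully qualified name -> value.
// (Assumption: the real values would come from a .yaml file loaded by a .launch file.)
using ParamStore = std::map<std::string, double>;

// Hypothetical subset of CPFA settings; names and defaults are placeholders.
struct CpfaParams {
    double probabilityOfSwitchingToSearching = 0.1;
    double probabilityOfReturningToNest = 0.01;
    double rateOfPheromoneDecay = 0.05;
};

// Look up "<ns>/<key>" and fall back to a default when the parameter is not set.
double paramOr(const ParamStore& store, const std::string& ns,
               const std::string& key, double fallback) {
    const auto it = store.find(ns + "/" + key);
    return it != store.end() ? it->second : fallback;
}

// Fill a CpfaParams struct from one namespace, keeping defaults for missing keys.
CpfaParams loadCpfaParams(const ParamStore& store, const std::string& ns) {
    CpfaParams p;
    p.probabilityOfSwitchingToSearching = paramOr(
        store, ns, "probability_of_switching_to_searching",
        p.probabilityOfSwitchingToSearching);
    p.probabilityOfReturningToNest = paramOr(
        store, ns, "probability_of_returning_to_nest",
        p.probabilityOfReturningToNest);
    p.rateOfPheromoneDecay = paramOr(
        store, ns, "rate_of_pheromone_decay", p.rateOfPheromoneDecay);
    return p;
}
```

Keeping the defaults in the struct means a config file only has to list the values a user actually wants to change.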

Week 6 & 7

The past 2 weeks have been closely related to each other because of the hackathon, so I’ll merge them into one entry. Everything before the hackathon consisted of preparation, and now that week 7 has just about concluded, I am slowly getting back up to speed.

By the time we had reached the final days before the hackathon, most of the lab had traveled to Massachusetts for the RSS conference. Those days consisted mainly of side work that was not essential. Some of these tasks included cleaning up my journal a little bit, starting on my presentation of the CPFA work I have done, and testing the simulations. The day before we flew out, we all left work early to prepare for the next day.

The flight to Massachusetts the following day was long and arduous, at least mentally. When our final flight landed, I realized I had lost my wallet. It was a sensation I had never felt before, and I was less stressed out than I would have imagined being in that scenario. I called all of my banks, cancelled my cards, filed a lost and found report with the airport and moved on. Unfortunately my bank had no branches in the area, so I didn’t have any access to money during my stay there. I had to rely on the good nature of other people, which I am not used to, so that was something I had to adjust to.

We arrived relatively late in Cambridge; the city gave me the vibe that if I saw a man in a white powdered wig walking around, I wouldn’t have been fazed. The dorms we were staying in were part of Lesley University, which was also where the hackathon would be taking place, so near MIT but not exactly in it. I set my alarm to wake up early and woke up that Saturday feeling like a zombie, because my circadian rhythm had been thrown off balance and because of the long flights the day before. I was really looking forward to breakfast and to meeting new people.

I remember that when I attended the RSS conference last summer, the best thing was meeting like-minded people whom I could connect with, so I felt excited to meet some new people like me. I won’t go into all the details of the preparation and of introducing myself to new people, but I can say that the people I met and worked with during the hackathon have had a profound influence on me. I feel like I matured a little bit. The best feeling is being able to talk with people who are like me without having to filter my ideas and thoughts or hold back. With the people I tend to meet at these conferences I can speak freely and unfiltered, and I always end up having a lot of questions and learning about new things.

My main job, besides being support staff and an organizer, was to be a mentor to the participating teams. All of the students had previously participated in the NASA Swarmathon and were invited to join this hackathon. They were randomly assigned to teams of 4-5 people. A single mentor was assigned to each team at any one time, and every few hours we rotated. I found that many of the teams had a high-level idea of what they wanted to accomplish, but lacked the experience to break down and convert these high-level ideas into discrete algorithms, data structures, pseudocode and flowcharts. I focused mainly on using the Socratic method, asking questions in a way that would lead them to come up with the right answer, and most teams were able to organically come up with an algorithm to solve the problems they were facing at the time. My main emphasis in mentoring or tutoring people is to make sure they have a fundamental understanding of the problem based on their level of experience and knowledge, and to build on that. I think this is a slower approach than simply giving them the answer, but I feel like it teaches them to fish rather than handing them an already caught fish.

By the end of the night I was a bit delirious, but really satisfied with my work. I had nothing but positive feedback from everyone I worked with and everyone was grateful for my help. By this time it was Sunday morning and the preparations for the final runs were under way. In the end, only one of the teams was able to successfully pick up blocks and drop them off at the collection zone. It turned out to be a very difficult task, which was a bit unexpected, but not surprising.

Afterwards I went out to lunch with some new friends I had made, as well as some old ones, and had a good time. I was pretty sleep deprived at this point, so the details are hazy, but later that night we ended up going to eat at a Chinese restaurant, and the tea in particular was delicious. The next morning everyone was going home, and it felt bittersweet. In 2 days I had been able to connect deeply with people, so I really didn’t want it to end. I woke up early that day and went out to eat breakfast, and as the day went on I said my goodbyes. Valarie, Kelsey and I went on a walk before our flight back. We walked around Harvard Square and into the Harvard campus. I guess the way I imagine a prestigious university and the actual experience of being there are different. I didn’t feel a particular spark with the university for whatever reason. The day ended quickly and we arrived back in Albuquerque near midnight that Monday.

We had the next few days off, and since then I’ve been working on combining some refactored code from the Swarmathon base with the CPFA code we had written.

Week 8, 9 & 10

The final 3 weeks of my internship were all related to finishing up my implementation of the CPFA with the code refactor. By the end of week 9 we needed to have some data collected for our implementations. That left me only 2 weeks to read the new code base and move the fully tested CPFA code from the old code base to the new refactored one.

Week 8 was about combing through the code base in fine-grained detail. It was my chance to make sure I fully comprehended the majority of the code base, at least the parts concerning the controls of the robot. As I was reading through the refactored code base I found a few bugs, and Jarett fixed them, as he and Kelsey were the ones working on the refactor. As I added print statements and ran the simulations over and over, I started to see the flow of how they had designed their software and was able to add in the code for the CPFA to work by week 9. Merging in the CPFA class that we had designed was the most time-consuming part because it didn’t fit into the architecture of the project.

I quickly got the vast majority of the code running, and the last 2 days before the demo to Melanie were spent debugging, collecting data and making sure everything ran correctly. By the time the deadline arrived, I still had 1 bug left that I hadn’t had time to fix, but it was fairly uncommon, so I didn’t have to worry much about it appearing. I also had to implement my own obstacle avoidance for the robot, which I didn’t have as much time to develop as I wanted. The result was a fairly clunky obstacle avoidance algorithm that worked but was not optimal.

The trials were run as follows:

3 rovers

30 minutes

256 blocks in a power distribution

The CPFA parameters were untuned

Everything ran beautifully, minus that one bug and one other issue: when pheromone recruitment was activated on a cluster of resources and rovers started to gather at that cluster site, the time they spent on obstacle avoidance went way up and block collection slowed, especially with the congestion near the central collection zone.

The results gave us insight into how well the CPFA performed in a simulated environment, with the potential for up to a 50% increase in performance with tuned parameters. Comparing these results to those of the simulated Swarmathon teams told us that it did fairly well, considering it wasn’t optimal just yet.

The next step was reworking the code a third time. I wanted to learn what Dr. Fricke really wanted from the refactored code, so we sat down in the conference room and designed the CPFA for the refactor from scratch, the way it was meant to be designed, without the pressure of a deadline.

The refactored code was all about removing and inserting controller classes that were supposed to follow a generalized format for sharing information. Depending on the current “process state”, different controllers would run concurrently with each other and could throw interrupts. If an interrupting controller had a high priority, it would take over for a moment; otherwise the controller with the lowest non-negative priority ran by default.
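One way to picture the arbitration described above is the small C++ sketch below. It is a guess at the general shape, not the refactored Swarmathon code: the `Controller` struct, its field names and the `selectController` function are all hypothetical, and the sketch assumes that a negative priority marks a controller as inactive in the current process state.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical controller record; the real refactored classes are not shown in this journal.
struct Controller {
    std::string name;
    int priority;       // assumption: negative means inactive in the current process state
    bool hasInterrupt;  // true when the controller is asking to take over
};

// Returns the index of the controller that should drive the robot,
// or -1 if no controller is active.
int selectController(const std::vector<Controller>& controllers) {
    int interrupting = -1;  // highest-priority active controller that raised an interrupt
    int fallback = -1;      // active controller with the lowest non-negative priority
    for (std::size_t i = 0; i < controllers.size(); ++i) {
        const Controller& c = controllers[i];
        if (c.priority < 0) {
            continue;  // inactive controllers can neither interrupt nor be the default
        }
        const int idx = static_cast<int>(i);
        if (c.hasInterrupt &&
            (interrupting < 0 || c.priority > controllers[interrupting].priority)) {
            interrupting = idx;
        }
        if (fallback < 0 || c.priority < controllers[fallback].priority) {
            fallback = idx;
        }
    }
    // An interrupting controller wins; otherwise the default controller runs.
    return interrupting >= 0 ? interrupting : fallback;
}
```

With a search controller at priority 0 and an obstacle controller at priority 10, for example, search would run by default and obstacle avoidance would take over only while it is raising an interrupt.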

I started implementing this design, but never finished it. Looking back at the last few months, I feel like I should have sat down with Dr. Fricke earlier, so that I would have spent less time rewriting code. The lesson for me is that I still need to work on my communication skills and that I should fully understand what my superiors expect from my work before I go all in.

Overall I’m satisfied with my work even though it wasn’t as well received as I would have wanted, which is simply my ego and nothing more. I did my best and that’s all I could have done and I learned from my mistakes.

On the second to last night we all went out to a bar, had drinks and talked about our futures and how our experiences had been that summer. I just want to say that it was a pleasure working with everyone in the lab this summer and that I learned at least a few things from every person there. Dr. Moses has been a tremendous positive force in my life and I can’t thank her enough for the work she has done and for how much it has changed my life for the better. I’m very grateful and appreciative for having been given this opportunity by such great, hard-working people.
