Computer Science Research Internship

Link to Final Report (692 KB)

During the summer of 2015, I participated in a research internship called DREU at the University of Minnesota in Minneapolis, Minnesota. I worked in the Motions Lab under Dr. Stephen Guy. My project involved working with graduate student Nick Sohre on making Unity graphics appear 3D on a 3D TV. This was my experience:

WEEK 1

Day 1: I arrived at the U of M campus and was introduced to some of the faculty and other students. I was then given a tour of the University's East Bank and the Computer Science Building (Keller Hall), where I would be working. Following the tour, the other REU students and I had lunch with some of the mentors and discussed research projects. At lunch, I met my mentor, Stephen Guy, and my fellow mentee, Martine (also working with Stephen Guy). After this, I was brought to the lab I would be working in and met the grad students Stephen currently advises. There, I discussed ongoing research projects with the students and began programming in Unity for the first time.

Day 2: To become more familiar with Unity, I built many games by following Unity's online tutorials. In the afternoon, I attended the Big Data Technical Training taught by Amy Larson to learn more about programming in C++ and using GitHub.

Day 3: On Wednesday, I continued to work with Unity, making games and experimenting. When Stephen arrived, I talked with him about possible projects and current research.

Day 4: On Thursday, I developed a mini game in Unity and read two conference papers written by Stephen Guy and Vipin Kumar. To prepare for Friday's workshop, I wrote summaries of the papers and listed their similarities and differences.

Day 5: In the morning, I went to the scientific communication workshop led by Amy. There, we discussed the format of quality conference papers and looked at research databases. Afterwards, Martine and I went back to the lab to work on a new tutorial Stephen had given us on detecting faces in MATLAB.

WEEK 2

Day 6: To start off the week, I attended the second Big Data Technical Training session with the other REU students to learn more about programming in MATLAB. There, we worked on fixing a program that analyzed neurological data provided by one of the University's researchers. Following the session, I went back to the lab to continue working on my face recognition program in MATLAB.

Day 7: For the majority of the day, I worked on my face recognition program and explored some of MATLAB's features.

Day 8: I started off the day by reading some of Stephen's conference papers posted on his website. Then, before lunch, Stephen presented some of his research to all of the computer science interns. After that, we had lunch and talked to some of the faculty. To finish off the day, I went back to the lab and learned about some of the projects that the grad students were working on.

Day 9: On Thursday, the REU students and I attended another Big Data Technical Training session with Amy to learn about LaTeX. For the rest of the day, I read more about crowd simulation and learned more C# programming.

Day 10: In the morning, I worked on a Unity project, requested by Stephen, that could be viewed on the Panasonic 3D TV we have in the lab. When Stephen came into the lab, I talked to him about displaying one of the grad students' work on the 3D TV and setting up a camera to track viewers' faces as they look at the 3D image. After doing some research online, I found a downloadable Unity asset that adapts 3D Unity projects for 3D TVs.

WEEK 3

Day 11: I worked on making a prefab in Unity that could take in Nick's face game object and make it compatible with a 3D TV. Once I got the game view to show two slightly spaced cameras side by side, I worked on letting the user move around the object to see different views.

Day 12: I spent most of the morning working on the Unity prefab for the face game object again. I had difficulty getting the images from the two cameras (the two eyes) stitched next to each other in the final texture. Once I figured out how to do this (using RenderTextures), Nick and I were able to attach my prefab to his facial animation to make it appear three-dimensional on the 3D TV. By altering Nick's code, we implemented controls that move the two cameras closer to or farther from each other and flip the two camera views on the projection screen (making the image appear either to come out of the screen or to go into it).

Day 13: In the morning, I worked on finding the optimal settings for viewing the face in 3D. In the afternoon, we had a research colloquium by Dr. Dan Knight. After the talk, we ate lunch with some of the faculty and met some of the participants in Maria's high school girls' camp, which introduces girls to the University's computer science program.

Day 14: On Thursday, I worked with the new TrackIR camera, which allows for face tracking. We wanted to use this camera to track a user's face and position as they physically moved around to view the sides of the 3D face. Before lunch, Martine and I went to the Virtual Reality lab, where some of the REU and DREU participants were doing research. While there, we tried walking around in virtual reality and learned about some of the other students' projects. Following the tour, Martine and I joined some of the other members of the graphics department for lunch at a restaurant on campus, where we talked with faculty and students about research and other topics.

Day 15: In the morning, I attended another one of Amy's communication workshops. There, we practiced giving our "elevator talks" about our research. We also practiced writing an abstract for a paper that Amy had previously written. After this, I went back to the lab and continued working with the TrackIR and Unity. After a lot of searching online, I found code that would interpret the camera's data in Unity. Once I had this working, I could move the head detector around and my Unity images would move accordingly: when I moved my head to the left, the image would move to the right, and vice versa. Once I had this implemented in my demo project, I added it to Nick's Unity face.

WEEK 4

Day 16: For the majority of the day, I worked on showing Nick's Unity face on the 3D TV. I had trouble finding the optimal sensitivity for the TrackIR camera's movement. I wanted the image on the screen to move naturally as the user moved forward, backward, sideways, up, and down. Another issue was finding the detection range of the TrackIR detectors. Once I found it, I knew the bounds within which the user could move before the detectors lost their signals. Once all of this was done, the user could move their head (or body) in 3D space to see Nick's Unity face from different angles.

Day 17: To prepare for presenting my research to Maria's high school girls' camp, I added features to my Unity program to make it more presentable. In the afternoon, we had lunch with the girls, presented our research, and answered some questions.

Day 18: On Wednesday, I worked more on calibrating the TrackIR camera. Since the range of the camera was so small, I had to place the camera where it got the best view of the user and the detectors. In the afternoon, we listened to a presentation about Zooniverse (a website where people can volunteer to help with research projects). After having lunch with the girls' camp again, I went back to the lab to work more on perfecting the head tracking in Unity (possibly by starting from scratch).

Day 19: In the morning, I worked on replacing key commands with GUI controls to make the program more user friendly. For lunch, the graphics group got together at a local restaurant to talk about research and logic problems. When I got back to the lab, I continued working on my GUIs.

Day 20: Since we didn't have our communication workshop with Amy, I went to the lab and continued working on my GUIs. Once all of my GUIs were working, I built my program and was able to use all of them while moving my head around. After this, I experimented with some of TrackIR's features to make the camera track more accurately.

WEEK 5

Day 21: On Friday, Stephen and I talked about making the Unity camera's movements proportional to the user's movements as measured by the TrackIR camera. I could do this by putting the TrackIR camera in its one-to-one setting. Another thing we wanted to try was placing the Unity camera at approximately the same distance from the face as the user was from the TrackIR camera. This would make the face look like a real face floating in front of the screen, right in front of the user. I got this distance by measuring how far the detectors on my head were from the TrackIR camera.
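The head-coupled view from Days 15, 16, and 21 can be summarized in a short sketch. It is written in Python for brevity rather than the Unity C# the project used. Every name in it (`TrackedVolume`, `camera_position`, `projected_x`), the metre units, the box-shaped tracking range, and the 0.6 m viewing distance are illustrative assumptions. This is not the TrackIR SDK or the code I actually ran. A `gain` of 1.0 plays the role of the one-to-one setting, and the axes follow Unity's left-handed convention (+x right, +y up, camera looking down +z).

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class TrackedVolume:
    """Hypothetical box (in metres) inside which the tracker reports reliable data."""
    lo: Vec3
    hi: Vec3

    def clamp(self, p: Vec3) -> Vec3:
        # Keep the head position inside the tracked range so the view does not
        # jump when the user steps outside what the detectors can see.
        return tuple(min(max(v, lo), hi) for v, lo, hi in zip(p, self.lo, self.hi))


def camera_position(head: Vec3, head_rest: Vec3, camera_rest: Vec3,
                    volume: TrackedVolume, gain: float = 1.0) -> Vec3:
    """Offset the virtual camera by the head's displacement from its rest pose.

    gain = 1.0 is a one-to-one mapping: 10 cm of head motion moves the
    camera 10 cm, assuming scene units are metres.
    """
    head = volume.clamp(head)
    return tuple(c + gain * (h - r) for c, h, r in zip(camera_rest, head, head_rest))


def projected_x(point: Vec3, camera: Vec3, focal: float = 1.0) -> float:
    """Horizontal image coordinate of a point for a pinhole camera looking down +z."""
    return focal * (point[0] - camera[0]) / (point[2] - camera[2])


volume = TrackedVolume(lo=(-0.3, -0.2, -0.3), hi=(0.3, 0.2, 0.3))
face = (0.0, 0.0, 0.0)
# Rest pose: camera as far from the face as the user sits from the tracker (assumed 0.6 m).
cam = camera_position(head=(-0.1, 0.0, 0.0), head_rest=(0.0, 0.0, 0.0),
                      camera_rest=(0.0, 0.0, -0.6), volume=volume)
print(projected_x(face, cam) > 0)  # True: head moves left, face shifts right on screen
```

Clamping reflects the Day 16 finding that the detectors lose their signal outside a limited range. The pinhole projection is included only to check the Day 15 behavior: moving the head left shifts the face right on screen.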

Day 22: For most of the day, I worked on debugging the face program so it ran more smoothly. Some of this work included making the GUI look nicer and adding more useful buttons. I also looked into what we would need to do to have the Unity program run on a Mac. I found that we needed to get a Mac developer license, get a Mac computer to build on, and get an iPad to test the program on.

Day 23: On Wednesday, I worked on fixing some of my GUIs and also worked with Nick on better calibrating the TrackIR camera by editing our code. In the afternoon, we had a talk by one of the data scientists on campus, who has worked on many projects that predict behavior, including ocean currents. After lunch, I went with Stephen and Nick to another professor's lab on campus to test a simple demo project built in Unity on a Mac and then run on an iPad. Fortunately, the project ran successfully on both the Mac and the iPad. When we got back to our lab, Nick, Stephen, and I talked about the next steps for the user study at the State Fair. Some of the things we knew we would have to work out included: getting the project onto each of the iPads, finding a way to get the data from the iPads to a cloud service or server, having GUIs display information and directions to viewers, creating a rating system for users to rate the quality of the simulation, and playing videos in Unity to display the animations.

Day 24: In the morning, we had a Career Savvy Workshop with Amy where we listened to two University faculty members talk about their experiences choosing between grad school and industry. When they were done, I went to the graphics lunch again with students and faculty from some of the other labs to discuss our research topics. After lunch, the graphics group took a tour of one of the labs on campus. There, we got to experience the virtual reality cave, 3D printing, hand-held virtual reality, and many other things.

Day 25: July 3rd, day off.

WEEK 6

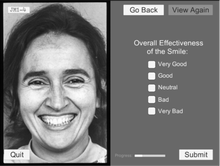

Day 26: I started a new Unity project to package the face as an app. The first thing I tested was getting videos to play in Unity, so that I could include the recorded animation of the face in the project and play it over and over again. After this, I wanted to get the GUI working. On the GUI, I wanted to display the directions, a reset button, the rating system, and a submit button.

Day 27: I spent most of my day making sample layouts for the user study app. I talked with Nick and Stephen about the formats they thought best suited the purpose of the project. I also experimented with various rating systems using different GUI objects in Unity, including sliders, toggles (check boxes), text boxes, and buttons. Once I had a few designs ready, I shared them with Stephen and Nick for their opinions.

Day 28: On Wednesday, I worked on the app's buttons. I got all of the buttons to go to the appropriate places so the user could properly navigate the app. I was going to test the app on the iPad, but the person who helped me run the app last time was not in the lab, so I decided to wait. Before lunch, we had a talk from one of the professors working with REU students. Afterwards, we had lunch with the other computer science interns again. In the afternoon, I worked more on the format of the app and talked to Stephen and Nick about their thoughts.

Day 29: When I got to the lab in the morning, I started working on some of the things Stephen, Nick, and I had talked about on Wednesday. One thing we wanted to incorporate into the app was a questionnaire that asked the user about things such as their age, gender, zip code, and how much alcohol they had recently consumed. Stephen thought it would be a good idea to include this last question since he thought a person's blood alcohol level could influence their responses. For lunch, the graphics department went to another restaurant near campus to discuss progress.

Day 30: On Friday, I mostly worked on getting my app to run on the iPad. To get the Unity project from my PC to the iPad, I first had to transfer the project to a Mac and then run the app on the iPad from there. Since we were going to have a meeting on Monday with our collaborators, I wanted to have the app working on the iPad so I had something to show them.

WEEK 7

Day 31: To prepare for the meeting with the facial reconstructive surgeon, I worked more on getting everything in the app to work. I mostly worked on getting the app to iterate through the video and rating slides multiple times, depending on the number of videos I wanted to show each user. I was able to get this process working on my PC, but since I didn't have a Mac to build the project on for the iPad, I couldn't test it there. One issue I had on the PC was that it was unable to play my video files, since they were made for mobile devices only.

Day 32: I worked mostly on the tasks we had talked about at the meeting on Monday. The first thing I did was change the orientation of the app to horizontal to fit more information on the screen. Since we decided we wanted the face video to play on the left side of the screen and the rating system to be on the right, I needed to find a way to play videos on the iPad that would not go full screen like they had before.

Day 33: I ended up installing a third-party plugin that allowed me to get around Unity's limitations. It took me a while to get its code to do what I wanted. Nick had also brought in his personal iPad 2 for me to work on. Unfortunately, the iPad 2's screen resolution was lower than the iPad 3's, so I needed to adapt my app to the new screen. With no Mac computer to get the app onto the iPads, I had to wait for someone in the other lab to let me work on his computer. Before lunch, we listened to Dan Keefe present his research and talk about the Interactive Visualization Lab (the lab I occasionally went to work in). Then came lunch and some discussion about current research struggles. When I went back to the lab, I incorporated the plugin I had downloaded into my app so I could play videos. Before I was able to test this on the iPad, I went to a conference paper discussion group led by a professor on campus to talk about graphics papers to be presented at SIGGRAPH 2015.

Day 34: On Thursday, I worked more on getting one of the face videos to play in the app using the new plugin. For lunch, I went with the other members of the graphics department to a restaurant near campus to talk about projects and research. After lunch, I met with Stephen to ask about borrowing another lab's Mac Mini so we could build apps in our own lab.

Day 35: On Friday, I spent most of the day in the other lab testing and building my app. After many attempts, I was able to get the Unity scenes to play properly on the iPad. I was also able to get all of the scenes to display horizontally.

WEEK 8

Day 36: In the morning, I went over to Dan Keefe's lab to borrow their Mac Mini for my remaining three weeks at the University. All I had to do was connect the Mac Mini to one of the new monitors we had just gotten. With the Mac Mini and Nick's iPad 2, I no longer had to go to the other lab to borrow equipment. While I waited for Nick to bring in the iPad, I looked for a SIGGRAPH paper interesting enough to talk about at Wednesday's SIGGRAPH meeting. I ended up choosing an interesting paper on facial reconstruction (similar to my research). For the rest of the day, I worked on getting my app to retain its information after changing scenes (by default, this information is lost).

Day 37: For the majority of the day, I worked on getting Xcode to build my Unity app onto Nick's iPad. It took a while to get the app into development mode and update everything. Once the app was building to the iPad, I could see what I needed to fix. One thing I changed was the position and size of the screens that played the movies; before, the videos were in the wrong spot with strange shadows. After some fixing, the video looked much nicer. For the next day, I knew I needed to get the app not just to iterate through all of the video files in my folder but also to play each of them (it was only playing one video). I also had to get the video to replay when the user pressed the View Again button.

Day 38: Before lunch, I mostly read the SIGGRAPH paper for the week and took notes on it since I had to talk about it at the meeting. After lunch, I worked on allowing the app to run in horizontal and vertical orientation on the iPad depending on how the user held the device. In the afternoon, I went to the SIGGRAPH meeting and talked about an interactive virtual surgery simulation that I read about.

Day 39: In the morning, I had a meeting with Stephen, Nick, the plastic surgeon (Sofia), a physiology professor, and some of the people who would be volunteering at the State Fair to run the user study. Just before the meeting, I got the app to replay videos when the user pressed the View Again button, and to go through all of the videos I had provided instead of playing the same video over and over again. At the meeting, we mostly talked about minor changes needed in the app and signed up for time slots to volunteer at the State Fair. Afterwards, I worked on implementing some of the changes we had discussed, including playing the videos in a random order for each user. I also wanted the app to reset the toggle boxes and slider ratings every time a new video was played.

Day 40: On Friday, I worked on getting the program to read in still image files and display them just like the videos. To do this, I had to edit my code so it could tell whether each file was a video or an image. A tricky part of this process was accessing the files on the iPad, since its file system is organized differently.

WEEK 9

Day 41: Once I figured out the iPad's file system, I was able to get both images and videos to play in the app for users to rate. The other members of my team wanted to include the images so users could use well-studied images of facial expressions as references when rating the animated videos.

Day 42: I made a lot of progress on the app on Tuesday. First of all, I got the navigation to work properly throughout the app. I also got the stimuli (videos and still images of faces) to appear in random order for users to view.
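Below is a minimal sketch of the two pieces described on Days 40 and 42: telling videos apart from still images, and putting the stimuli in a random order for each participant. It is written in Python, not the Unity C# the app actually used. It assumes files are classified by extension, and the extension lists and file names are hypothetical. The shuffle is the standard Fisher-Yates algorithm.

```python
import os
import random
from typing import List, Sequence

# Hypothetical extension lists; the real app's file formats may differ.
VIDEO_EXTS = {".mp4", ".mov", ".m4v"}
IMAGE_EXTS = {".png", ".jpg", ".jpeg"}


def stimulus_kind(filename: str) -> str:
    """Classify a stimulus file as 'video' or 'image' by its extension."""
    ext = os.path.splitext(filename)[1].lower()
    if ext in VIDEO_EXTS:
        return "video"
    if ext in IMAGE_EXTS:
        return "image"
    raise ValueError(f"unsupported stimulus file: {filename}")


def shuffled_order(stimuli: Sequence[str], rng: random.Random) -> List[str]:
    """Return a new list with the stimuli in uniformly random order (Fisher-Yates)."""
    order = list(stimuli)  # copy so the master list is left untouched
    for i in range(len(order) - 1, 0, -1):
        j = rng.randint(0, i)  # randint includes both endpoints
        order[i], order[j] = order[j], order[i]
    return order


stimuli = ["smile_a.mp4", "smile_b.mp4", "reference_smile.png"]
rng = random.Random()  # a fresh generator for each participant session
for name in shuffled_order(stimuli, rng):
    print(stimulus_kind(name), name)
```

Python's built-in `random.shuffle` does the same job in place. The loop is written out here only to show the algorithm. Copying the list first keeps the master stimulus list in a fixed order, so each session can draw its own ordering from it.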

Day 43: On Wednesday, I got many things working in the app. I fixed many of the bugs I was having, such as information clearing when switching screens, the app going in the wrong order, and videos not loading. I also figured out a way to organize all of the user's input so I could send it to a server for storage. In addition, I added a progress bar at the bottom of the screen to show users how much they had left. Another change I made (at the request of other team members) was to prevent users from going back and changing their responses after submitting them, so that they would not second-guess themselves.
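The Day 43 changes could be organized roughly as follows. These are collecting each user's input for upload, showing a progress bar, and locking answers once they are submitted. This is a hypothetical Python sketch: the class name, field names, and JSON layout are assumptions, and it does not talk to any real server.

```python
import json
from typing import Dict, List


class RatingSession:
    """Hypothetical record of one participant's answers, ready to upload."""

    def __init__(self, participant_id: str, stimuli: List[str]):
        self.participant_id = participant_id
        self.stimuli = list(stimuli)  # the order this participant saw
        self.responses: Dict[str, dict] = {}

    def submit(self, stimulus: str, rating: dict) -> None:
        if stimulus not in self.stimuli:
            raise KeyError(stimulus)
        if stimulus in self.responses:
            # Responses are final once submitted: no going back to change them.
            raise ValueError(f"{stimulus} was already rated")
        self.responses[stimulus] = dict(rating)

    def progress(self) -> float:
        """Fraction of stimuli rated so far, e.g. to drive a progress bar."""
        return len(self.responses) / len(self.stimuli) if self.stimuli else 1.0

    def to_json(self) -> str:
        """Serialize everything into one payload that could be sent to a server."""
        return json.dumps({"participant": self.participant_id,
                           "order": self.stimuli,
                           "responses": self.responses}, sort_keys=True)


session = RatingSession("p001", ["smile_b.mp4", "reference_smile.png"])
session.submit("smile_b.mp4", {"natural": 4, "symmetric": True})
print(session.progress())  # 0.5
```

Rejecting a second submission in `submit`, rather than only hiding the Back button, keeps the "no second-guessing" rule enforced even if a screen is reached by some other path.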

Day 44: Right away, I noticed the app was skipping the screen with the directions and showing another screen in its place. I fixed this and worked on making the app look more presentable. Before heading to lunch, I completed the online training required for volunteering at the State Fair.

Day 45: In the morning, I was able to fix an issue I was having with some help from Nick. Once I was done with that, I decided to start working on my final report for the DREU.

WEEK 10

Day 46: I started off the day by working on my final report outline again. After looking up a format for the paper, I was able to see which sections I needed to include in my final report. I spent most of the day researching previous work and filling out my outline.

Day 47: On Tuesday, I worked all day rewriting my final paper outline. At the end of the day on Monday, Stephen had replied to the first outline I sent him. He had suggested many things for me to consider, so I rewrote the majority of my final paper.

Day 48: I worked in the morning on the beginning of my paper to make sure everything flowed together nicely. Before lunch, I went to the last REU research talk given by Chad Myers. When I got back from lunch, I continued working on writing my final report.

Day 49: On Thursday, I made progress on my report and occasionally asked Nick and Stephen for advice. The paper was mostly complete except for some slight alterations that I planned to make after consulting with them. To learn more about basic facial animation, I read a few online articles and books, which really helped me set up the background and previous work sections of my paper.

Day 50: On my last day, I worked on putting images into my report and finished editing. For lunch, I went out with members of the graphics department to celebrate the completion of the program. In the afternoon, we had a boat cruise on the river and dinner with other REU students at the University.

Day 26: I worked on making a new project in Unity for the face to be put into app form. The first thing I tested was getting videos to play in Unity. I wanted to have this so I could include the recorded animation of the face in the project that could be played over and over again. After this, I wanted to get the GUI working. On the GUI, I wanted to display the directions, a reset button, the rating system, and a submit button.

Day 27: I spent most of my day making sample layouts for the user study app. I talked with Nick and Stephen about formats that they thought best suited the purpose of the project. I also experimented with various rating systems by using different GUI objects in Unity. Some of the objects I used included sliders, toggles (check boxes), text boxes, and buttons. Once I had a view designs ready, I shared them with Stephen and Nick for their opinions.

Day 28: On Wednesday, I worked on the buttons of the app. I got all of the buttons to go to the appropriate places for the user to properly navigate the app. I was going to test out the app that I had so far on the iPad but I the person that helped me run the app last time was not in the lab so I decided to wait. Before lunch, we had a talk from one of the professors working with REU student. Once we were done there, we had lunch again the with other computer science interns again. In the afternoon, I worked more on the format of the app and talked to Stephen and Nick about their thoughts.

Day 29: When I got to the lab in the morning, I started working on some of the things Stephen, Nick, and I had talked about on Wednesday. One thing we wanted to incorporate into the app was a questionnaire that asked the user about things such as their age, gender, zip code, and how much alcohol they had recently consumed. Stephen thought it would be a good idea to include this last questions since he thought a person's blood alcohol level could influence their responses. For lunch, the graphics department went to another restaurant near campus to discuss progress.

Day 30: I mostly worked on getting my app to run on the iPad on Friday. To get the Unity project from my PC to the iPad, I had to first transfer the project to a Mac device then run the app on the iPad. Since we were going to have a meeting on Monday with out collaborators, I wanted to have the app working on the iPad so I had something to show them.

WEEK 7

Day 31: To prep for the meeting with the facial reconstructive surgeon, I worked more on getting everything to work on the app. What I mostly worked on was getting the app to iterate through the video and rating slides multiple times depending on the number of videos I wanted to show each user. Fortunately, I was able to get this process to work on the PC I was working on but since I didn't have a Mac to build the project on for the iPad, I wasn't able to build the project and test it. An issue I had while working on the PC was that it was unable to play my video files since they were made for mobile devices only.

Day 32: I worked mostly on all of the tasks we had talked about at the meeting on Monday. The first thing I did was change the orientation of the app to horizontal to fit more information on the screen. Since we decided we wanted the face video to play on the left side of the screen and have the rating system on the right, I needed to figure out a way to play videos on the iPad that would not go full screen like I had them before.

Day 33: What I ended up doing was installing a 3rd party plugin that allowed me to get around Unity's limitations. It took me a while to get their code to do what I wanted. Nick also had brought in his personal iPad 2 for me to work on. Unfortunately, the screen size for the iPad 2 was smaller than the iPad 3 so I needed to change my app to fit to the new screen size. With no Mac computer to get the app on the iPads, I had to wait for someone in the other lab to allow me to work on his computer. Before lunch, we listened to Dan Keefe present his research and talk about the Interactive Visualization Lab (the lab I go to work in occasionally). Then came lunch and some discussion about current research struggles. When I went back to the lab, I incorporated the plugin I downloaded into my app so I could play videos. Before I was able to test this on the iPad, I went to a conference paper discussion group lead by a professor on campus to talk about graphics papers that were to be presented at SIGGRAPH 2015.

Day 34: On Thursday, I worked more on getting one of the face videos to play in the app by using the new plugin. For lunch, I went with the other members of the graphics department to a restaurant near campus to talk about projects and research. After lunch, I met with Stephen to ask him about borrowing another lab's Mac Mini to be able to build apps on our own lab.

Day 35: On Friday, I spent most of the day in the other lab testing and building my app. After many attempts, I was able to get the unity scenes to properly play on the iPad. I also was able to get all of the scenes to display horizontally.

WEEK 8

Day 36: In the morning, I went over to Dan Keefe's lab to borrow their Mac Mini for my remaining 3 weeks at the University. All I had to do was connect the Mac Mini to one of the new monitors that we had just gotten. With the Mac Mini and Nick's iPad2, I didn't have to go to the other lab to borrow their equipment anymore. While I waited for Nick to bring in the iPad, I looked for a SIGGRAPH paper that would be interesting enough to talk about for Wednesday's SIGGRAPH meeting. I ended up finding an interesting paper on Facial Reconstruction (similar to my research) that I chose. The rest of the day, I worked on getting my app to retain it's information after changing scenes (normally deletes this).

Day 37: For the majority of the day, I worked on getting xcode to build my Unity app on Nick's iPad. It took a while to get the app into development mode and updating everything. Once I had the app building to the iPad, I was able to see what things I needed to fix. One thing I changed was the position and size of the screens that played the movies. Before, the videos were in the wrong spot with strange shadows. After some fixing, I got the video to look much nicer. For the next day, I knew I needed to get the app to not just iterate through all of the videos files I had in my folder but also play the videos (only played one video). Another thing I had to do was get the video to replay when the user pressed the View Again button.

Day 38: Before lunch, I mostly read the SIGGRAPH paper for the week and took notes on it since I had to talk about it at the meeting. After lunch, I worked on allowing the app to run in horizontal and vertical orientation on the iPad depending on how the user held the device. In the afternoon, I went to the SIGGRAPH meeting and talked about an interactive virtual surgery simulation that I read about.

Day 39: In the morning, I had a meeting with Stephen, Nick, the plastic surgeon (Sofia), a physiology professor, and some of the people that would be volunteering at the State Fair to run the user study. Just before the meeting, I was able to get the app to replay videos when the user pressed the View Again button and I also got the app to go through all of the videos I had provided it instead of just playing the same video over and over again. At the meeting, we mostly talked about minor changes that needed to be made to the app and signing up for time slots to volunteer at the State Fair. Once that was over, I worked on implementing some of the changes we had talked about at the meeting. Some of the changes included playing the videos in random order for each user. Another change I wanted to make was to have the app reset the toggle boxes and slider ratings every time a new video was played.

Day 40: On Friday, I worked on getting the program to read in still image files to display just like the videos. To do this, I had to edit my code so it knew if the files were video or image files. A tricky part of this process was accessing the files while on the iPad since the file system is different.

WEEK 9

Day 41: Once I figured out the iPad's file system, I was able to get images and videos play in the app for users to rate. This is what the other members of my team wanted to include so users could use well-studied images of facial expressions as references when rating the animated videos.

Day 42: I got a lot of progress done on the app on Tuesday. First of all, I got the navigation to work properly through out the app. I also got the stimuli (videos and still images of faces) to appear in random order for users to view.

Day 43: On Wednesday, I got many things to work in the app. I was able to fix many of the bugs I was having such as information clearing when switching screens, the app going in the wrong order, and videos not loading. I was also able to figure out a way to organize all of the input the user gives so I could send it to a server for storage. Another thing I added was a progress bar at the bottom of the screen to show the user how much they had left. Another change I made (at the request of some of the other members of the team) was to not allow the user to go back and change their responses after they had submitted them. This would prevent the user from going back and second guessing themselves.

Day 44: Right away, I noticed the app was skipping a the screen with the directions on it and replacing it with another screen. I was able to fix this and work on making the app look more presentable. Before heading to lunch, I completed online training required for volunteering at the State Fair.

Day 45: In the morning, with some help from Nick, I fixed an issue I had been having. Once that was done, I started working on my final report for the DREU.

WEEK 10

Day 46: I started the day by working on my final report outline again. After looking up a format for the paper, I was able to see which sections I needed to include. I spent most of the day researching previous work and filling out my outline.

Day 47: At the end of the day on Monday, Stephen had replied to the first outline I sent him with many suggestions to consider. Because of this, I spent all of Tuesday reworking my outline and rewrote the majority of my final paper.

Day 48: In the morning, I worked on the beginning of my paper to make sure everything flowed together nicely. Before lunch, I went to the last REU research talk, given by Chad Myers. When I got back from lunch, I continued writing my final report.

Day 49: On Thursday, I made progress on my report and occasionally asked Nick and Stephen for advice. The paper was mostly complete except for some slight alterations I planned to make after consulting with them. To learn more about basic facial animation, I read a few online articles and books, which really helped me set up the background and previous work sections of my paper.

Day 50: On my last day, I added images to my report and finished editing. For lunch, I went out with members of the graphics department to celebrate the completion of the program. In the afternoon, we had a boat cruise on the river and dinner with other REU students at the University.

Programs: MATLAB, C++, C, Unity, C#, Java, Python

My Role in the Project and Our Goals

For this project, I am working with a computer science professor, a psychology professor, and a grad student to help a facial reconstruction surgeon determine an appropriate procedure by displaying facial expressions on computer-animated models. Part of my job is to display the animated face on a 3D TV that can track a user's movements and allow for 3D viewing. Another part of my job is to collect data from a user study to be performed at the Minnesota State Fair this year. With the user study, I hope to learn what makes a smile look more natural to a viewer. To do this, an iPad app will present animated faces for users to rate.

As I stated earlier, one of the goals of this project was to display any Unity scene on a 3D device. Once the object was in the required format, we wanted a face-detecting camera to track the user's face as they physically moved around, letting them view the 3D image from different angles.

My job was to make a Unity prefab that took a game object as input and produced a texture (layout) that could be viewed on a 3D TV.

As you can see in the image on the right, there are two very similar images of the same face side by side. The image on the left is the face mesh I got from the grad student, Nick, viewed from a camera placed slightly to the left. The image on the right is the same face viewed from a camera placed slightly to the right. When I sent this format to the 3D TV in 3D mode, the face appeared three-dimensional to a user wearing 3D glasses. Since the signal sent to the TV is a live video, we could alter the faces in real time and the movements would appear on the TV.
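The side-by-side layout can be illustrated independently of Unity. The following Python sketch is a minimal example and not the prefab itself. It assumes the stereo pair comes from two cameras offset horizontally around one center position, each drawn into half of the output frame. The names `Vec3` and `side_by_side_cameras` and the eye-separation value are hypothetical. In Unity, the comparable per-camera setting is the normalized viewport rectangle `Camera.rect`.

```python
from dataclasses import dataclass

@dataclass
class Vec3:
    x: float
    y: float
    z: float

    def offset(self, direction, distance):
        """Return this point moved `distance` units along `direction`."""
        return Vec3(self.x + direction.x * distance,
                    self.y + direction.y * distance,
                    self.z + direction.z * distance)

# Normalized (x, y, width, height) viewports: each eye fills half the frame.
LEFT_VIEWPORT = (0.0, 0.0, 0.5, 1.0)
RIGHT_VIEWPORT = (0.5, 0.0, 0.5, 1.0)

def side_by_side_cameras(center, right, eye_separation):
    """Place two eye cameras around a single (mono) camera position.

    center: where one ordinary camera would sit.
    right: unit vector pointing to that camera's right.
    eye_separation: distance between the two virtual eyes (hypothetical).
    Returns ((left_pos, left_viewport), (right_pos, right_viewport)).
    """
    half = eye_separation / 2.0
    left_eye = center.offset(right, -half)  # shifted slightly to the left
    right_eye = center.offset(right, half)  # shifted slightly to the right
    return (left_eye, LEFT_VIEWPORT), (right_eye, RIGHT_VIEWPORT)

left, right = side_by_side_cameras(Vec3(0.0, 1.0, -5.0), Vec3(1.0, 0.0, 0.0), 0.064)
print(left)
print(right)
```

Rendering the left-eye view into the left half and the right-eye view into the right half matches the side-by-side input that the TV's 3D mode splits back into one image per eye.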

Another of our goals is to collect data from real users on how effective the animated facial expressions are. Because the facial reconstruction surgeon has no way to know how a procedure will turn out before she performs it, she came to us for help with computer-simulated graphics. While creating the simulator, we realized there was no collective source of data describing what natural-looking facial expressions look like. To address this, we decided we needed a user study that asked viewers a series of questions about how well a simulated expression conveys emotion. For our user study, we decided to bring our program to the Minnesota State Fair in the form of an iPad app. The app first tells users about the project and its purpose and gives them the directions. After watching each animated face, users are taken to a rating screen where they give their input. Once they have gone through all of the videos, they are thanked for their participation, and the data is sent to us for analysis.

Thank you for viewing my website!