The back-faced human shell required some tweaking because the more intricate parts, like the fingers, didn't react well to the back-facing: bits and pieces of hand and foot would miraculously materialise from time to time. I solved the problem by replacing the hands and feet with ellipsoids, which were much better behaved under back-facing.

More progress on the animations for the scaling laws experiments: the general consensus seems to be that the incorrectly scaled blobs don't look incorrect, they just look very tired or very energetic. We tried further scaling with a different set of motions, using a fixed camera and having the different-sized blobs all travel along the same line of motion but at varying speeds.

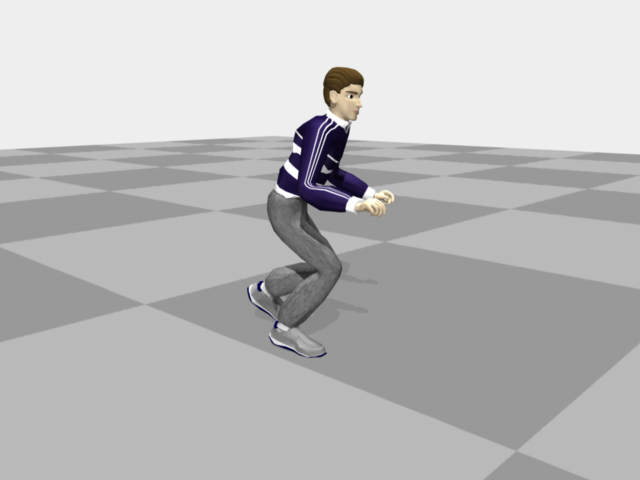

We have so far been shying away from human motion because of all the preconceptions that come with it, but Jessica decided to make a set of human motions and see how they look, even if we don't end up running them in an experiment. Elyse, a DMP student in the MoCap Lab, is working with some emotional walks (happy, sad, afraid and confident). We are to get ahold of one of the more exaggerated ones and render it with a fixed camera, a checkerboard ground plane, and one of the generic models. Jessica said that "confident" would be a good choice, but that motion had not been cleaned up yet, so we did "afraid" (shown below). On Monday we'll go down to the MoCap Lab and clean up the "confident" guy so we can use him.