Rosangel Garcia

Le Moyne College

Information Systems and Software App Development

Undergraduate Class of 2019

Email: Garciro@lemoyne.edu

CRA-W Distributed Research Experience for Undergraduates

University of Virginia, Charlottesville VA

Mentorship Professor: Vicente Ordonez Roman

Website: Vicente's Website

Area of Research: Computer Vision

REU Final Paper: REU Paper 2018

Research Project Description

My project for this summer involves quickly grabbing unstructured data from a dataset of movies and structuring the data. The hope is to find a pattern between a genre of movies.

1. Obtain data of movies and their main ideas into text to get unstructured data. 2. Sort out useful data that is needed from the unstructured data. 3. Analyze unstructured data and structure it to find patterns between genres of movies in the dataset.

Week 1 Summary

I started my REU program here at the University of Virginia in the Department of Computer Science. During this week I was reading and looking at different possibilities of projects that I can involve myself with. A project that was interesting was to deal with unstructured data and structuring it quickly. Therefore, I did more research on what I can focus on because the topic was really broad.

Week 2 Summary

My second week in the University of Virginia involved more research to find out what my project will focus more on. My advisor and I decided to get unstructured data from a dataset of movies. Each movie has a text file that was created to describe what the movie is about. The data is still unstructured so that needs to be done.

Week 3 Summary

During this week I have been exploring with the library nltk from python. I have been doing tutorials on what different things I can do with this library and apply it to text files.

Week 4 Summary

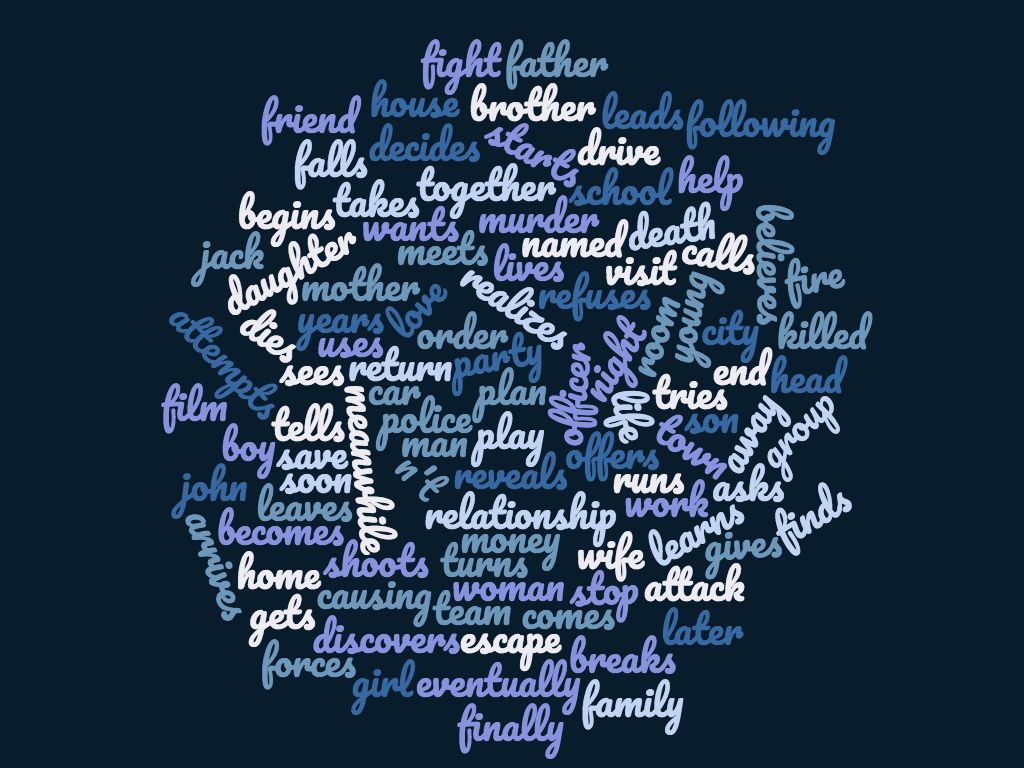

Currently still seeing how we will decide to structure the data. We created a word bank to see what are the most common words in all files and the frequency in which they repeat. However, now the next steps are to get more specific and see what are the most common words in each genre and see if there is a pattern.

Week 5 Summary

This week was able to create a word cloud that included all of the most common words mentioned in the data set total. I have generated an image of the word cloudand how it looks like.

Week 6 Summary

This week I managed to download posters for the first 100 movies in the dataset because it is planned to try and create a website where we can upload the images of the movies and somehow show the common words of each movie individually to try and see some pattern.

Week 7 Summary

This week I am currently trying to create a different set of word clouds where I have a bank for each genre and each movie that has that genre will be included to the word cloud for that genre. Therefore this will create word clouds for each genre.

Week 8 Summary

This week I was able to create all the word clouds for all the movies that I needed by using the library WordCloud. I also was able to create an html that will show the first 100 movies of my data set with the top two characters and their word cloud for that movie. I was also able to successfully use the Name Entity Extraction Library for to be able to extract the top two characters that were mentioned in each plot file.

Week 9 Summary

This week I began exploring sentiment analysis because my mentor and I figured we needed to add more in this project. We used sentiment to analyze if the top two characters of each movie were good or bad characters in each movie. Sentiment analysis would categorize by determining a score of positive or negative. Since we did not have time to train any data we went ahead and used the vader function to automatically do some sentiment analysis to these movie characters based on how they were described or what they were doing in the movie from the written text plot file. After adding sentiment analysis we also made sure to add this information in the html.

Week 10 Summary

This week involved mostly wrapping up all the data and making sure everything was well formatted and that results were being produced correctly without any error. We had some errors come up sometimes which was why some information was delayed in order to continue. After I proceeded into gathering all data making sure I wrote my paper for the REU program.