Week 1 (June 15 - 19):

Arriving at Midway International Airport in Chicago, IL with my DREU internship partner was a perfect way to begin the summer internship. Weeks before flying out of New Jersey, we had decided to travel to Chicago together and rent an apartment within close walking distance of the University of Chicago. We used the first couple of days to settle in and get the hang of things, and on the third day we were scheduled to meet our mentor, Shan Lu. My excitement about meeting Shan for the first time grew as we walked closer to the Hogwarts-looking campus of the University of Chicago. As we opened the heavy doors of the Ryerson Physical Laboratory, we saw a group of people standing in the center of the entrance: Shan, her two PhD students, and the CS department administrator, who all received us with welcoming smiles. Right away we were given a little tour of the building, and Johanna and I were each handed a fancy key to our shared office. To make it even more official, the tech staff set up our CS accounts right after the tour. Once that was handled, we all went out to grab some lunch and get to know a bit about each other at Medici on 57th. After lunch, Shan had a meeting to attend, so Johanna and I decided to go and set up our UChicago IDs at the library. The ID was mainly to give us door access to the lab if we ever had to stay in our office after hours.

The next meeting was scheduled for the day after our first, and this time it was going to be more technical. Shan described the main research goals and gave us a paper (written by her and four others), similar to our project, to read after we left. In the meeting Shan asked Johanna and me whether we had ever written any concurrent software before, and we had not. She therefore talked to us a bit about the fundamentals of concurrency and multi-threaded software. Her suggestion was to read, and practice some code from, an online textbook that focused on concurrency. She knew this would help us become familiar with how concurrent programs are written. It took me a week longer than my partner to grasp the basic concepts, but spending every daylight hour reading through the textbook gave me a better understanding of what I might encounter in the project. One example was learning the effects of different critical section changes that I might come across once I had parsed and analyzed the source code versions of my assigned software project.

The general objective of this summer research project was to study the change history of an open-source, multi-threaded software project: the Apache HTTP Server for Johanna and Mozilla for me. By writing scripts and manually inspecting chunks of the source code, we would see what types of changes have been made to condition variable operations (specifically the signal and wait routines). For Mozilla, I was able to access its source-code management tool, Mercurial, to get hold of all the changes that have been made in its repositories. As I go further into this study, I will hopefully find the common condition variable operation routines used in the source code and determine how changing them can cause trouble for developers building multi-threaded software. The outcome of this research will help future researchers build tools and algorithms for writing better concurrent software, making it easier for real-life developers to handle synchronization problems.

Week 2 (June 22 - 26):

Throughout this week, I continued learning more about concurrent programming and concurrency bugs. Johanna and I also met with Shan and went over a couple of things. First, she cleared up the topics that had taken Johanna and me longer to understand during our background research on concurrency. When Johanna shared that she had done some extra research on her assigned software project by looking into its libraries' APIs, Shan knew just what to suggest next. An undergraduate student from the University of Chicago, Sofia, had been working on research very similar to ours but with a different software project (MySQL), so Shan helped us contact her. It would be important to meet with Sofia so that she could give us tips and ideas before we wrote scripts and did further research. Shan also pointed out that it took Sofia some time to get her project started correctly because she had forgotten to include wrapper functions for synchronization operations in her script. Once we reached out to Sofia, we decided to gather in our office one afternoon that same week. It was a super useful meeting because she shared the challenges she had already gone through and how we could avoid running into them. One of those challenges was figuring out that the developers who wrote MySQL (in her case) did not always use the POSIX library when implementing signal and wait routines in wrapper functions. By the end of the week, I had used a code browsing tool for Mozilla to begin exploring the APIs of some of Mozilla's concurrency-related modules. Based on my research up to this point, I realized that my case study was slightly different from Sofia's and Johanna's projects because the developers of Mozilla directly used the POSIX library when implementing wait/signal procedures in wrapper functions.

Week 3 (June 29 - July 3):

During this week, I felt more comfortable applying what I had learned from my reading to the actual project. Those several chapters helped me build an idea of the distinct critical section changes I would encounter later on when parsing and analyzing Mozilla's source code revisions. Before moving on to writing my script, I explained to Shan that I had come across a script from an older research project, written by Rui (a PhD student from Columbia University), which she had attached in an email and which could relate to this one. The one thing that held me back a little was that it was written in Python, which I was not familiar with. Nonetheless, I told her that I was up for the challenge of learning the basics of Python so I could learn from Rui's code and get ideas for writing my own script. My mentor supported me by giving me this week to learn Python, as long as I was actually going to apply it. I learned from one website that explained Python in a nutshell and another that took me step by step through coding examples. Haopeng (one of the PhD students on Shan's research team) and I also met that week and decoded Rui's script. As a whole, it parsed the diff files generated from a repository (Mercurial in my case) and created four lists from each diff: old version code, new version code, lines that were added, and lines that were removed.
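
To make the four-list idea concrete, here is a minimal sketch of how a unified diff could be split that way. It is not Rui's actual script; the function name `split_diff` is hypothetical, and it assumes the diff is in the unified format with a `diff ...` line before each file, as Mercurial prints it.

```python
def split_diff(diff_text):
    """Split a unified diff into (old_code, new_code, added, removed) lists."""
    old_code, new_code, added, removed = [], [], [], []
    in_hunk = False
    for line in diff_text.splitlines():
        if line.startswith("diff "):
            # A new file section begins; its header lines are skipped below.
            in_hunk = False
            continue
        if line.startswith("@@"):
            # Hunk marker: the lines that follow carry a +, -, or space prefix.
            in_hunk = True
            continue
        if not in_hunk or line.startswith("\\"):
            # File headers, or the "\ No newline at end of file" note.
            continue
        marker, text = line[:1], line[1:]
        if marker == "+":
            added.append(text)
            new_code.append(text)
        elif marker == "-":
            removed.append(text)
            old_code.append(text)
        else:
            # Context line: present in both the old and the new version.
            old_code.append(text)
            new_code.append(text)
    return old_code, new_code, added, removed
```

The old and new lists only cover the lines shown in the diff (changed lines plus surrounding context), not the whole file.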

Week 4 (July 6 - 10):

This week I began writing my script, now that I had a couple of strategies in mind for tracking condition variable operation changes in Mozilla's repository and for parsing the differences between versions. One of my objectives was to write a script that created an output file with all the differences between revisions. While I was doing this, my mentor suggested finding how many wait/signal operations there were in the first 60,000 revisions. We both knew that it would take a long time to run and test all of Mozilla's versions since it is highly multi-threaded. Thus, she just wanted me to test a small number first and then share my results with her. I had completed the part of the script that collected all the distinct diffs between versions, but could not share any results with my mentor yet because the script was still running. As I waited, I brainstormed how to collect what Shan wanted. My approach was to manually go through the versions that contained signal and wait and count only the condition variable operations that appeared as pthread_cond_wait and pthread_cond_signal. Command line tools such as grep can make this easier for me to complete before meeting with my mentor again.
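
The counting step can also be sketched in Python as a rough stand-in for the grep-based approach. This is only an illustration under assumptions: the name `count_cond_changes` is hypothetical, and only added or removed lines of a unified diff are counted, so unchanged context lines are ignored.

```python
import re
from collections import Counter

# Matches direct calls only; a prefixed wrapper such as my_pthread_cond_wait(
# is not matched because \b needs a word boundary before "pthread".
COND_CALL = re.compile(r"\b(pthread_cond_wait|pthread_cond_signal)\s*\(")

def count_cond_changes(diff_text):
    """Count wait/signal calls on the added and removed lines of a diff."""
    counts = Counter()
    for line in diff_text.splitlines():
        if line.startswith(("+++", "---")):
            # File header lines (simplification: a removed line whose own
            # text starts with "--" would be skipped as well).
            continue
        if line.startswith(("+", "-")):
            for name in COND_CALL.findall(line):
                counts[name] += 1
    return counts
```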

Aside from the research, I'd like to mention how wonderful it was to spend the Fourth of July in Chicago. I spent all day with my two lovely roommates, Johanna and Regan, walking downtown and watching the fireworks at Navy Pier. I will never forget how we would always end up accidentally matching or color coordinating our outfits. Next time I'm in Chicago, kayaking under the moon and stars with a view of the fireworks is a must!

Week 5 (July 13 - 17):

This week was the halfway point of the internship, and I needed to plan out what I had left to do and what I could do in the time remaining. In the meeting with Shan, I informed her that there were only 17 wait and signal operation routines implemented throughout the first 60,000 revisions. Additionally, I realized that I had forgotten to include the commit log information in each revision's output. I told her I would add that to my script before Thursday, when we were scheduled to meet again. Luckily, this was simple to add because I combined some of Rui's code with my own. Obtaining the commit log for each revision matters because it makes the manual evaluation part of the project, such as gathering the development history of condition variables throughout the Mozilla Browser Suite, slightly easier to complete. In the second meeting, I showed Shan how my script searched for the log info of each version, and she approved. There was one thing she wanted me to fix: storing each revision in its own file instead of collecting all of them into one big file. This would also benefit the manual analysis part of the project. Shan said that she had a new task for me but would discuss it with me next week, letting me rework my output first.

Enhancing my output was a little frustrating because it involved a lot of trial and error, until I finally created an algorithm that did exactly what I was looking for and what Shan had asked for. This algorithm iterated through the designated revisions and, for every pair of revisions, opened a new file containing the diff between them, automatically saving each new file and naming it after the version's number. I left my script running through the first 10,000 revisions to test whether it was working well.
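
A minimal sketch of the one-file-per-revision idea, not my original script. The function names, the `.diff` extension, and the pluggable `fetch` argument are assumptions for illustration. The default fetcher calls `hg diff -r OLD -r NEW` and expects to be run inside a Mercurial checkout.

```python
import subprocess
from pathlib import Path

def hg_diff_between(old_rev, new_rev, repo="."):
    """Return the diff between two revisions using `hg diff -r OLD -r NEW`."""
    result = subprocess.run(
        ["hg", "diff", "-r", str(old_rev), "-r", str(new_rev)],
        cwd=repo, capture_output=True, text=True, errors="replace", check=True,
    )
    return result.stdout

def dump_revisions(revs, out_dir, fetch=hg_diff_between):
    """Write the diff of each revision against its predecessor to <rev>.diff."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for rev in revs:
        path = out / f"{rev}.diff"  # the file is named after the revision number
        path.write_text(fetch(rev - 1, rev))
        written.append(path)
    return written
```

For example, `dump_revisions(range(1, 10001), "diffs")` would cover the first 10,000 revisions, assuming local revision numbers in the checkout. Passing a different `fetch` function makes the loop easy to try out without a repository.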

Week 6 (July 20 - 24):

This week Shan and I met once again, and she had prepared a new aim for me in place of the prior goal. From now until the end, I was responsible for gathering statistics to answer the following questions: Which revisions contain wait/signal changes? How many revisions contain wait/signal changes? How many and which revisions share the same parameter? Slowly but surely, this data collection will help show how the software project I am working with has matured over time. Additionally, future researchers working on a similar case study can take what I have collected and use it to design algorithms and tools that help developers handle concurrent programming issues in any multi-threaded software. Now that Shan and I knew my script was running well, we decided to run it over all versions in Mozilla's repository so that I could begin my manual evaluation task. My method was to run the revisions in sets of 64,400 instead of all at once. For instance, I ran the first set of versions (from 1 - 64,317), which took quite a few days. Since I knew that Mozilla developers used "pthread_cond_wait()" and "pthread_cond_signal()" as their synchronization procedures, I decided to use "grep." This command line tool pointed me to where in the source code these routines were being used. Because each file was automatically named after its revision number, the revisions that contained these condition-variable operations could be found and saved. The versions that were not needed were automatically deleted so that more space was available for the next set of revisions, which kept the computer from slowing down or crashing. Linux and Python were two of the things I most appreciated working with because both were convenient for using Mercurial commands. To sum up, all of week six I ran my script and collected all the necessary data so that I could manually inspect it and form particular statistics.
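
The keep-or-delete step described above could look roughly like this in Python. It is a sketch under assumptions rather than the exact script: the name `prune_diffs` and the `.diff` extension are hypothetical, and a plain substring test stands in for grep.

```python
from pathlib import Path

KEYWORDS = ("pthread_cond_wait(", "pthread_cond_signal(")

def prune_diffs(diff_dir):
    """Keep per-revision files that mention wait/signal and delete the rest.

    Returns the revision names (file stems) of the files that were kept.
    """
    kept = []
    for path in sorted(Path(diff_dir).glob("*.diff")):
        text = path.read_text(errors="replace")
        if any(keyword in text for keyword in KEYWORDS):
            kept.append(path.stem)
        else:
            path.unlink()  # free disk space before the next set of revisions
    return kept
```

Because the files are sorted by name, the returned list is in text order rather than numeric order (for example "10" comes before "2").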

Week 7 (July 27 - 31):

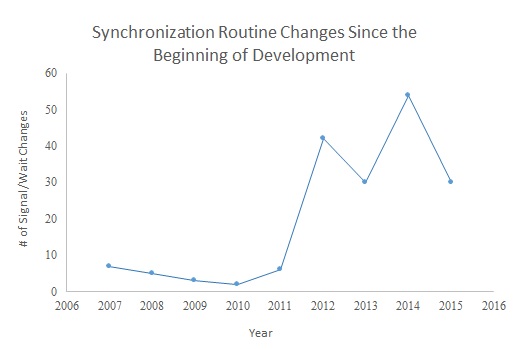

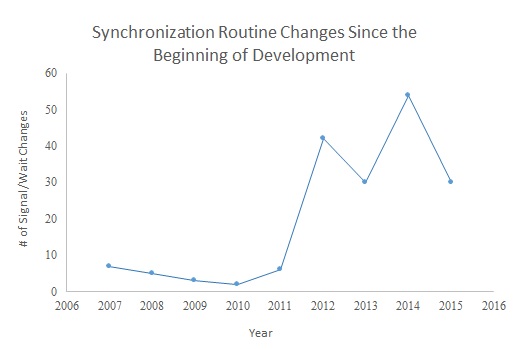

At the beginning of the week, my script was still running but close to its end point. In the meantime, I started going through the first set of files created from the versions that my script had singled out. I mainly looked at which and how many revisions contained signal/wait changes rather than collecting how many and which changes were within each revision. If I had looked at every synchronization routine modification since the beginning of this software project's development, my manual analysis would have taken much longer than a week, because categorizing these changes and determining the reasons behind them, such as race conditions, producer/consumer problems, or other concurrency bugs, would be a relatively broad task. Therefore, the purpose was to review each set of revisions and check whether signal and wait operations were actually applied in code rather than appearing only in a comment, for example. If that was the case, I recorded the year and revision number in a Word document that I saved as Final Results. Once my data had been gathered for all sets, I created a graph presenting synchronization routine changes from the beginning of Mozilla's development until the summer of 2015 (shown in the accompanying graph). It is safe to say that the number of changes increased over time.

Week 8 (August 3 - 7):

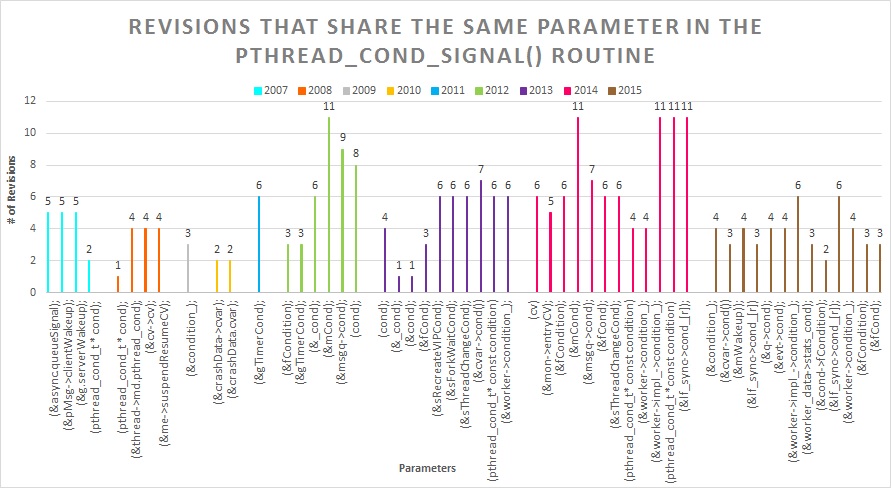

Week eight's mission was to manually find how many revisions within each year shared the same parameter, specifically for the pthread_cond_signal() routine. Working on the first set of versions, I used the command line tool "grep -n" with the keyword "pthread_cond_signal(", which directed me to the lines in each version's file where I could confirm the condition variable's parameter. All sets of versions were processed the same way. Manually correlating which revisions contained the same parameter got a bit complicated and is where most of the time was spent. The three important things I noted down in a Word document were the year, the parameter, and the number of revisions sharing the same parameter. All of my statistics were then organized in Excel as well. The accompanying graph displays my results, and it is evident that some parameters are used heavily in certain periods of time while others are used less in different periods.
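
I did this correlation by hand, but the grouping itself can be sketched in a few lines of Python. This is an illustration only: the name `group_by_parameter` and the input shape (a dict from revision number to diff text) are assumptions, and the regular expression only handles simple arguments without nested parentheses.

```python
import re
from collections import defaultdict

# Captures the argument of pthread_cond_signal(...), trimmed of spaces.
SIGNAL_ARG = re.compile(r"pthread_cond_signal\s*\(\s*([^()]*?)\s*\)")

def group_by_parameter(diffs):
    """Map each signal parameter to the set of revisions that mention it.

    `diffs` maps a revision number to that revision's diff text.
    """
    groups = defaultdict(set)
    for rev, text in diffs.items():
        for arg in SIGNAL_ARG.findall(text):
            groups[arg].add(rev)
    return dict(groups)
```

The number of revisions sharing a parameter is then `len(groups[parameter])`. Swapping the routine name in the pattern gives the same grouping for another routine, though a call with two arguments would be captured as one comma-separated string.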

Week 9 (August 10 - 14):

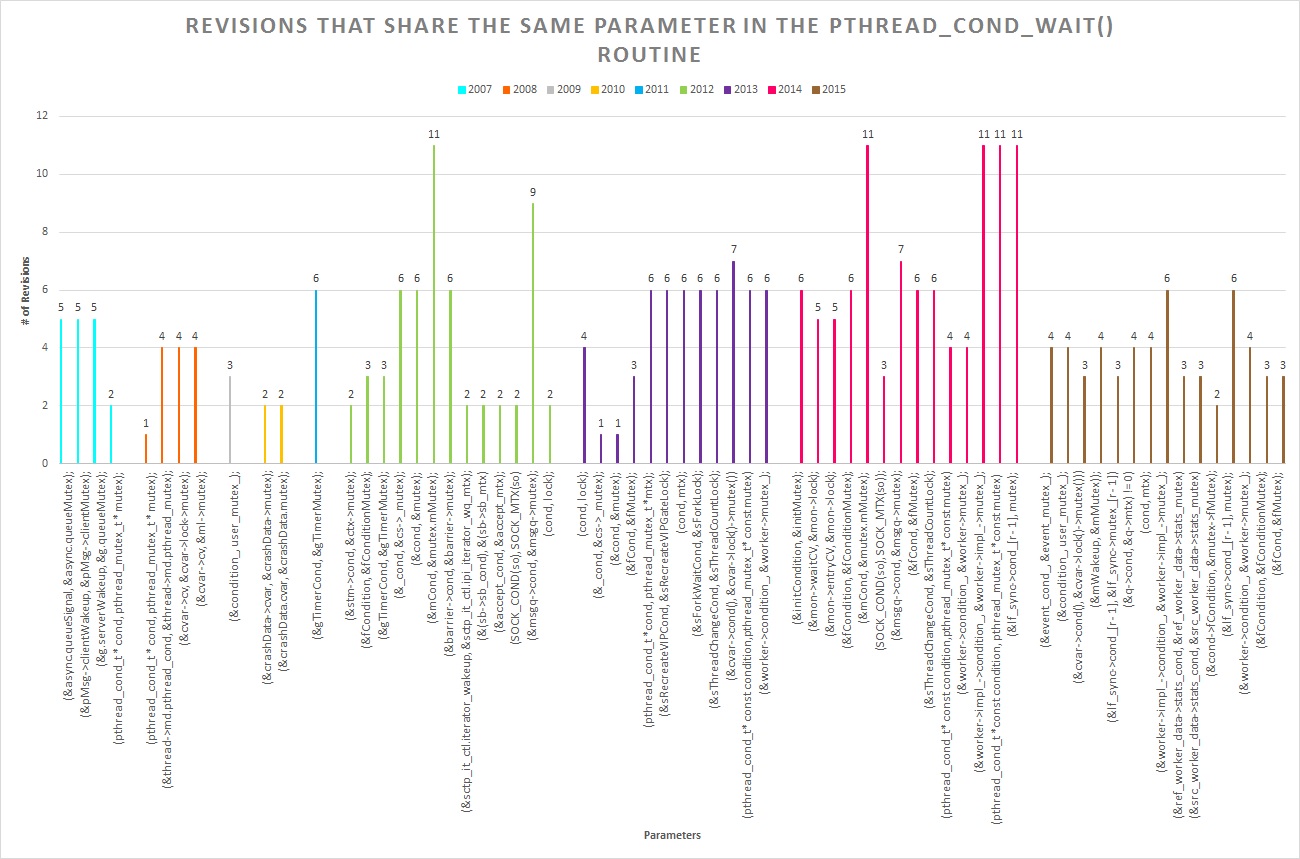

Week nine!!!! I did exactly the same things this week as last week, this time with a different keyword: "pthread_cond_wait(". The final results are presented in the accompanying graph!

Week 10 (August 17 - 21):

This week I concentrated on organizing my collection of notes, code, and anything else I might need later on. Since I do not attend the University of Chicago, Shan and the tech staff asked me to save all valuable research information on a flash drive for future use, or else it would be permanently deleted. I also typed up most of the statistics I had written in a notebook into a Word document so my notes would be more organized. I said my goodbyes to everyone a bit early since my fall semester at the Florida Institute of Technology had already begun. Usually the first week of classes at most institutions does not start lecturing on the class's subject, but here they jumped straight into the first lesson. Nonetheless, I dedicated many hours of this week to working on my final report as well as my website. Creating this website from scratch was extra fun because it is something I had never tried before. All in all, this summer internship was a blast, and I am glad to have learned so much throughout these ten weeks in Chicago.

Copyright 2015. Michelle Tocora. All rights reserved.