Week 9

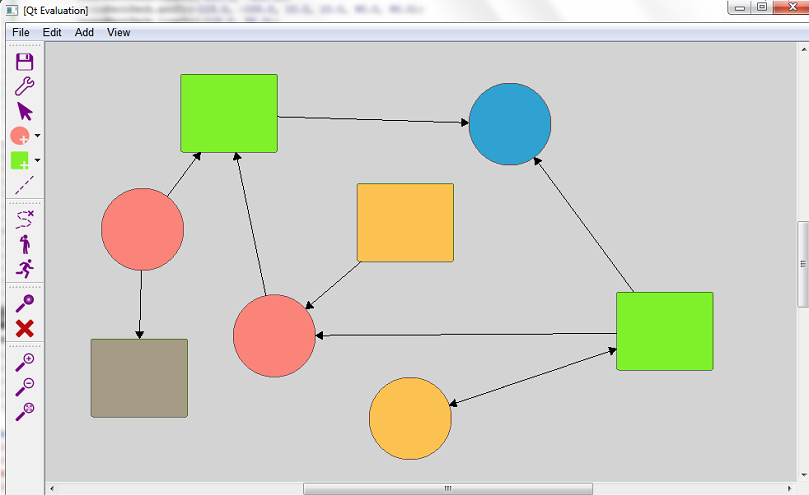

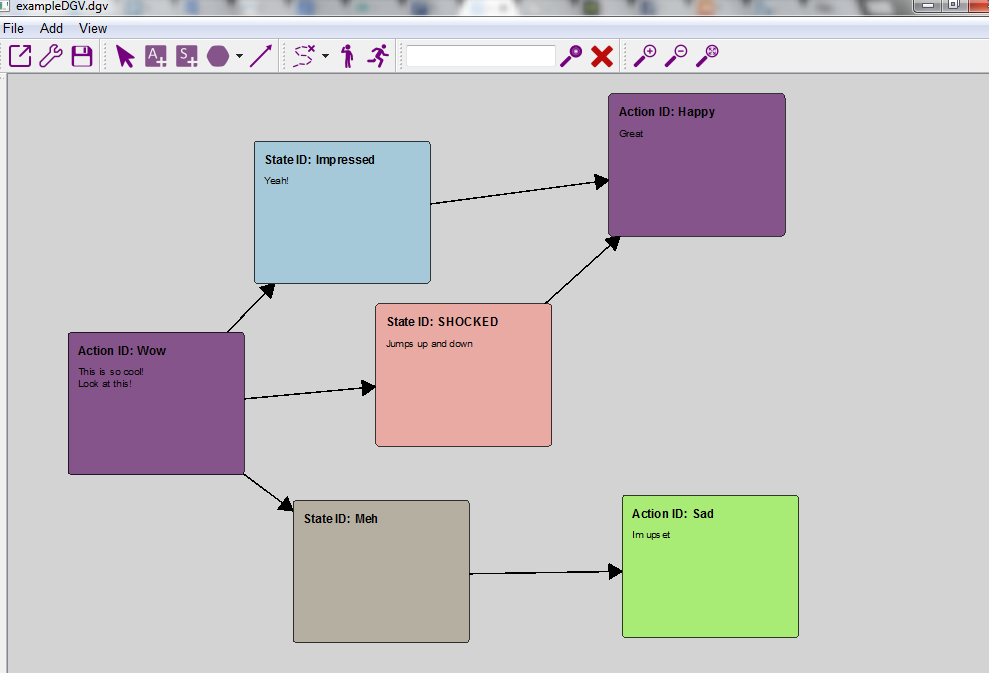

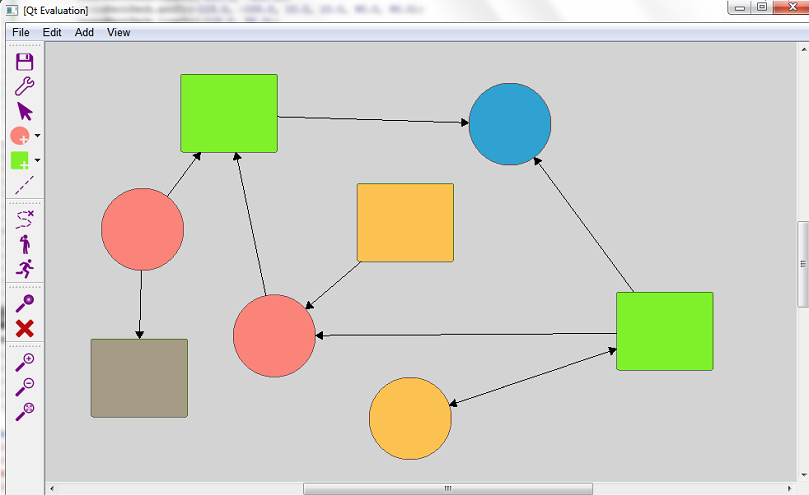

My last week in the lab! This week I was able to complete my project! I demoed it with my mentor and Anagha, and then spent the last couple of days ironing out a few kinks in the system and adding a few small features at my mentor's suggestion. The system can model data that is fed into it from a .txt file and can also save modeled data to a .txt file. The next step is to make the program work with .yaml files using a new format.
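As a rough illustration of the save/load round trip described above, here is a minimal sketch in plain C++. The `Node` fields and the `id|kind|description` line layout are assumptions made for this example; the actual format Anagha and I agreed on is not reproduced here.

```cpp
#include <istream>
#include <ostream>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical node record; the real fields may differ.
struct Node {
    std::string id;           // title/ID shown on the shape
    std::string kind;         // "action" or "state"
    std::string description;  // free-text description
};

// Write one node per line as: id|kind|description
// (assumed layout; assumes fields contain no '|' or newline characters).
void saveNodes(std::ostream& out, const std::vector<Node>& nodes) {
    for (const Node& n : nodes) {
        out << n.id << '|' << n.kind << '|' << n.description << '\n';
    }
}

// Read nodes back, skipping blank lines and lines without an id and a kind.
std::vector<Node> loadNodes(std::istream& in) {
    std::vector<Node> nodes;
    std::string line;
    while (std::getline(in, line)) {
        std::istringstream fields(line);
        Node n;
        if (std::getline(fields, n.id, '|') &&
            std::getline(fields, n.kind, '|')) {
            std::getline(fields, n.description);  // may be empty
            nodes.push_back(n);
        }
    }
    return nodes;
}
```

Because both functions take generic streams, the same code would work with a `std::ofstream` or `std::ifstream` opened on a .txt file, or with a `std::stringstream` for quick testing.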

Right now, my front-end visualization and Anagha's back-end data structure are only 'communicating' by reading and writing the same .txt files, but I have now turned my code over to Anagha so that she can add her necessary classes and files.

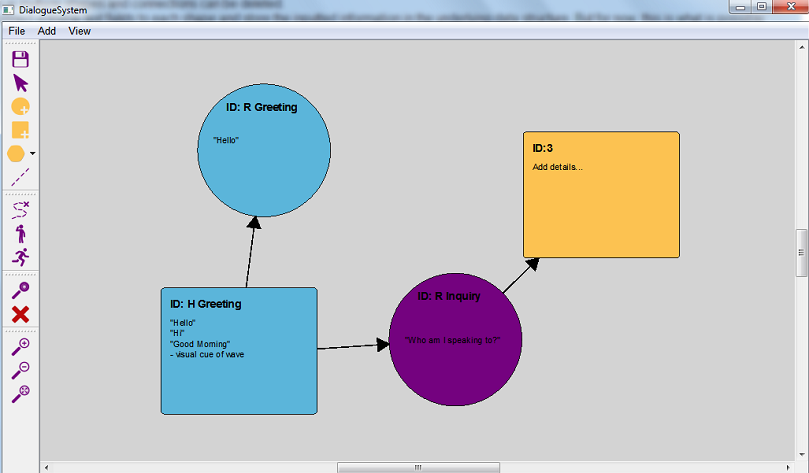

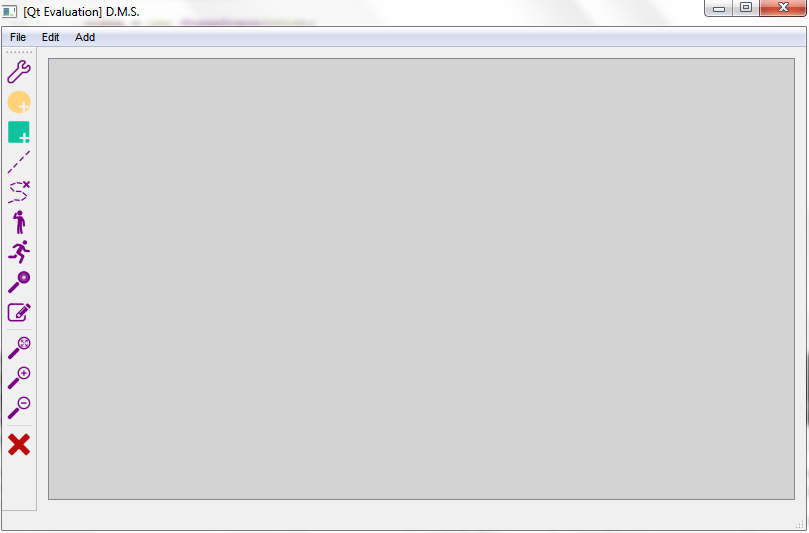

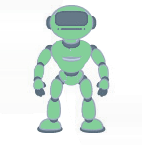

Here are a few screenshots of the final program:

Overall, I am very happy with the final result of my DMP. I think that I learned a lot and gained extremely valuable experience. I owe a huge thank you to my in-lab mentor, Ross Mead, as well as to Maja Mataric for hosting me this summer.

Week 8

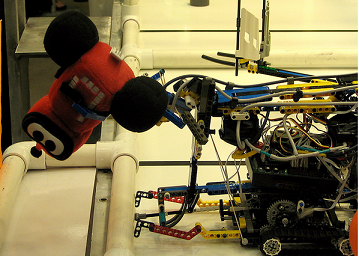

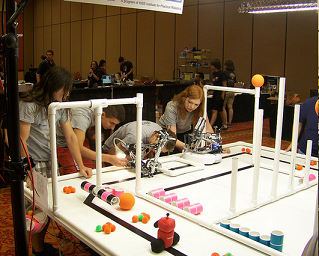

I was only in the lab about half time this week because the Global Conference on Educational Robotics is currently being hosted by USC! The conference includes Botball, a tournament between high school and middle school robotics teams. I have volunteered to work on the tournament floor a couple of times this week. It has been very fun to see so many students so interested in robotics and to see all the things their robots are capable of! There are teams attending from all over the world, including Austria, China, and Qatar.

The Botball challenges include maneuvering various objects to new positions, picking up and replacing objects, hanging hangers on rails of different heights, and even sorting objects based on color! All the robots used are completely autonomous, activated only by a start light. In addition to the Botball competition, there is an aerial drone contest in which a drone must locate an object in a maze, and a robot building day for kindergarten students using Legos and basic kits!

My mentor, Ross Mead! My mentor, Ross Mead!

|

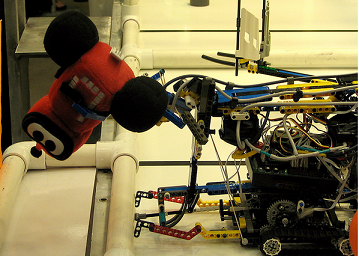

A plush 'botguy' being picked up by a team's robot! A plush 'botguy' being picked up by a team's robot!

|

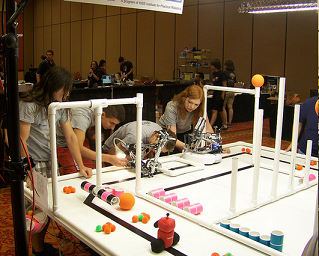

Students prepping their robots! Students prepping their robots!

|

While in the lab, I have been working on writing/saving files. Anagha and I have also agreed on the best format for storing each node's data in the files we pass between us.

Now that we are on the same page, I will also start to work on opening/reading from files!

Week 7

This week I was able to get text entry and shapes to work together! The shapes now include a title/ID field as well as a description field, both of which are contained within the shape object. I redid a good amount of my code to make it neater, more readable, and more concise. My C++ knowledge has grown a lot since I first began this project, and I noticed many things in my code that could be done better! With only a couple of weeks left, the program is really starting to look like a real one, and I am very excited to complete it!

Now it is possible to create something that looks like this:

Week 6

I have run into a lot of trouble trying to connect text entry areas with the shapes in my diagram. Thankfully, I have figured out how to store entered text, which matters more for interacting with the back-end data structure and for the functionality of the system. In terms of how it looks to the user, though, there are still lots of glitches.

After getting frustrated with this problem, I have been focusing on the other functionalities in my system, as well as meeting with the master's student, Anagha, who is developing the back end of this system.

We have agreed on a basic file format to keep track of data in a way that is useful to both of us. I am also now looking into how to read and write these kinds of files to interact with the part she has done!

Week 5

This week I was able to actually add functionality to my buttons! In order to do this, I ended up completely redoing how I set up my UI. I had originally relied mostly on Qt's built-in form creation, but I changed my approach and now add all elements programmatically. This gives me more control over each element and makes each one more accessible in the code.

But now the view can be zoomed, pop ups are generated on appropriate clicks, and, most importantly, shapes and connections can now be added!

The user can click on the shape they would like to add (circle for a robot action and square for a state observation), choose the color, then click in the view area to add that shape.

Shapes of different types (since an action cannot lead to another action and a state cannot lead to another state) can be connected using arrows. The entire graphic is movable and individual or multiple shapes and connections can be deleted.
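The connection rule above (an action may only link to a state, and a state only to an action) can be expressed as a one-line check. This is a minimal sketch in plain C++, not the code from my Qt program; `NodeKind`, `Shape`, and `canConnect` are names made up for this example.

```cpp
#include <string>

// The two shape types in the diagram: circles are robot actions,
// squares are state observations.
enum class NodeKind { Action, State };

// Hypothetical stand-in for a shape in the diagram.
struct Shape {
    std::string id;
    NodeKind kind;
};

// An arrow is allowed only between shapes of different kinds, so
// action -> action and state -> state are both rejected. A shape can
// never connect to itself either, since it has the same kind as itself.
bool canConnect(const Shape& from, const Shape& to) {
    return from.kind != to.kind;
}
```

A check like this would run before an arrow is drawn, so an invalid connection never reaches the underlying data.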

The next step will be to connect editable text fields to each shape and store the inputted information in the underlying data structure. But for now, this is what is possible:

Week 4

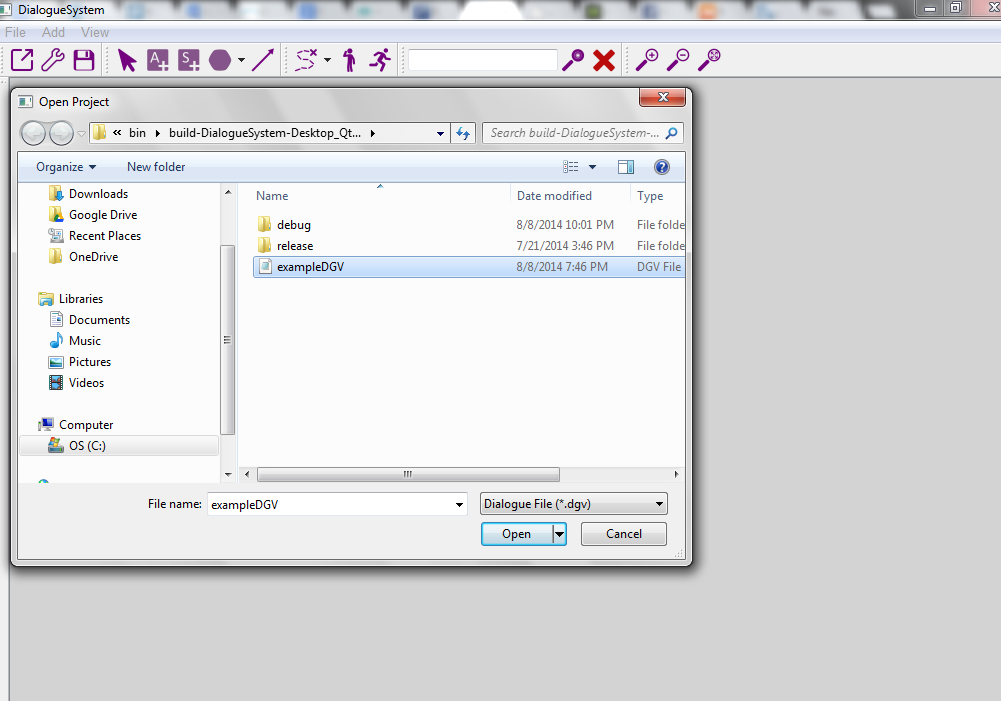

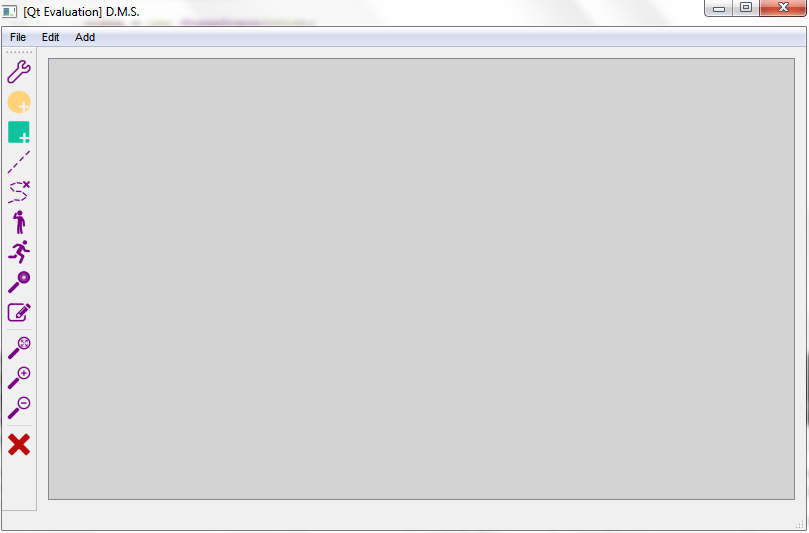

I have been working on building the basic user interface this week.

I have never worked on program design like this, so it has been very interesting for me: choosing color palettes, creating icons and graphics, and creating the general feel of the system.

So far, the program looks like this:

I am pretty happy with the appearance and did not have much difficulty setting it up using Qt (both with the built-in drag-and-drop form creation and by adding elements programmatically).

Right now, the buttons have keyboard shortcuts attached to them, but their only functionality is printing out statements about what they are *supposed to* do and eventually will do.

Week 3

This week was focused on figuring out what exactly researchers (the target users of this software) want from the system. At its most basic level, the system will need:

- A way to manually add possible actions the robot may take

- A way to manually add possible states the robot may be in (user actions)

- A way to link between these states and actions and a set of constraints so that only allowable links can be made

When the user inputs, deletes, or modifies one of these three things, the system will have to update the underlying POMDP model that is being represented.
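To make the "update the underlying model" requirement concrete, here is a minimal sketch of a model that stays consistent as nodes and links are added or deleted. It is a plain directed graph rather than a full POMDP (there are no probabilities or rewards), and the class and method names are invented for this example.

```cpp
#include <cstddef>
#include <map>
#include <set>
#include <string>
#include <utility>

enum class Kind { Action, State };

// Hypothetical stand-in for the model behind the diagram.
class DiagramModel {
public:
    // Adds a node; returns false if the ID is already taken.
    bool addNode(const std::string& id, Kind kind) {
        return nodes_.emplace(id, kind).second;
    }

    // Adds a directed link; both ends must exist and differ in kind.
    bool addLink(const std::string& from, const std::string& to) {
        auto a = nodes_.find(from);
        auto b = nodes_.find(to);
        if (a == nodes_.end() || b == nodes_.end()) return false;
        if (a->second == b->second) return false;
        return links_.emplace(from, to).second;
    }

    // Removes a node and every link that touches it, so the model
    // never keeps a link to a node that no longer exists.
    bool removeNode(const std::string& id) {
        if (nodes_.erase(id) == 0) return false;
        for (auto it = links_.begin(); it != links_.end();) {
            if (it->first == id || it->second == id) {
                it = links_.erase(it);
            } else {
                ++it;
            }
        }
        return true;
    }

    std::size_t nodeCount() const { return nodes_.size(); }
    std::size_t linkCount() const { return links_.size(); }

private:
    std::map<std::string, Kind> nodes_;
    std::set<std::pair<std::string, std::string>> links_;
};
```

The idea is that every edit in the visual editor would call one of these methods, so the drawing and the model cannot drift apart.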

Once these basics have been implemented, several other features could be added to give the system more functionality, including:

- A way to have a 'player' that is able to trace a path through the POMDP, modeling a possible sequence of interaction

- A way to have a 'planner' that is able to determine the optimal path for getting from one state to another goal state

For now, I will stick to implementing the basic features of the system and setting it up to communicate with the actual data structure models.
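As a rough idea of what the simplest possible 'planner' might look like, the sketch below finds a path with the fewest links between two nodes using breadth-first search. This is an assumption for illustration only: a real POMDP planner would reason over probabilities and rewards rather than link counts, and the `Graph` type and `shortestPath` function are made up for this example.

```cpp
#include <algorithm>
#include <map>
#include <queue>
#include <string>
#include <vector>

// Adjacency list: node ID -> IDs of the nodes it links to.
using Graph = std::map<std::string, std::vector<std::string>>;

// Breadth-first search for a path with the fewest links from start to goal.
// Returns the node IDs along the path, or an empty vector if none exists.
std::vector<std::string> shortestPath(const Graph& g,
                                      const std::string& start,
                                      const std::string& goal) {
    std::map<std::string, std::string> parent;  // node -> predecessor
    std::queue<std::string> frontier;
    parent[start] = start;
    frontier.push(start);

    while (!frontier.empty()) {
        std::string cur = frontier.front();
        frontier.pop();
        if (cur == goal) break;
        auto it = g.find(cur);
        if (it == g.end()) continue;  // node has no outgoing links
        for (const std::string& next : it->second) {
            if (parent.count(next) == 0) {  // not visited yet
                parent[next] = cur;
                frontier.push(next);
            }
        }
    }

    std::vector<std::string> path;
    if (parent.count(goal) == 0) return path;  // goal was never reached

    // Walk back from the goal to the start, then reverse.
    for (std::string n = goal; n != start; n = parent[n]) {
        path.push_back(n);
    }
    path.push_back(start);
    std::reverse(path.begin(), path.end());
    return path;
}
```

A 'player' could reuse the same graph by stepping through a returned path one node at a time and highlighting each shape in turn.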

Week 2

It is very important that the system is usable and general enough to apply to all researchers and all robots in the lab.

This week I worked on developing user interfaces using the Qt development framework.

Qt provides an easy development environment for building GUIs, including a live GUI form that allows for easy drag-and-drop addition of elements to a UI.

I am going through the beginning tutorials to gain a feel for what is possible to create using Qt.

Not only am I learning to use the tools Qt offers, I am also learning how to program in C++, the language Qt works with.

Week 1

The system I am developing will allow researchers to map between actions a robot takes and actions a human user takes throughout an interaction or dialogue sequence. It will allow researchers to examine the paths available in a dialogue between human and robot.

The system will be editable in two ways:

1. The possible states and actions for the human and robot will be input manually by the user, who will make connections and sequences themselves.

2. The possible states and actions will be input as a compiled file and the system will automatically create a representation as a POMDP (a partially observable Markov decision process).

In order for the system to work in the first case, it must update its internal structure, not only the visual representation.

This will mean creating and updating a POMDP with each new line of dialogue or action and its connection(s) that the user adds.
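For reference, a POMDP is usually written as a tuple of the following pieces. This is the standard textbook definition, not something specific to this system, and which pieces the back end will actually store is still to be decided.

```latex
\[
  \text{POMDP} = (S,\, A,\, T,\, R,\, \Omega,\, O,\, \gamma)
\]
\begin{align*}
  S &: \text{set of states} \\
  A &: \text{set of actions} \\
  T(s' \mid s, a) &: \text{probability of moving to state } s' \text{ after taking action } a \text{ in state } s \\
  R(s, a) &: \text{reward for taking action } a \text{ in state } s \\
  \Omega &: \text{set of observations} \\
  O(o \mid s', a) &: \text{probability of observing } o \text{ after action } a \text{ leads to state } s' \\
  \gamma \in [0, 1) &: \text{discount factor}
\end{align*}
```

In terms of the dialogue mapping described above, the robot's possible actions would correspond to $A$, and what the human user does would feed into the states and observations.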
