Computer Science | Multimedia Computing

Senior May 2013 Graduation

Email: karen.c.aragon@gmail.com

Mentor Extraordinaire:

Professor | University of Minnesota

Areas of interest: Artificial Intelligence, Robotics, Intelligent Agents.

Email: gini at cs dot umn dot edu

My research will focus on creating an interactive educational game using a humanoid NAO robot endowed with the ability to display emotions. The aim is to create a comfortable learning environment in which the humanoid robot can communicate effectively with a user and facilitate learning.

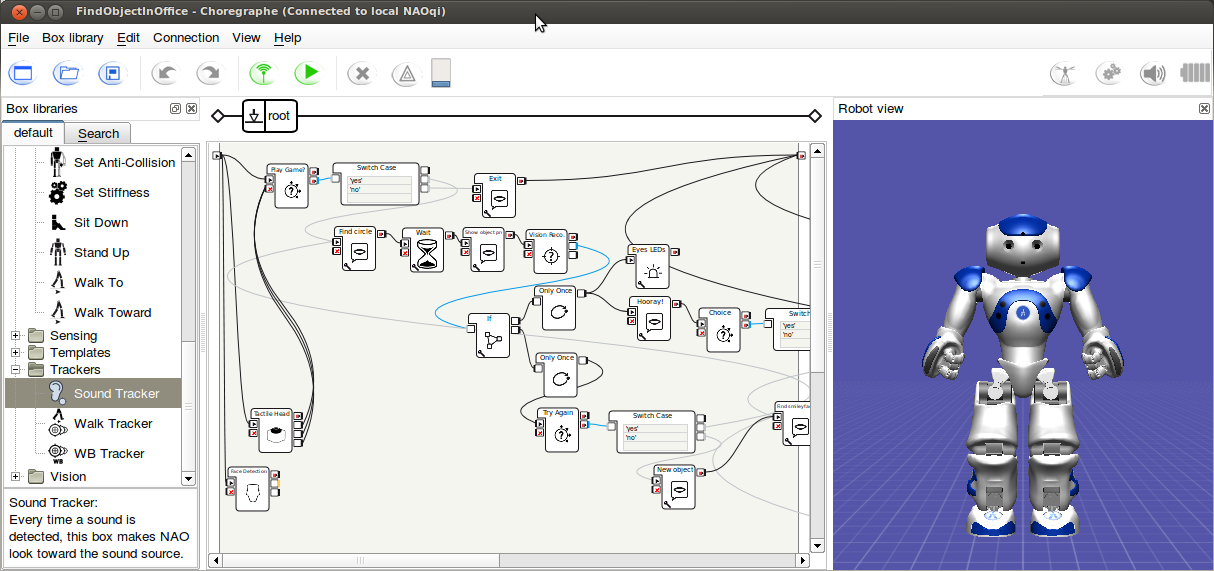

The first week consisted of an independent crash course in Choregraphe, a software interface for the Aldebaran NAO.

DAY 1 Jun 11: Walked through the beautiful Minnesota campus to meet Professor Maria Gini (whom I will refer to as Maria) at her office in Kenneth Keller Hall. As I approached her office, a man bearing a close resemblance to Larry David from Curb Your Enthusiasm quickly dipped in and out and muttered a goodbye. I later found out that the man was Maria's husband, who is also part of the CS department at the University. After a quick introduction and a tour of the lab, I sat down and researched the NAO and the Choregraphe API. But not before playing around with the NAO itself: it's surprisingly heavy, and, unsurprisingly, anthropomorphically cute.

DAY 2 Jun 12: Today was spent getting familiar with the Choregraphe interface. Round of applause to the user interface designers for making the GUI extremely novice-friendly. I was able to run very interesting applications on the NAO right out of the box. The first thing I did was program the NAO to say hello to me and wave with his arms. Then I had the NAO recognize and track my face, and the rest of the day was spent playing ball, so to speak: the NAO was able to walk and follow a red ball I had in my hand, and it backed up whenever the ball came too close to it.

DAY 3 Jun 13: Had lunch at a Chinese restaurant with Sam and Ella (two other DREU students who are doing virtual reality simulations with a wheelchair), Elizabeth (a PhD student at UofM and Lego enthusiast), and Maria. The big message from Maria was "get your PhD!" I do plan on going to grad school, but perhaps after getting a job first. I spent the majority of the day going through the Python code from the Choregraphe examples. The Python convention I went to days earlier proved beneficial.

DAY 4 Jun 14: Today I programmed my first NAO application, though it is still very buggy. It's essentially an educational game made specifically for kids. First, the robot asks the child if he/she would like to play. If yes, the robot asks the child to find a specific object (a ball, triangle, circle, etc.) in a group of flash cards. The child then shows the robot the flash card. If it is the image the robot asked for, the robot says "Hooray!"; if not, it apologizes and asks the child to try again. To implement the game I first had to train the robot by teaching it the set of objects; once the NAO was able to recall them, programming the game was easy.
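The round-by-round logic of the flashcard game can be sketched in plain Python, away from the robot. This is a minimal sketch under assumptions: `recognize`, `say`, and `wants_to_play` are hypothetical callables standing in for the NAO's trained vision recognition, text-to-speech, and yes/no speech recognition (on the robot these would be Choregraphe boxes or NAOqi calls), and the three-tries limit is an illustrative choice, not part of the original game.

```python
import random
from typing import Callable, List, Optional


def play_round(target: str,
               recognize: Callable[[], Optional[str]],
               say: Callable[[str], None],
               max_tries: int = 3) -> bool:
    """Ask for one object; return True if the child shows the right card."""
    say("Can you find the %s?" % target)
    for _ in range(max_tries):
        seen = recognize()  # label of the card held up, or None if nothing recognized
        if seen == target:
            say("Hooray!")
            return True
        say("Sorry, that's not it. Try again!")
    return False


def play_game(objects: List[str],
              wants_to_play: Callable[[], bool],
              recognize: Callable[[], Optional[str]],
              say: Callable[[str], None],
              rounds: int = 3) -> int:
    """Run the whole game; return how many rounds were answered correctly."""
    say("Would you like to play a game?")
    if not wants_to_play():
        say("Okay, maybe later!")
        return 0
    score = 0
    # Pick distinct objects to ask for, at most one round per known object.
    for target in random.sample(objects, min(rounds, len(objects))):
        if play_round(target, recognize, say):
            score += 1
    return score
```

Passing the robot-specific pieces in as functions keeps the game flow testable on a laptop with scripted answers; for example, `play_round("ball", lambda: "ball", print)` prints the question followed by "Hooray!".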

DAY 5 Jun 15: I fine-tuned my program so that the robot can have a more engaging and intuitive conversation with the user. I started with a nice greeting from the NAO and added some hand gestures and head movements similar to the movements humans make when engaged in conversation. The more I model the robot, the more I realize that much of designing robots stems directly from an understanding of ourselves.

Personal: Having a great time in all this lovely nature! I'm staying in the Lake Calhoun area but soon moving to Dinkytown, a small enclave a few blocks away from the Walter lab. Went around and did some thrift store shopping, scoped out some co-ops, did a lot of reading, and watched a movie at the Lagoon theater.

Making Robots Personal.

DAY 1 Jun 18: With the educational game I am building in mind, I spent the morning reading papers on the NAO and education. There's significant research going on at Notre Dame on using the NAO to aid children with autism: because the NAO has very limited and predictable displays of emotion, children with autism find it easier to interact with it. I was also interested in the use of the NAO to help children with ADHD; in most cases, children were more engaged when interacting with the robot. I'm still formulating my research topic, but I think I am definitely headed in the direction of Human-Robot Interaction: investigating ways of improving the interaction we have with robots, as well as ways the NAO can be used to interact with and engage children.

DAY 2 Jun 19: Using Choregraphe's built-in vision recognition system, I was able to train the robot to recognize shapes, colors, numbers, ABCs, and a few words. I went to the dollar store over the weekend in search of flashcards and found very cute Sesame Street-themed sets.

DAY 3 Jun 20: After some trial and error, I found that the NAO's vision recognition system had a much easier time detecting objects on a flashcard than actual 3D objects. For example, the NAO misidentified a real red ball almost 50% of the time, but was able to detect an image of a red ball on a flashcard. For now, I am sticking to the flashcards until I can dig into the algorithm the NAO API uses. It's coming together very nicely!

DAY 4 Jun 21: Had a short day today because I am flying back to NYC until next Thursday. Before I left I had a nice talk with Maria Gini. I explained that I wanted to conduct a user study with children to measure their perception of interacting with the robot. However, she explained the difficulties of obtaining IRB approval and suggested I focus instead on ideas that do not involve children. I am now focusing on emotion and other aspects of social humanoid robots in our everyday lives, following in the footsteps of Cynthia Breazeal and Brian Scassellati.

DAY 5 Jun 22: Today was spent reading papers on Emotional and Social Robots and figuring out how to apply the methodologies to the NAO. I am also preparing for a presentation where I will be demonstrating the educational game I have developed.

PERSONAL: Always, always, always enjoy being in NYC. This is me walking the High Line.

DAY 1 Jul 2: Spent the day modeling different emotions on the NAO. I first focused on anger and frustration because I thought those would be the easiest and most fun to model. Using Choregraphe, I was easily able to specify movements at different keyframes. I later went on to model happy and sad.
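Keyframing, as used in Choregraphe's timeline, means fixing joint angles at a few moments and letting the software fill in the motion between them. The sketch below illustrates the idea with plain linear interpolation; this is a simplification and not necessarily the interpolation Choregraphe itself uses. The joint names follow NAO's naming (HeadPitch, RShoulderPitch), but the angle values are invented for illustration and are not tuned NAO poses.

```python
from bisect import bisect_right
from typing import Dict, List, Tuple

# A keyframe is (time in seconds, {joint name: angle in radians}).
Keyframe = Tuple[float, Dict[str, float]]


def pose_at(keyframes: List[Keyframe], t: float) -> Dict[str, float]:
    """Return joint angles at time t by linear interpolation between keyframes.

    Assumes keyframes are sorted by time and every keyframe sets the same joints.
    """
    times = [time for time, _ in keyframes]
    if t <= times[0]:
        return dict(keyframes[0][1])
    if t >= times[-1]:
        return dict(keyframes[-1][1])
    i = bisect_right(times, t)  # now times[i - 1] <= t < times[i]
    (t0, pose0), (t1, pose1) = keyframes[i - 1], keyframes[i]
    alpha = (t - t0) / (t1 - t0)  # fraction of the way from keyframe i-1 to i
    return {joint: pose0[joint] + alpha * (pose1[joint] - pose0[joint]) for joint in pose0}


# Illustrative "anger" gesture: head and right arm move away from rest and back.
# The angle values are made up for the example.
anger = [
    (0.0, {"HeadPitch": 0.0, "RShoulderPitch": 1.4}),
    (0.5, {"HeadPitch": 0.3, "RShoulderPitch": -0.5}),
    (1.0, {"HeadPitch": 0.0, "RShoulderPitch": 1.4}),
]
```

Sampling `pose_at(anger, t)` at a fixed rate gives the in-between poses; more keyframes (or a smoother curve than a straight line) make the motion look less robotic.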

DAY 2 Jul 3: After spending yesterday modeling, which was really enjoyable because it harkened back to my 3D modeling class in Maya, I tested the motions on an actual NAO. There were a few hiccups, particularly with keeping the robot balanced: any sudden movement would have the NAO tilting over, ready to fall, but I was there to catch it every time. I tweaked a lot of the leg positions to keep it steady.

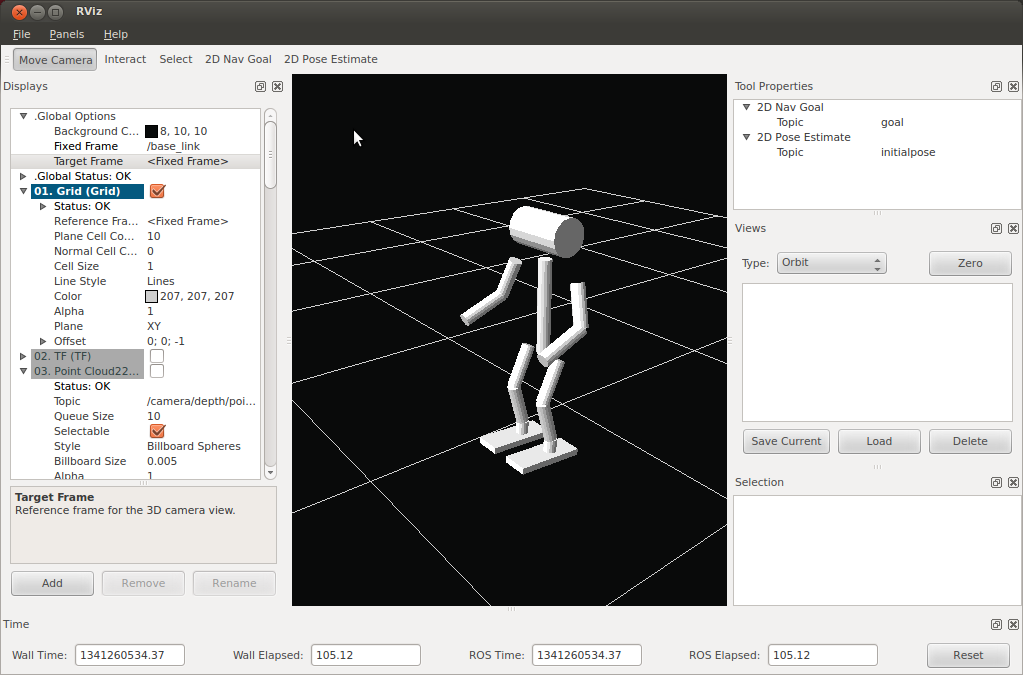

DAY 3 Jul 4: I focused on getting ROS (Robot Operating System) and NAOqi working on my laptop running Ubuntu 12.04. Surprisingly, it went very smoothly, which is rare for this kind of installation. After about 30 minutes I was able to send simple motor commands to the simulated NAO in RViz.

DAY 4: After spending the week modeling the emotions in Choregraphe, I tried them out on the actual NAO. They need a lot of tweaking!

DAY 5: Went back and evaluated the emotions in Choregraphe using the motion keys. The hardest part was keeping the NAO from losing its balance. I paid special attention to the left and right HipYawPitch, HipPitch, KneePitch, and AnklePitch joints.

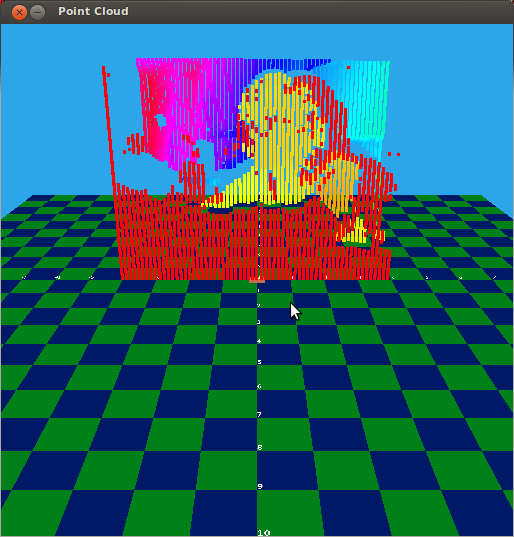

PERSONAL: For my past research project I used the Kinect with the iRobot. In my free time I've been programming a couple of applications in Java with the Kinect. Here is the point cloud output from the Kinect: this is me in my room.

ROS (Robot Operating System) by Willow Garage

DAY 1: In preparation for her honors CS class in the fall, Professor Gini asked me to investigate using ROS with the NAO. These steps show how to run ROS, NAOqi, and RViz after downloading everything necessary (note: run each step in a separate terminal):

1. Start ROS: `$ roscore`
2. Launch NAOqi: `$ ~/naoqi/naoqi-sdk-1.12.3-linux32/naoqi --verbose --broker-ip 127.0.0.1`
3. Start the nao_driver package: `$ LD_LIBRARY_PATH=~/naoqi/naoqi-sdk-1.12.3-linux32/lib:$LD_LIBRARY_PATH NAO_IP=127.0.0.1 roslaunch nao_driver nao_driver_sim.launch`
4. Start the robot state publisher: `$ roslaunch nao_description nao_state_publisher.launch`
5. Start RViz: `$ rosrun rviz rviz`

From another terminal you can send commands directly to the simulated robot. You can also edit the robot's appearance in RViz. At night I biked to the Stone Arch Bridge.

DAY 2: To understand the ROS NAO stack, I read through all the literature on the NAO stack in ROS (http://www.ros.org/wiki/nao_robot):
+ nao_robot: contains nodes to integrate the NAO into ROS; can be installed on the robot; should be used with nao_common_stack (for more functionality)
+ nao_common_stack
+ humanoid_navigations_stack: contains general packages for humanoid/biped robots
+ nao_driver: compatible with NAO API version 1.6; connects to a simulated NAO by wrapping the NAOqi API in Python; requires the lib directory of the Aldebaran SDK to be in PYTHONPATH

DAY 3: ROS commands you should know:
+ rospack = ros + pack(age): provides information related to ROS packages
+ rosstack = ros + stack: provides information related to ROS stacks
+ roscd = ros + cd: changes directory to a ROS package or stack
+ rosls = ros + ls: lists files in a ROS package
+ roscp = ros + cp: copies files from/to a ROS package
+ rosmsg = ros + msg: provides information related to ROS message definitions
+ rossrv = ros + srv: provides information related to ROS service definitions
+ rosmake = ros + make: makes (compiles) a ROS package

DAY 4: ROS Action Library: In any large ROS-based system, there are cases when someone would like to send a request to a node to perform some task and also receive a reply to the request. This can currently be achieved via ROS services.

In some cases, however, if the service takes a long time to execute, the user might want the ability to cancel the request during execution or to get periodic feedback about how the request is progressing. The actionlib package provides tools to create servers that execute long-running goals that can be preempted. It also provides a client interface for sending requests to the server.

DAY 5: Took a bit of a side track from ROS to look at the NAOqi API from Aldebaran as well as to learn more Python.
+ Developer's guide: http://developer.aldebaran-robotics.com/doc/1-10/index_doc.html
+ Face detection: http://www.aldebaran-robotics.com/documentation/naoqi/vision/alfacedetection.html

PERSONAL: + Went to Willow State National Park. + Ate the BEST Vietnamese I've ever had. + Biked over the Stone Arch Bridge. + Swam and waded beneath a waterfall. + Moved into new apartment with air conditioner. + Regrettably went to Mall of America. + Had authentic Dim Sum. + Watched a French movie about the Child Protection Police. + Swam in Lake Calhoun and Lake Harriet.

DAY 1: This week was spent getting familiar with NAOqi and Python, specifically the action client/server in ROS. Tutorials I worked through:
+ Writing a Simple Action Server using the Execute Callback (Python)
+ Writing a Simple Action Client (Python)
+ Running an Action Client and Server

Client-server interaction: the action client and action server communicate via the ROS Action Protocol, which is built on top of ROS messages. The client and server provide a simple API for users to request goals (on the client side) or to execute goals (on the server side) via function calls and callbacks. For the client and server to communicate, you need to define the goal, feedback, and result messages:
+ Goal: sent to an ActionServer by an ActionClient
+ Feedback: gives the server a way to report progress to the ActionClient
+ Result: sent from the ActionServer to the ActionClient when the goal is completed

An important component of the action server is the ability to let an action client request that the goal be canceled, or "preempted". When a client requests that a goal be canceled, the server's execute callback should notice the request (is_preempt_requested()) and stop, calling set_preempted().
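To see how goal, feedback, result, and preemption fit together without a ROS install, here is a toy, single-process model of the protocol. It is not actionlib: `ToyActionServer` is a hypothetical class whose method names (`publish_feedback`, `is_preempt_requested`, `set_succeeded`, `set_preempted`) mirror the ones SimpleActionServer offers in Python, and a worker thread stands in for the server node running the execute callback.

```python
import threading
import time
from typing import Callable, List, Optional


class ToyActionServer:
    """Hypothetical stand-in for actionlib's SimpleActionServer (NOT the real API)."""

    def __init__(self, execute_cb: Callable[["ToyActionServer", int], None]) -> None:
        self._execute_cb = execute_cb
        self._preempt = threading.Event()
        self.feedback: List[int] = []
        self.result: Optional[int] = None
        self.state = "PENDING"

    # ---- used by the execute callback (server side) ----
    def is_preempt_requested(self) -> bool:
        return self._preempt.is_set()

    def publish_feedback(self, progress: int) -> None:
        self.feedback.append(progress)

    def set_succeeded(self, result: int) -> None:
        self.result, self.state = result, "SUCCEEDED"

    def set_preempted(self) -> None:
        self.state = "PREEMPTED"

    # ---- used by the client ----
    def send_goal(self, goal: int) -> threading.Thread:
        """Start executing `goal` in a worker thread and return that thread."""
        self.state = "ACTIVE"
        worker = threading.Thread(target=self._execute_cb, args=(self, goal))
        worker.start()
        return worker

    def cancel_goal(self) -> None:
        """Ask the running goal to stop; the execute callback must notice it."""
        self._preempt.set()


def count_to(server: ToyActionServer, goal: int) -> None:
    """Execute callback: count up to `goal`, publishing each step as feedback."""
    for step in range(1, goal + 1):
        if server.is_preempt_requested():
            server.set_preempted()
            return
        server.publish_feedback(step)
        time.sleep(0.01)  # pretend each step is slow work
    server.set_succeeded(goal)


server = ToyActionServer(count_to)
worker = server.send_goal(50)
time.sleep(0.05)
server.cancel_goal()  # preempt partway through the goal
worker.join()
print(server.state, len(server.feedback))
```

In real actionlib the execute callback receives only the goal, and the client side would use `actionlib.SimpleActionClient` with `send_goal` and `cancel_goal`; the toy folds client and server into one object to keep the example short.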

DAY 2: More awesome Pythonic things and ROS + NAOqi stuff.

Python stuff: rospy.spin() waits for messages on a topic and goes to the callback function when new messages arrive.

Writing a Simple Action Server using the Goal Callback Method: uses the simple_action_server library to create an averaging action server, and demonstrates how to use an action to process or react to incoming data from ROS nodes.
1. First create the action file, which defines the goal, result, and feedback messages (the message classes are generated automatically).
2. Create the averaging_server.cpp file.
3. Create the executable, then rosrun it.

I kept getting this error: undefined reference to 'typeinfo for boost::detail::thread_data_base'. This typically indicates that you haven't set up your CMakeLists.txt correctly: http://answers.ros.org/question/37575/how-to-solve-undefined-reference-to-symbol-vtable/

Callback: executable code that is passed as a parameter to other code (http://en.wikipedia.org/wiki/Callback_(computer_programming)). You define the function yourself, but you do not call it yourself. Usually you pass the function pointer to another component, which calls your function when appropriate. In ROS, a callback is in most cases a message handler: you define the handler and pass it to subscribe. You never call it yourself; whenever a message arrives, ROS calls your message handler and passes it the new message so you can deal with it.
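The callback idea can be shown without ROS at all. In the sketch below, `ToyTopicBus` is a hypothetical in-process stand-in for ROS topics (not rospy): we hand `on_number` to `subscribe`, and the bus, not our code, decides when to call it. In rospy, the equivalent registration would look like `rospy.Subscriber("random_number", Float32, on_number)` followed by `rospy.spin()`.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List


class ToyTopicBus:
    """Hypothetical in-process message bus used only to illustrate callbacks."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        # We hand the function over; the bus decides when to call it.
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, msg: Any) -> None:
        for callback in self._subscribers[topic]:
            callback(msg)  # the bus, not our code, invokes the handler


received: List[float] = []


def on_number(msg: float) -> None:
    """Message handler: we define it, but never call it directly."""
    received.append(msg)


bus = ToyTopicBus()
bus.subscribe("random_number", on_number)
bus.publish("random_number", 0.42)
bus.publish("other_topic", 1.0)  # nobody subscribed, so nothing happens
print(received)  # [0.42]
```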

DAY 3: Writing a Threaded Simple Action Client: uses the simple_action_client library to create an averaging action client. It spins a thread, creates an action client, and sends a goal to the action server. Boost library (C++) reference for bind with member pointers: http://www.boost.org/doc/libs/1_43_0/libs/bind/bind.html#with_member_pointers

DAY 4: Running an Action Server and Client with Other Nodes: running the averaging action server (AS) and action client (AC) with another data node, then visualizing the channel output and the node graph.
1. Create the data node.
2. Generate random numbers and publish them on the random_number topic.

Basically, the data node publishes random numbers on the random_number topic, and the AS subscribes to the same topic.
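The averaging server's core job, turning a stream of numbers from the data node into a mean and standard deviation, can be sketched in plain Python. This is an illustration in the spirit of the averaging tutorial, not its code: a generator stands in for the random_number topic, and the Gaussian parameters and sample count are arbitrary assumptions.

```python
import itertools
import math
import random
from typing import Iterable, Tuple


def average_samples(stream: Iterable[float], samples: int) -> Tuple[float, float]:
    """Consume up to `samples` values from `stream`; return (mean, std_dev).

    Keeps running sums so each new message updates the statistics in O(1),
    the way a subscriber callback would.
    """
    if samples <= 0:
        raise ValueError("samples must be positive")
    count, total, total_sq = 0, 0.0, 0.0
    for x in stream:
        count += 1
        total += x
        total_sq += x * x
        if count == samples:
            break
    if count == 0:
        raise ValueError("stream was empty")
    mean = total / count
    # Population variance E[x^2] - E[x]^2, clamped at 0 against rounding error.
    std_dev = math.sqrt(max(total_sq / count - mean * mean, 0.0))
    return mean, std_dev


# Stand-in for the data node: an endless stream of random numbers, as if
# published on the random_number topic (hypothetical distribution parameters).
rng = random.Random(0)
data_node = (rng.gauss(5.0, 1.0) for _ in itertools.count())
mean, std_dev = average_samples(data_node, samples=100)
print(mean, std_dev)
```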

DAY 5: Since my interest lies in advancing human-robot interaction, I looked into ways I could use the sound data from the NAO's microphones. One problem is how to access that data to determine the tone of a user's voice and have the NAO act accordingly. For example, if the user sounds bored, the NAO can try to motivate the user in a learning setting.

Accessing sound data from the NAO's microphones, interleaving vs. de-interleaving: the most generic description I can give that should fit all cases is this: when two or more things are interleaved, pieces of each thing are shuffled and mixed together, so to de-interleave is to sort the pieces back out so that each thing is whole and individual again. For audio, interleaved means the samples from each microphone channel alternate within a single buffer.

Next step: write an ActionServer that subscribes to a sound data topic, to which a sound data node publishes the sound data.
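De-interleaving a multichannel audio buffer comes down to a strided slice once the samples are decoded. The sketch assumes signed 16-bit little-endian samples and a known channel count (for example, four channels for the NAO's four microphones); both are assumptions about the buffer format, so check what ALAudioDevice is actually configured to deliver before relying on them.

```python
import struct
from typing import List


def deinterleave(buffer: bytes, n_channels: int) -> List[List[int]]:
    """Split interleaved signed 16-bit little-endian PCM into one list per channel.

    Interleaved layout, one frame after another:
        [ch0, ch1, ..., ch(n-1), ch0, ch1, ..., ch(n-1), ...]
    """
    if n_channels <= 0:
        raise ValueError("n_channels must be positive")
    if len(buffer) % 2:
        raise ValueError("buffer length must be a multiple of 2 bytes")
    n_samples = len(buffer) // 2
    if n_samples % n_channels:
        raise ValueError("buffer does not hold a whole number of frames")
    samples = struct.unpack("<%dh" % n_samples, buffer)
    # Channel c is every n_channels-th sample, starting at offset c.
    return [list(samples[c::n_channels]) for c in range(n_channels)]


def interleave(channels: List[List[int]]) -> bytes:
    """Inverse operation: shuffle per-channel samples back into one buffer."""
    flat = [sample for frame in zip(*channels) for sample in frame]
    return struct.pack("<%dh" % len(flat), *flat)


buf = interleave([[1, 2, 3], [10, 20, 30]])
print(deinterleave(buf, 2))  # [[1, 2, 3], [10, 20, 30]]
```

Once each channel stands alone, per-channel features (loudness, pitch) can be computed, which is the kind of input a tone-of-voice classifier would need.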

PERSONAL: + Minnehaha Falls

Understanding the Freiburg Stacks, NAOqi API and how to run Python code on the robot directly

** Warning: this week will be very technical as I dive deeper into the NAOqi API **

DAY 1: Using the NAOqi API (this is taken directly from the Aldebaran site). Making a Python module that reacts to events: here we want the robot to say "Hello, you" every time it detects a human face. To do this, we need to subscribe to the FaceDetected event, raised by the ALFaceDetection module. If you read the documentation of ALMemoryProxy::subscribeToEvent(), you will see you need a module name and the name of a callback. So we need to write a NAOqi module in Python. To create a module in Python, we also need a broker.
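Why does subscribeToEvent() want a module name and a callback name rather than a function? Because the event is delivered by looking the module up by name and calling the named method on it. The hardware-free toy below mimics that dispatch. `ToyMemory` is hypothetical (not ALMemory), although its method names echo ALMemory's subscribeToEvent, unsubscribeToEvent, and raiseEvent, and the greeter follows the pattern of unsubscribing while it reacts so one face does not trigger a burst of greetings.

```python
from typing import Any, Dict, List, Tuple


class ToyMemory:
    """Hypothetical stand-in for ALMemory's event dispatch (NOT the NAOqi API)."""

    def __init__(self) -> None:
        self.modules: Dict[str, Any] = {}  # modules known by name, as in a broker
        self._subscribers: Dict[str, List[Tuple[str, str]]] = {}

    def subscribeToEvent(self, event: str, module_name: str, callback_name: str) -> None:
        # Only names are stored; the callback is looked up when the event fires.
        self._subscribers.setdefault(event, []).append((module_name, callback_name))

    def unsubscribeToEvent(self, event: str, module_name: str) -> None:
        self._subscribers[event] = [
            sub for sub in self._subscribers.get(event, []) if sub[0] != module_name
        ]

    def raiseEvent(self, event: str, value: Any) -> None:
        # Iterate over a copy so callbacks may unsubscribe/resubscribe safely.
        for module_name, callback_name in list(self._subscribers.get(event, [])):
            callback = getattr(self.modules[module_name], callback_name)
            callback(event, value, module_name)


class HumanGreeter:
    """Says "Hello, you" whenever a FaceDetected event carries face data."""

    def __init__(self, name: str, memory: ToyMemory) -> None:
        self.name = name
        self.memory = memory
        self.spoken: List[str] = []
        memory.modules[name] = self
        memory.subscribeToEvent("FaceDetected", name, "onFaceDetected")

    def onFaceDetected(self, key: str, value: Any, message: str) -> None:
        # Unsubscribe while reacting so one face does not trigger a burst of greetings.
        self.memory.unsubscribeToEvent("FaceDetected", self.name)
        if value:  # in this toy, an empty value means no face is seen
            self.spoken.append("Hello, you")
        self.memory.subscribeToEvent("FaceDetected", self.name, "onFaceDetected")


memory = ToyMemory()
greeter = HumanGreeter("HumanGreeter", memory)
memory.raiseEvent("FaceDetected", ["face data"])
print(greeter.spoken)  # ['Hello, you']
```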

DAY 2: Literature on the Freiburg stack (keywords: NAOqi API, packages, modules, proxy, broker). The NAO is a commercially available humanoid robot built by Aldebaran Robotics. The ROS driver is provided by Freiburg's Humanoid Robots Lab in alufr-ros-pkg; it essentially wraps the needed parts of Aldebaran's NAOqi API and makes them available in ROS. It also provides a complete robot model (URDF). A port of rospy to the NAO is available in brown-ros-pkg.

Programming for the NAO revolves around modules. There are several default modules that provide many utilities, like ALMotion (for moving the robot), ALMemory (for safe data storage and interchange), or ALLogger, which is used in the HelloWorld example for message output. And of course, you can create your own. Modules communicate with each other using proxies. A proxy is nothing more than an object that lets you call functions from other modules, passing parameters and such.

The other important concept is the broker. A broker is an executable that runs on the robot, listening on a given port for incoming commands (like a server). Brokers can load a list of modules at startup and then manage the communication between them. By default, the robot starts a broker called "MainBroker" that listens on port 9559 and loads the list of modules found in autoload.ini. You can create your own broker as well.
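The broker/module/proxy relationship can be modeled in a few lines. This is a toy, not NAOqi: `ToyBroker`, `ToyProxy`, and `ToyTextToSpeech` are hypothetical, everything runs in one process, and where a real proxy would make a remote call to the broker's port (9559 for MainBroker), `ToyProxy` simply forwards the method lookup to the module registered under that name.

```python
from typing import Any, Callable, Dict, List


class ToyBroker:
    """Hypothetical stand-in for a NAOqi broker: a named process hosting modules."""

    def __init__(self, name: str, port: int) -> None:
        self.name, self.port = name, port
        self.modules: Dict[str, Any] = {}

    def load(self, module_name: str, module: Any) -> None:
        """Register a module, as a broker does for each module in autoload.ini."""
        self.modules[module_name] = module


class ToyProxy:
    """Hypothetical stand-in for ALProxy: calls methods of a module by name."""

    def __init__(self, module_name: str, broker: ToyBroker) -> None:
        self._module_name = module_name
        self._broker = broker

    def __getattr__(self, method: str) -> Callable[..., Any]:
        # Only called for attributes not found normally, i.e. the module's methods.
        # A real proxy would send a remote call to the broker's port here.
        module = self._broker.modules[self._module_name]
        return getattr(module, method)


class ToyTextToSpeech:
    """Fake module that records what it was asked to say."""

    def __init__(self) -> None:
        self.said: List[str] = []

    def say(self, text: str) -> None:
        self.said.append(text)


main_broker = ToyBroker("MainBroker", 9559)
main_broker.load("ALTextToSpeech", ToyTextToSpeech())
tts = ToyProxy("ALTextToSpeech", main_broker)  # cf. ALProxy("ALTextToSpeech", ip, 9559)
tts.say("Hello")
```

The caller only ever holds the proxy, which is the point of the design: the same `tts.say(...)` line works whether the module lives in this process or, on the real robot, in a broker across the network.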

DAY 3: Today was spent writing very simple Python scripts that send commands directly to the NAO robot using the NAOqi API. These are the instructions for running Python code on the robot:
1. Upload the script to your NAO directory, e.g. /home/naoqi/nameoffile.py
2. Edit your autoload.ini file so that it lists /home/naoqi/nameoffile.py under the python section.

DAY 4: Today I investigated how to get audio data from the NAO. Since the processSound examples are in C++, I had to figure out how to build them, which wasn't hard at all because I used the GUI (cmake-gui). Here is a helpful excerpt from the users forum on the Aldebaran site that explains how to use the ALAudioDevice module:

Audio Transmit/Receive: Hi, No, you are not "un peu faux" (a little wrong). To retrieve the buffers coming out of ALAudioDevice, you need to make a C++ module that subscribes to ALAudioDevice and gets called regularly by ALAudioDevice (every 170 ms by default) on its callback function, called "processSound" if your module is on the robot and "processSoundRemote" if your module runs on your PC. For the moment, your C++ module also needs to inherit from ALSoundExtractor. This inheritance "hides" the process of subscribing your module to ALAudioDevice. So once you have created a C++ NAOqi module that inherits from ALSoundExtractor, you just need to implement the two callbacks processSound/processSoundRemote. Then, to "launch" your module, you have to "subscribe" to your newly created module (this can be done in a Python script or in Choregraphe):

```python
from naoqi import ALProxy

# From a standalone script, also pass the robot's IP and port to ALProxy.
yourproxy = ALProxy("YourModule")
yourproxy.subscribe("thenameyouwant")
```

You can find an example of such a module in AldebaranSDK/examples/src/soundbasedreaction.

How to compile the C++ examples in the NAO SDK:
1. Run cmake-gui.
2. Go into build/ and type make.
3. Go into bin/ and run the built example with ./ followed by its name.

DAY 5: Spent the day getting more familiar with the modules provided by the Aldebaran SDK, mainly the audio and vision modules ALAudioDevice and ALVisionRecognition. I wrote a Python script that tries to combine voice recognition and facial recognition. Basically, when the robot detects a face, he asks the user whether he/she would like to play a game. If the user answers yes, the script enters a while loop that asks several questions about geometry. Unfortunately, once the program enters the while loop it gets trapped, repeating the previously recognized word.
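One plausible cause of the loop repeating the last word (an assumption, not a confirmed diagnosis) is that the WordRecognized value stays in memory after it is read, so every pass through the loop sees the same word again. The sketch below consumes the value once and ignores low-confidence matches, assuming the [word_1, confidence_1, ..., word_n, confidence_n] layout that ALSpeechRecognition writes to that key. A plain dict stands in for ALMemory, and the 0.4 threshold is an arbitrary illustrative value.

```python
from typing import Dict, List, Optional, Sequence, Tuple


def best_word(word_recognized: Sequence, threshold: float = 0.4) -> Optional[str]:
    """Return the most confident word in a [word_1, conf_1, ..., word_n, conf_n]
    array, or None if the array is empty or that confidence is below threshold."""
    if len(word_recognized) < 2:
        return None
    pairs: List[Tuple[str, float]] = list(
        zip(word_recognized[0::2], word_recognized[1::2])
    )
    word, confidence = max(pairs, key=lambda pair: pair[1])
    return word if confidence >= threshold else None


def next_answer(memory: Dict[str, list], threshold: float = 0.4) -> Optional[str]:
    """Read WordRecognized once, then clear it so a stale word is not reused.

    `memory` is a plain dict standing in for ALMemory (hypothetical setup).
    """
    value = memory.get("WordRecognized", [])
    memory["WordRecognized"] = []  # mark the current result as consumed
    return best_word(value, threshold)


# Simulated loop: the engine writes a result once; the loop polls twice.
fake_memory = {"WordRecognized": ["yes", 0.62, "no", 0.10]}
print(next_answer(fake_memory))  # yes
print(next_answer(fake_memory))  # None (the old word is not repeated)
```

On the robot, the read and the reset would go through an ALMemory proxy (getData("WordRecognized") and insertData("WordRecognized", ...)) rather than a dict.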

WEEK 7: It has been decided that we are forgoing ROS for Gini's honors class and instead using the Aldebaran NAOqi API directly.

DAY 1: BROKER / MODULE / PROXY FAQ: understanding NAOqi and its remote procedure call (RPC) based architecture. You definitely need a basic understanding of the NAO software architecture. It allows you to execute code on the NAO after a request from any other machine on the network (which may be another NAO itself!). If you've done any client-server programming, you just need to get used to the slightly different terminology:
+ A Broker is nothing more than a process. Each broker has an IP address and a port associated with it, where you can call some exposed methods. Brokers can be nested (e.g. you create a module Foo that runs on your machine and has a parent Bar that runs on the NAO; your Foo can call Bar's methods very easily). The NAOqi broker runs on the NAO by default and exposes a whole lot of different methods for accessing the hardware.
+ A Module is a class that has something to do with the NAO. Modules live in brokers and implement whatever is exposed.
+ A Proxy exposes some module methods to the rest of the world through a broker: the equivalent of an interface in any old OO language.

TODO:
+ Check the web interface of modules and brokers! Which port was used: the broker's or 80?
+ Read the NAO documentation. The description of the architecture is mainly in the Red Documentation, section Robot Software.

DAY 2: This is a paper I had particular interest in: MultiModal Robots: http://aiweb.techfak.uni-bielefeld.de/files/lrec2012mmc.pdf In light of this paper, I started thinking about ways I could further develop my interactive game so that the robot interacts in a meaningful and intuitive way with the child user. I am beginning to incorporate a way for the robot to detect certain phrases from the user and react appropriately. For instance, the child may say "I don't understand that object," and the robot, acting as teacher, can give more information about the object. Another (extra) modality would be to incorporate emotional gestures during game play: for instance, if a child gets a question correct, the robot displays a happy gesture, such as a pump of the fist. I am also working on incorporating sound, which is extremely easy with the Choregraphe interface.
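The phrase-to-reaction idea can be sketched as a lookup table that pairs what the robot says with the gesture it plays. Everything here is hypothetical: the phrases, the replies, and the gesture names, which are placeholders for behaviors one would build in Choregraphe (such as the fist pump mentioned above), not built-in NAO behaviors.

```python
from typing import Dict, Optional, Tuple

# Hypothetical reaction table: recognized phrase -> (what to say, gesture to play).
# Gesture names are placeholders for Choregraphe behaviors, not NAO built-ins.
REACTIONS: Dict[str, Tuple[str, Optional[str]]] = {
    "i don't understand": ("Let me tell you more about it.", "explain_gesture"),
    "correct": ("Great job!", "happy_fist_pump"),
    "wrong": ("Not quite, let's try again.", "encouraging_nod"),
}
FALLBACK: Tuple[str, Optional[str]] = ("Can you say that again?", None)


def react(event: str, object_hint: str = "") -> Tuple[str, Optional[str]]:
    """Pick the speech and gesture for a recognized phrase or game event."""
    key = event.strip().lower()
    speech, gesture = REACTIONS.get(key, FALLBACK)
    if key == "i don't understand" and object_hint:
        # Acting as teacher: append extra information about the current object.
        speech = speech + " " + object_hint
    return speech, gesture


print(react("Correct"))  # ('Great job!', 'happy_fist_pump')
```

Keeping speech and gesture together in one entry makes each reaction multimodal by construction, and adding a new phrase is a one-line change to the table.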

DAY 3: Info about NAOqi from the users forum: Actually, naoqi is the program that runs on the robot to control almost everything. If you run it on your computer, it is like simulating a robot. NAOqi is composed of a lot of different modules (for controlling motion, video input, audio devices, etc.). For example, if you go to the blue doc, you will see the list of modules installed in naoqi. What you are trying to do (and succeeded at) is to create a new module. This module will be remote, because it is not running on the robot. The "--pip 127.0.0.1" option means that the module must connect to a naoqi at the address 127.0.0.1, which is your computer. If you want to use a real robot, you have to replace 127.0.0.1 with your robot's IP. The error you pointed out occurs when naoqi is not running: in that case your module can't connect to it and says "Details : connect failed ...". So now you have naoqi running and your new module connected, but you still have to call some function of this module (I forgot this step in my previous posts, I'm sorry). The easy way is to run "test_helloworld.py". Make sure that the IP in the file is 127.0.0.1, but I think that's the default. To run this Python script, you can for example run the command "python path-to-sdk/modules/src/examples/helloworld/test_helloworld.py". If you have problems running this script, you will find the mandatory steps in red doc/SDK/writing in python.

qiBuild documentation: http://www.aldebaran-robotics.com/documentation/qibuild/

DAY 4: To-do: + Test the module on the NAO + Test ROS on the NAO + Look at the sound module + Look at the vision module

DAY 5: ALSpeechRecognition. These notes are taken from the Aldebaran developer site: http://developer.aldebaran-robotics.com/doc/1-10/reddoc/audio_system/tuto_speech_reco.html

Excerpt from the users forum on the Aldebaran site: When speech recognition is launched, every time you pronounce a word the recognition engine tries to find the word in the list that best matches it. So if you pronounce a word that is not in the list, you could still get a recognized word even though it is not the word you pronounced. To know whether the recognized word is the 'good' word, you can check the confidence level. If it is low, there is little chance that the recognized word is right (that is the case in your example, where the confidence is 0.003). As explained in the red documentation, 'recognized words are sent to the NAOqi shared memory (at the 'WordRecognized' key) [...] The structure of the result placed in the shared memory is an array of this form: [(string) word_1, (float) confidence_1, ..., (string) word_n, (float) confidence_n] where word_i is the ith candidate for a recognized word and confidence_i the associated confidence. The size of the array varies over time.'

How to run a Python script using ALSpeechRecognition:
1. Create a proxy on the module.
2. Set the language.
3. Set the list of words (a .lxd vocabulary file).
4. Fire it up with subscribe.
5. Preempt (stop) it with unsubscribe.

```python
from naoqi import ALProxy

asr = ALProxy("ALSpeechRecognition", "0.0.0.0", 9559)  # asr = AL speech recognition; use your robot's IP
asr.setLanguage("English")
asr.loadVocabulary("/path/to/vocabulary.lxd")  # your own .lxd file
asr.subscribe("SpeechModule")    # start recognizing
# ... read results from the WordRecognized memory key ...
asr.unsubscribe("SpeechModule")  # stop
```

Neat ways to make the vocabulary more robust, using SAMPA phonetic transcriptions (http://www.phon.ucl.ac.uk/home/sampa/; English examples: http://www.phon.ucl.ac.uk/home/sampa/english.htm):

```
#!Language=English
#!FSG
<words>=alt("yes" "no" "hello" "goodbye" )alt;
<start>=alt(<words>)alt;
#!Transcriptions
hello h@l@U
hello h@ll@U
```

PERSONAL: + Swam in Lake Nokomis + Ate A LOT of junk food, particularly french fries. Happy hours in Mpls are ridiculous! + Watched To Rome With Love, which I enjoyed because it made Rome feel nostalgic despite my never having visited + Took epic bike rides + Went thrift store shopping

WEEK 8: DAY 1: For the past two days I've been running Python scripts from the interpreter but kept coming across this error:

```
Traceback (most recent call last):
  File "sayHello.py", line 2, in <module>
    from naoqi import ALProxy
  File "/home/karen/nao-1.12.3/naoqi-sdk-1.12.3-linux32/lib/naoqi.py", line 9, in <module>
    import _inaoqi
ImportError: libalcommon.so: cannot open shared object file: No such file or directory
```

As a hack I would just chmod +x the .so file, and that always worked. However, when I executed the Python script and then ran chmod on the .so file, I would still get the error. After much research I realized that I needed to set LD_LIBRARY_PATH in my bash file:

```bash
export LD_LIBRARY_PATH=/path/to/lib:$LD_LIBRARY_PATH
```

Speech Recognition: http://users.aldebaran-robotics.com/index.php?option=com_kunena&func=view&catid=68&id=3711&Itemid=29

Robot demo for middle school kids

DAY 2: Robot demo for middle school kids. I prepared a simple demo and questionnaire involving the emotions I developed in Choregraphe. The robot displayed a series of 8 different emotional behaviors, and the children identified each one by circling the correct emotion on the questionnaire.

DAY 3: Worked on robotics lab tutorial for Gini's class that explains how to run python scripts directly from the terminal onto the robot itself.

DAY 4: Did another Robot demo for the cute and inquisitive robot summer camp kids.

DAY 5: Kept working on finalizing the Python/NAOqi lab tutorial. It's surprisingly difficult, mainly because you have to note every single step. After re-reading, I kept realizing I was missing vital steps.

PERSONAL: + My boyfriend is in town, yay!, so much of the weekend was spent exploring more parts of Minneapolis + Went bowling, ate at fancy/divey restaurants, ate at a country club thanks to his parents' friends (not something I would do on my own) + Ate another amazing Vietnamese meal + Watched Chinese Take-Away + Walker Art Center, Weisman Art Museum, Eat Street + Lake Street + This is a sculpture garden up in Franconia

Compiling Experiment Results and Final Report

WEEK 9: DAY 1: Had to miss lunch with Maria et al. because I was sick. But this gave me time to read up on some papers while I had the energy, think about my final research project for school (either geocoding or NLP), and catch up on much-needed sleep.

DAY 2: Started compiling a list of references for my final paper. I'm attempting to get it done this week (or at least a draft) so that Maria can look it over, because she is leaving for Italy this Saturday.

DAY 3: Completed a very rough draft of my paper; the working title is "Seeing Signs: Pre-Adolescent Recognition of Social Body Language in Humanoid Robots". Here is the abstract: "The utilization of humanoid robots, such as Aldebaran's NAO, is expanding in scope, most prominently as companions in both therapeutic and educational environments, in order to improve the quality of human life. This paper focuses on emulating emotion and expressive behaviors in an anthropomorphic robot in order to foster meaningful and intuitive human-robot interaction, specifically with pre-adolescent children. We utilize non-verbal, full-body movements to model the six basic emotions and incorporate these emotions into an interactive and robust educational game. We present the design and experimental evaluation of the emotionally expressive humanoid robot during a socially interactive game of 'Charades'. We also lay the groundwork for an educational game that enables a child user to interact one-on-one with the robot and that incorporates various modalities, including the emotions previously mentioned. We then propose future methods and ideas to improve the human-robot interaction in the proposed educational game." I also found out that I will be presenting a poster at the Grace Hopper Conference, the first week of October, in Baltimore.

DAY 4: Fleshed out the rest of my paper, including writing about the 'Charades' game that measures the ability of pre-adolescents to identify emotions through physical body language, and writing the technical details of my 'Interactive Educational Game', which is still without a name.

DAY 5: Had a final lunch with Maria and Elizabeth; we dined on chocolate milkshakes, fries, and (veggie) burgers at Annie's Parlour. Maria gave me awesome feedback on my paper. I put on the final touches: graphs, images, results. I just have to add the references and proofread again and again.

PERSONAL: + Rented a car and drove up to the North Shore + Had the BEST food in St. Paul, which is a lot more charming than Minneapolis + Walked around Fort Snelling, which was like a dream + Had dinner and watched Radio Days (which has the best soundtrack) before I said goodbye to my boyfriend.

WEEK 10: Final week! This week was pretty much spent putting the finishing touches on my paper, proofreading the robot lab tutorial for Maria's fall honors class, selling my bike, trying to sell my air conditioner, and getting ready to head back to school. I didn't accomplish everything I set out to do at the beginning of the summer, but I believe these 10 weeks were time well spent. I leave knowing how to write scripts in Python, a language I really wanted to learn. I survived in a city I had never given much thought to, considering NY is the world to me. I swam underneath a waterfall, biked more than I ever have in my life, had wonderful food, learned that research takes time to formulate and implement, and got to go to Toronto for AAAI. I'm still not 100% convinced research is for me; last Friday I did a Skype interview for a developer position at a startup company in NY. Still, I am planning on at least applying, so that the option is there when I do decide there's a problem interesting enough to investigate for another 4 years. All in all, it was a positive experience that I would do again, but only if it were in NY, or somewhere close to the people I love and love to hang out with.