Weekly Journal

Weekly reports from the front.

Welcome to my weekly DREU journal. My journal entries contain a mix of my lab work and my experiences hanging out in Minneapolis.

Week 1: June 7-14

I arrived in Minneapolis on June 7th after a 20+ hour, two-day drive from Connecticut. I took Sunday to get settled into my new surroundings, which were very different from those I was used to. The city is many times larger and busier than my rural-suburban hometown and the university huge compared to my tiny school.

Some frustrations presented themselves early: after driving over 1200 miles, my car was now out of commission with a nasty engine oil leak. On Sunday I went on my first errand to buy some kitty litter to try and clean up the spill stains. However, I was also anxious to explore the area so I took my new cat litter for a walk in a nearby park. It turns out that kitty litter is heavy and summer weather in Minnesota hot, so I ended up getting lost and exhausted in a park on the Mississippi River wandering around with a bag of clay which I was planning to use to clean up not after a leaky cat but a leaky car. As far as first days in a new city go, I'd say it was pretty fun.

On Monday I started at the lab. There I met some of the other PhD students working with Prof. Gini as well as Alice, the other DREU student working on this project. I was given a few papers to read by the graduate student (Elizabeth Jensen) Alice and I would be working with. The project pertains to robot dispersion. You can read more about it in the Project section.

The rest of the week was spent installing necessary software and learning about the project in preparation for our future work. Installing new software is invariably frustrating but ultimately gratifying.

A huge difference I have noticed between this HUGE university and my school is the amount of bureaucracy one must pass through in order to have access to technological services of any and every kind. I understand why this is necessary, but waiting for authorizations from an unseen office isn't something I'm used to.

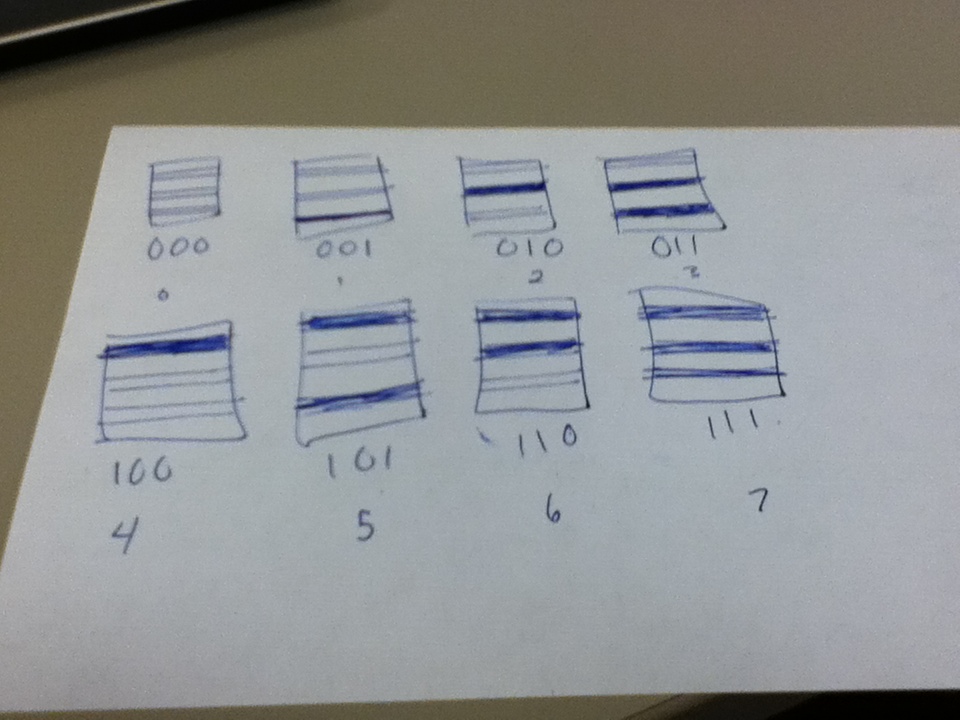

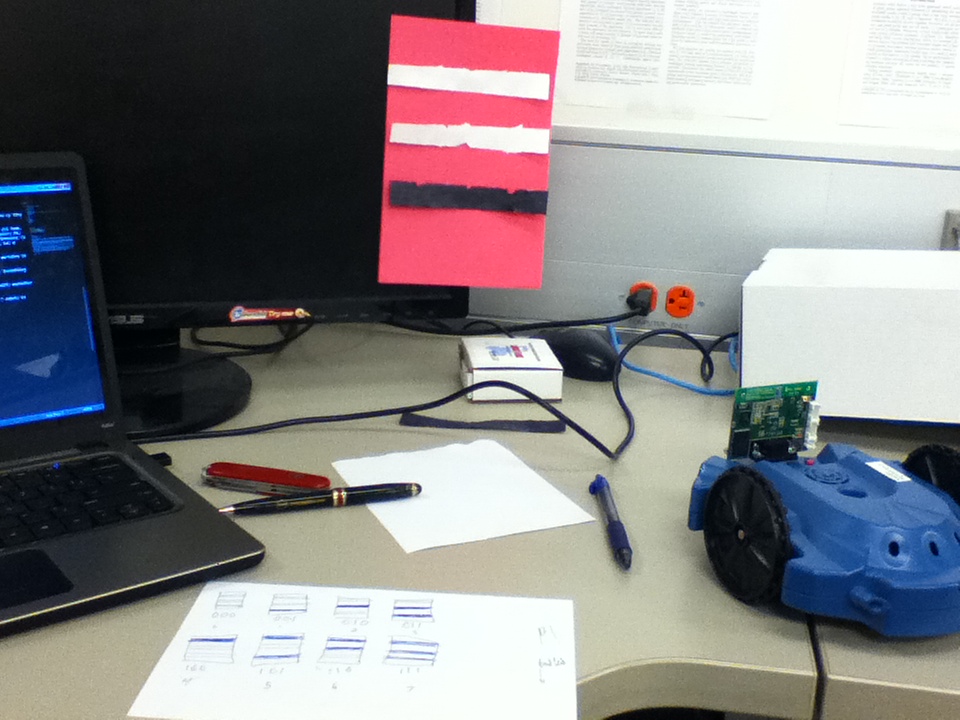

Friday we made our first concrete plan for the next direction of our project. In the simplified version of the algorithm, the robots will be following black lines instead of exploring an entire space, which will allow the simple Scribblers to navigate an area. We designed a scheme to allow the robots to identify one another by looking at the positions of black and white stripes on a colored background. The encoding looks like this:

This 3-bit encoding design allows 8 different values, which is enough because our plan is to start implementing the algorithm with only about 7 robots to keep things simple. In week 2 we will begin to work on getting the Scribblers to read these signs with their cameras.
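To make the arithmetic concrete, here is a minimal sketch of how three stripes can carry a robot ID. It is not the project's actual code: the function names, the choice of black = 1 and white = 0, and the most-significant-stripe-first ordering are all assumptions made for illustration.

```python
def encode_id(robot_id, bits=3):
    """Turn a robot ID into a list of stripe colors.

    Assumption for this sketch: black means 1, white means 0,
    and the first stripe is the most significant bit.
    """
    if not 0 <= robot_id < 2 ** bits:
        raise ValueError("robot_id does not fit in %d bits" % bits)
    return ["black" if (robot_id >> i) & 1 else "white"
            for i in reversed(range(bits))]


def decode_stripes(stripes):
    """Turn a list of stripe colors back into a robot ID."""
    value = 0
    for stripe in stripes:
        value = (value << 1) | (1 if stripe == "black" else 0)
    return value


if __name__ == "__main__":
    # Three stripes give 2**3 = 8 distinct IDs, enough for about 7 robots.
    for robot_id in range(8):
        print(robot_id, encode_id(robot_id))
```

With three stripes every ID from 0 to 7 survives a round trip through `encode_id` and `decode_stripes`, so a team of about 7 robots fits with one value to spare.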

Saturday all four of the DREU students at UMN took the light rail to the Mall of America, which is an insane cathedral in which we are free to worship our country's one true god of shameless capitalistic consumption. It was fun and I bought some sweet new socks! (Late August Addendum: One pair of these socks got a hole in it, boo hiss worst mall ever)

I ended my first week by finally checking out the used bookstore a block down from my house. I've sort of been avoiding it because of a terrible addiction to buying cheap used books, but I finally gave in so I would stop salivating every time I walked past the darn thing.

Week 2: June 15-21

On Sunday I meant to go to the festival at the Stone Arch Bridge, but my eternal inability to get out of bed in a timely manner meant I didn't leave the house until 6:30pm. I decided to learn how to ride the bus and took myself out to the movies at Uptown Theater. Finally getting out of Dinkytown made me realize that the entirety of Minneapolis is not, in fact, Dinkytown. I am looking forward to seeing what else there is to do these coming weeks.

This week in the lab I am working on getting the Scribblers to count black and white stripes on colored pieces of paper for our encoding scheme. The stripe identification was easier than I thought. The hard part came when trying to get the robots to search for three different colors. The robot's default color blobbing function works pretty well and searches for pink by default. There is another function that allows you to change what color the robot is looking for, but after a day of trying to get this function to look for yellow or green I gave up with little success. It didn't seem to matter what YUV arguments I was giving it, as it misbehaved every time. Alice wrote her own custom blobbing function that will look for whatever RGB range you want it to, so I think that we will be using this as we move ahead.
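For the curious, here is a rough sketch of the two ideas in plain Python: checking whether a pixel falls inside an RGB range, and counting black and white stripes along one row of pixels. This is not Alice's function or our Myro code. The function names and the brightness thresholds are made up for illustration, and a row of pixels is assumed to be a simple list of `(r, g, b)` tuples.

```python
def in_rgb_range(pixel, low, high):
    """True if every channel of pixel lies between low and high (inclusive)."""
    return all(lo <= c <= hi for c, lo, hi in zip(pixel, low, high))


def count_stripes(row, dark_max=60, light_min=200):
    """Count runs of black and runs of white pixels along one scan line.

    Pixels that are neither dark nor light enough are treated as the
    colored background. The thresholds are illustrative guesses.
    """
    counts = {"black": 0, "white": 0}
    previous = None
    for r, g, b in row:
        brightness = (r + g + b) / 3
        if brightness <= dark_max:
            label = "black"
        elif brightness >= light_min:
            label = "white"
        else:
            label = None  # background color
        # A new stripe starts whenever the label changes to black or white.
        if label is not None and label != previous:
            counts[label] += 1
        previous = label
    return counts


if __name__ == "__main__":
    yellow, black, white = (255, 255, 0), (0, 0, 0), (255, 255, 255)
    row = [yellow] * 3 + [black] * 2 + [white] * 2 + [black] * 2 + [yellow] * 3
    print(count_stripes(row))
    print(in_rgb_range((250, 240, 10), (200, 200, 0), (255, 255, 80)))
```

Real camera images are noisy, so a usable version would likely need to ignore very short runs and sample more than one row.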

I was excited to discover that one of the coolest things about living in a city is that some incredible musicians are playing pretty much every day of the week and the venues are just a bus ride away. On Monday I managed to catch a concert at First Avenue, despite the fact that I showed up 45 minutes after doors opened and without a ticket to a sold-out show (thanks, dude selling *slightly* marked-up tickets outside the venue!). Lucky for me the first opener hadn't even started. It was a fun concert and I am looking forward to attending more in the area.

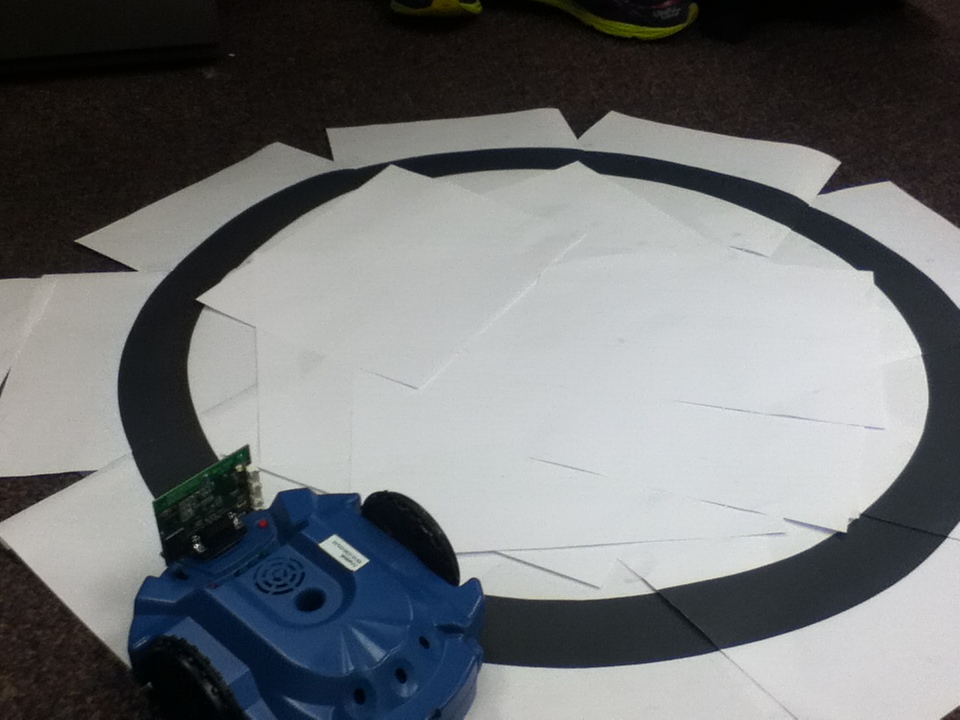

After we got the stripe counting and color blobbing working pretty decently, Alice and I worked to get the Scribblers to follow a black line with their underbelly sensors. This was very, very difficult. The sensors are fickle and the robot moves too fast to get accurate readings. As of now the program just has the robot inching its way across the line and stopping very frequently. I consulted some old code from a project a few years ago in which Maria and Elizabeth used the Scribbler's line following function. The code indicated that they had the Scribblers' sensors straddling the edge of the line, which was ultimately what Alice had been testing out and what seemed to be our best bet.
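The edge-straddling idea can be sketched as a tiny decision table. This is only an illustration under assumptions, not the old project code or ours: it assumes two downward-facing sensors that each report whether they see the line, that the robot follows the left edge of the line (left sensor off the line, right sensor on it), and that motor commands are a `(left_speed, right_speed)` pair. The name `steer` and the speed values are hypothetical.

```python
def steer(left_on_line, right_on_line, cruise=0.3, slow=0.1):
    """Pick wheel speeds for following the left edge of a black line.

    Returns a (left_speed, right_speed) pair. All values are
    illustrative; a real robot would need tuning.
    """
    if not left_on_line and right_on_line:
        # Straddling the edge as intended: drive straight.
        return (cruise, cruise)
    if left_on_line and right_on_line:
        # Drifted right, onto the line: slow the left wheel to turn left.
        return (slow, cruise)
    if not left_on_line and not right_on_line:
        # Drifted left, off the line: slow the right wheel to turn right.
        return (cruise, slow)
    # Left on, right off: the robot is probably facing the wrong way. Stop.
    return (0.0, 0.0)


if __name__ == "__main__":
    for reading in [(False, True), (True, True), (False, False), (True, False)]:
        print(reading, steer(*reading))
```

The appeal of following an edge rather than the middle of the line is that every reading says which way to correct, instead of only saying that the line has been lost.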

Over the weekend Alice and I went to Nicollet Mall, which is basically a long street downtown with lots of retail stores. There's a famous statue of a lady from an old T.V. show that I've never seen (I'm sure The Mary Tyler Moore Show is very entertaining but it is also what you might call "before my time").

At some point during the week I went to the St. Anthony's Main movie theater and saw "Finding Vivian Maier," which was super excellent. Nearby were the St. Anthony's Falls:

Minneapolis has some interesting landmarks!

Week 3: June 22-29

On Monday a few of the lab members participated in a summer camp activity with high school aged girls to teach them about using the Scribbler robots. It was fun to explain how to get the robots to do silly things with a group of people who hadn't used them before (and thus still had within them an idealism uncrushed by the Scribbler's horrendous line following faculties).

I also began looking into the ROS (Robot Operating System) simulation code for the algorithm. I spent some time getting familiar with ROS itself through online tutorials and then retrieved the project code using a version control system called CVS (all new things for me!). I began to examine the existing ROS code in preparation for porting it over to Calico so our little Scribblers can use it.

HOWEVER:

Towards the end of the week Elizabeth realized that the line following wasn't working because of the lag in the Bluetooth communication between the robot and the computer. The robot would get a sensor reading of the line beneath it and send this information back to the computer. The computer would then decide what command to give the robot based on the sensor data and send the motor command to the robot. By the time that command arrived the robot had already moved, so it wasn't reacting to accurate, up-to-date sensor information.
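A back-of-the-envelope calculation shows why the lag hurts so much. The sketch below is not a measurement: the speed, round-trip time, and tolerance are made-up numbers, and the function names are hypothetical. It only illustrates that the distance the robot covers before a command arrives is its speed multiplied by the round-trip time.

```python
def blind_distance_cm(speed_cm_per_s, round_trip_s):
    """Distance the robot travels between taking a reading and acting on it."""
    return speed_cm_per_s * round_trip_s


def max_speed_cm_per_s(tolerance_cm, round_trip_s):
    """Fastest speed that keeps the blind distance within the tolerance."""
    return tolerance_cm / round_trip_s


if __name__ == "__main__":
    # Illustrative numbers only, not measurements from the lab.
    speed = 10.0       # cm per second
    round_trip = 0.2   # seconds for sensor data out and motor command back
    tolerance = 0.5    # cm of drift we can afford before losing the edge

    print("blind distance (cm):", blind_distance_cm(speed, round_trip))
    print("max safe speed (cm/s):", max_speed_cm_per_s(tolerance, round_trip))
```

Under these assumed numbers the robot would have to crawl at a quarter of its speed to stay on the edge, which matches the inching and frequent stopping we saw. Running the control loop on the robot itself removes the round trip entirely.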

Elizabeth has suggested that we stop using Calico and Myro and find a way to install a line following procedure into the Scribbler firmware as they did a few years ago for another project. We are trying to connect to and control the robots without Calico and it is incredibly frustrating and not working at all--every attempt to import the bot library fails because of missing libraries. I imagine this would work better on Ubuntu but I could never get the Scribbler Bluetooth connection to work with it. The eventuality of having to port all of our existing Myro image processing code to a new, undocumented library is something I am not looking forward to. Things are looking grim. At least the weather is nice today.

Over the weekend I went to two museums: The Bell Museum of Natural History (taxidermied animals!) and the Minneapolis Institute of Arts. The wing of 19th-century American landscape paintings was my favorite.

Week 4: June 30-July 6

Our tentative solution to the problem of firmware is this: Try to change the robot firmware to how it was during the project a few years ago (with the custom line-follow protocol) and try to run the old code. If it works, re-use pieces of the old code and strip away unneeded bits. If it doesn't, find out if the problem is with the custom Bluetooth code. If we cannot fix the old code at this point, we might try to write our own protocol on top of the firmware byte-code. All is to be determined!

The rest of week 4 was spent becoming familiar with the old patrol code and installing dependencies we will need later once the firmware is set up. While we waited for the firmware I spent some time fooling around in C, since I've never tried C before.

Outside of work, I went to go see The Antlers play at Triple Rock Social Club on Tuesday. They were very good live and played a bunch of their new stuff off of the album that came out recently.

Friday was July 4th, and so Alice and I got burgers at Annie's Parlour to celebrate AMERICA. In the evening we met up with the other DREU students to watch some sweet fireworks being shot over the Mississippi River. We watched them from the historic Stone Arch Bridge, which was absolutely bursting with people. I guess cities necessarily mean huge crowds--which is a lot of fun for someone who enjoys people-watching.

Over the weekend I went to The Museum of Russian Art and saw some incredible paintings from the middle of the 20th century and learned all about "socialist realism". Furthermore, I ducked into a nearby Goodwill and found not one but TWO very cheap programming books (C++ and Scheme) and some other fun junk (like a vase made in Japan with a yellow bird on it). Thrift stores are one of my favorite things, and the Goodwill near the Russian art museum is probably the nicest Goodwill I have ever been in.

Aleksei P. Belykh: Young Woodcutters (1961-68)

Week 5: July 7-July 13

This week we helped with another summer camp day where we taught more high school aged girls how to program the Scribblers.

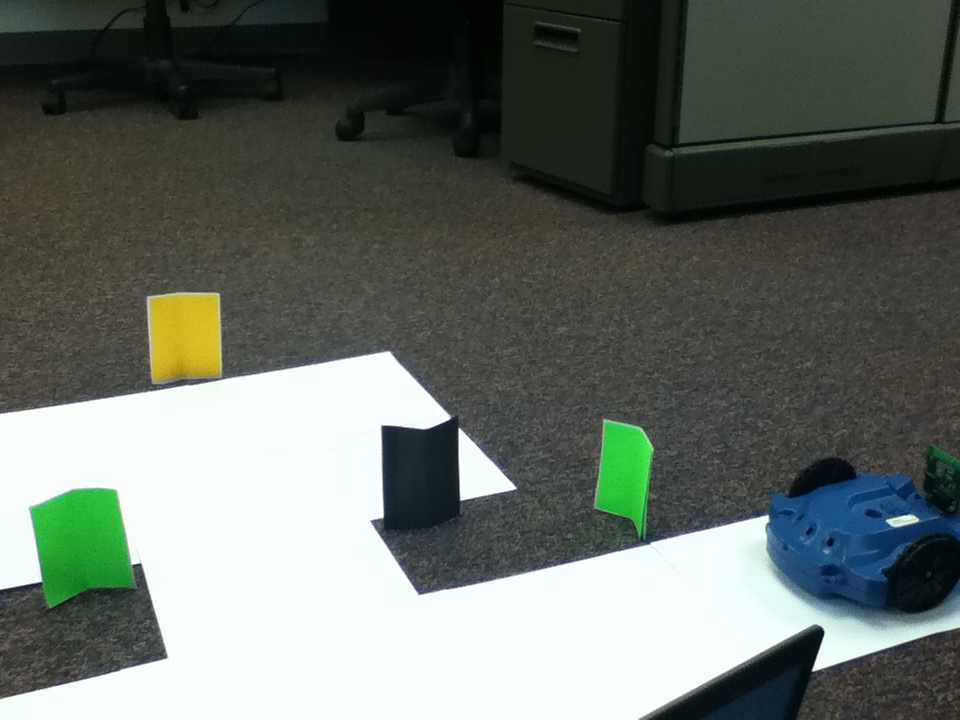

I spent some of week 5 trying out an alternate strategy for our robot navigation that would not require line following at all, just in case we can't get it to work once the firmware is ready. My idea was to have the robot travel down a "corridor" of white paper, so if the robot got off of the paper it would detect everything outside as being black. Thus the underbelly line sensor could be used to detect the "wall" of the corridor. The navigation actually worked pretty well, but getting it to work while also taking pictures was more difficult. I fooled around with this just to see if it perhaps worked better and will keep it in mind in case the line following doesn't work.

Saturday Alice and I went to see "Snowpiercer" at the St. Anthony's Main Theater. It was very violent and strange, but totally rad (by which I mean the movie was radical in every sense of the word, gosh talk about a film with an agenda). Sunday we took the light rail to Minnehaha Park and saw the falls and some awesome herons.