Week 1

May 28, 2013 to June 2, 2013

I arrived in Pennsylvania just before Memorial Day and moved into housing in Monroeville a couple of days before the internship began. I spent the first week of my internship getting acquainted with the campus, completing administrative tasks for in-processing, and settling into my office space in Smith Hall, which can be seen in the picture to the left.

I also spent time in the first week learning about the work that I would be doing and setting goals for the coming weeks. During meetings with Prof. Hodgins, we discussed the scope of the project and how to make it feasible to complete within ten weeks. Being an aspiring game developer with some experience with the Microsoft Kinect, I was very excited about starting work on a gestural interface and learning more about the Kinect and computer graphics in the process. I have very little animation experience, so I definitely look forward to gaining experience in that area as well. I am very enthusiastic about turning this interface into a game that other people can play, so that players can create gestures for a humanoid robot that an individual or small team might not come up with.

To begin the research portion, I got my hands on a few papers that described different types of puppeteering implementations for computer animation. They showed me different ways in which such projects have been approached, and I gathered some key insights to keep in mind for my implementation. I also began looking at the different ways crowdsourcing has been approached, from free online games to Amazon's Mechanical Turk. This should give me an idea of which approaches would work best for our system.

Week 2

June 3, 2013 to June 9, 2013

For the first few mornings of the second week, I spent some time in the motion capture (mocap) lab. I first learned about the hardware and software used in the lab and how to set some of it up. I also had the chance to work as a subject for a CMU graduate student's research, which let me see how motion capture works from that point of view. During this mocap session, I also helped with the calibration using a mocap wand. Calibration tells the system the volume of the space it is capturing and ensures that all cameras share the same coordinate system from their different vantage points, which is essential for combining their views. To the right, you can see a picture of me suited up for one of the mocap sessions that I participated in. After these sessions, I sat down and learned how to clean the data after a capture. This included labeling the recorded marker points to link them to predefined locations on the suit and filling gaps in the data. After a tutorial, I was able to clean up a couple of takes from my first mocap session and get a feel for how the software works. This should help me later in the implementation, when I need to search these types of files.

During my time away from the mocap lab, I spent time learning about the database and reading papers that describe efficient ways of searching a mocap database for animation purposes. I also started setting up the Kinect and considering which library would be best for capturing Kinect data. I hope to complete some experiments capturing different types of motion with the Kinect very soon, to find a suitable approach for the interface that I am working to create.

Week 3

June 10, 2013 to June 16, 2013

This week marked the beginning of the implementation phase of this project. After wrapping up the reading that I set out to do last week, I had a general idea of which approaches to searching a mocap database are successful. Next, I needed to find a good approach for the tracking portion with the Kinect. I looked into methods other developers were using to implement finger tracking with a Kinect, and I auditioned different libraries to determine which would be best to move forward with. Because this application is intended for crowdsourcing, I was determined to find the most user-friendly solution that I could. A major decision was whether to distribute the code as a downloadable application or as something browser-based. I looked at many browser-based options for the Kinect and found that there was really no way around the user having to at least download the drivers for the Kinect. Now I need to decide whether that means I should make the entire application downloadable, or whether a browser-based app is still a good idea even if the user has to download the drivers. I then looked at some good ways to create applications that a user could simply download and narrowed those options down as well. In the end, I am left with two good options, one browser-based and one downloadable.

I dug through many different libraries that people have created to allow the Kinect to be used through the internet. Most of them would have required an end user to go through a complicated download process. I was prepared to try to bundle some of these options into something easier for a non-programmer to navigate. I was trying to avoid requiring users to download anything, for fear that it would turn them off to using the app. At this point, though, I started to accept that there would be no way around it. I did, however, find a reasonable in-browser option called Zigfu. It is a browser plugin that allows users to play browser-based Kinect games, and it offers an easy way to download the plugin and, if needed, the drivers. Zigfu is only available for Windows and Mac, but since many computer users run one of those, I still see this as a sizable audience. The only major drawback that I see at this point to a browser-based game is that the mocap viewer may be more difficult for me to implement. I was able to find a few readily available mocap viewers written in Java that I could likely use whether or not I build a browser-based app. However, the implementation will likely be more straightforward in a standalone Java app.

For the downloadable application, I was very attracted to the Processing IDE because it lets a developer run in Java mode and export pre-built applications that a user can download, double-click, and run. I tried a variety of sample programs with increasing levels of dependencies to see where I ran into problems. Using the SimpleOpenNI library for Processing, I had great success getting the apps to work on two different computers running different versions of Mac OS X (Snow Leopard and Mountain Lion). However, when I tried the program on Ubuntu and on a different machine running Snow Leopard, the app did nothing. This confirmed my suspicion that with this method, the end user would still have to download some form of Kinect drivers for their machine. I then found what I thought was an awesome alternative: someone had created a library for OpenKinect (aka libfreenect), and I seemed to be able to run apps without installing additional dependencies. However, after reading further into the comments on the page and doing some testing of my own, I found that this wrapper only worked on 64-bit Mac OS X. One other developer made a version that worked for Linux. Unfortunately, those platforms still make up a very small portion of the potential users that I hope to reach with this app. As a result, among standalone apps, the SimpleOpenNI version was easily my favored method. What made SimpleOpenNI even more appealing was an already implemented FingerTracker add-on. It could make it much easier for me to spend more time turning the app into a game and working on the core functionality, like retrieving motions from the mocap database and displaying them in a mocap viewer.

I look forward to making a decision on Monday and moving forward with whichever implementation Prof. Hodgins and I find to be the best bet.

Week 4

June 17, 2013 to June 23, 2013

The early part of this week was spent finalizing the decision between a downloadable app and a browser-based app. For several reasons, I decided to implement the downloadable application. While Zigfu offered a great deal of convenience as an already packaged, downloadable browser plugin with Kinect drivers included, I felt that I had much more control over the implementation with the SimpleOpenNI library. Zigfu's library seemed to be higher-level, and it was not as apparent how I would implement the functionality that I am attempting to include. This was especially true for retrieving, modifying, and doing calculations with the depth data, and for using the JMocap viewer, which I plan to use for playback of the mocap data from the database.

I spent the majority of the week experimenting with different finger and blob tracking approaches. I found several libraries with implementations and a few papers that detailed the process. In the end, I found that I wanted more control of the data than the external libraries gave me. As a result, I began experimenting with my own approaches, building on some of the literature that I found online and using a blob detection library simply named BlobDetection, by V3GA. This library gave me the flexibility that I was looking for, and it works well with Processing. In addition, it was very easy to download and did not have any external dependencies. My goal is to let a user perform a motion with their hands, or even with a doll as a puppet, to move an on-screen character around, and then use those motions to find similar motions in CMU's mocap database. I began by implementing a simple depth filter that finds the minimum non-zero depth value and keeps only objects within a certain threshold of that value. To account for users with different hand sizes or preferences, I made the threshold adjustable. The picture above shows an example of the BlobDetection library being used with the depth segmentation implementation on the left-hand side and the real-world view from the Kinect on the right-hand side. The depth segmentation makes it much easier to find the blobs because it isolates the fingertips, which are what I am aiming to track. I can then track each blob between two frames based on its previous position, interpolating to its new position. By storing the positions for every frame, I can record each blob's trajectory and have easy access to the data when searching the mocap database to find and show motions like those tracked from the user's input.
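The depth filter described above can be sketched in a few lines of Java. This is a minimal illustration, not the code used in the project: it assumes the depth frame arrives as a row-major `int[]` of millimetre values with 0 meaning "no reading", and the names `DepthSegmenter`, `segmentNearest`, and `thresholdMm` are hypothetical.

```java
/** Hypothetical helper: keeps only the pixels closest to the sensor. */
class DepthSegmenter {
    /**
     * Returns a mask that is true for pixels whose depth lies within
     * thresholdMm of the nearest non-zero depth in the frame.
     * A depth of 0 is treated as "no reading" and is always excluded.
     */
    static boolean[] segmentNearest(int[] depthMm, int thresholdMm) {
        // Pass 1: find the minimum non-zero depth (the closest object).
        int min = Integer.MAX_VALUE;
        for (int d : depthMm) {
            if (d > 0 && d < min) {
                min = d;
            }
        }
        boolean[] mask = new boolean[depthMm.length];
        if (min == Integer.MAX_VALUE) {
            return mask; // no valid depth readings in this frame
        }
        // Pass 2: keep everything within the adjustable threshold of that minimum.
        int cutoff = min + thresholdMm;
        for (int i = 0; i < depthMm.length; i++) {
            int d = depthMm[i];
            mask[i] = d > 0 && d <= cutoff;
        }
        return mask;
    }
}
```

Exposing `thresholdMm` as a parameter is what makes the filter adjustable for different hand sizes; the resulting mask is what a blob detector would then scan.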

Week 5

June 24, 2013 to June 30, 2013

This week, I put a great deal of time into iterating on each component of the project. First, to make more progress with the tracking, I conducted a small user study in which I had a few family members act out gestures for motions that can be found in the CMU mocap database. I had them approach the gestures in two different ways. In the first, they used only the fingers of one hand to perform a motion. In the second, they used two hands to act as either hands or feet (or both), depending on the motion type.

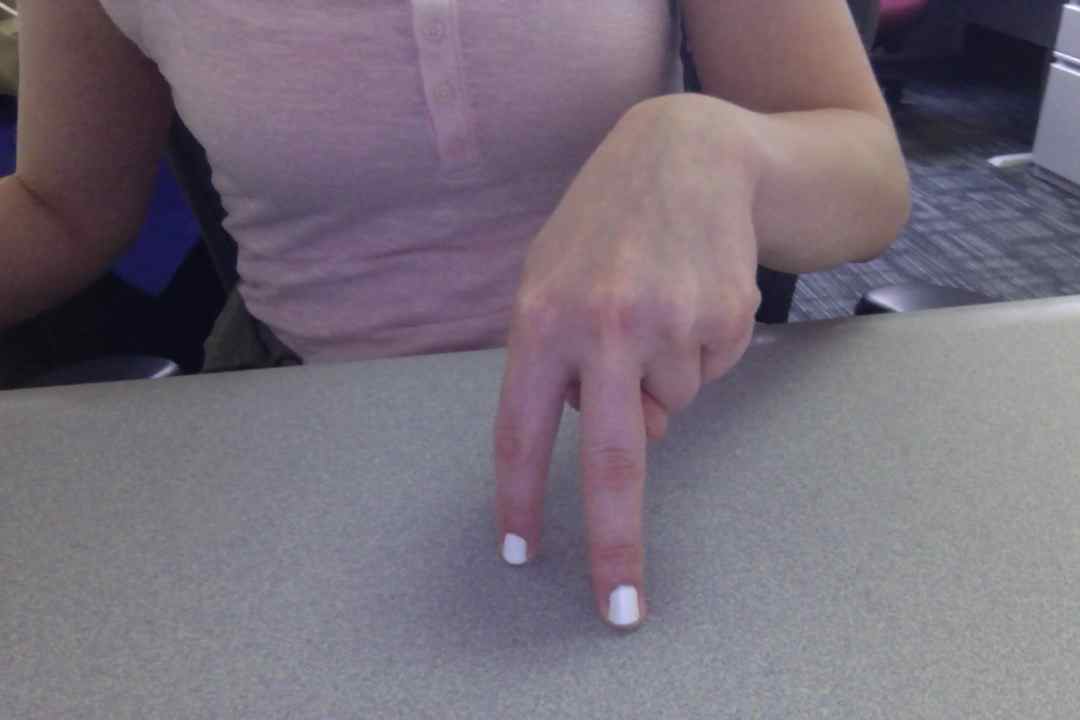

The picture to the left shows an example of acting out a walking motion using two fingers as feet. The picture on the right shows what acting out a walking motion with both hands as feet may look like.

I obtained some excellent data from the user studies, though I do hope to survey a few more people from different age groups. Among the people that I have surveyed so far, there seemed to be a unanimous preference for using both hands over using the fingers of one hand. Another thing that I did not expect was that the subjects appeared to prefer some sort of anchor for certain actions. They each seemed most comfortable using the surface in front of them when performing walking, running, and other types of foot motions. Having this as a constraint will likely be helpful for calibration techniques, and I am hoping that it will help the system determine whether a user is performing a foot motion or a hand motion. I am now ready to make more progress with the tracking portion of the project and will likely start implementing it so that the system tracks hands rather than fingertips.

For the other components, I have started designing the graphical user interface (GUI) for the game and have begun thinking about how the game will flow and how the user will earn points and levels. I have also started brainstorming modifications to the JMocap viewer that would let it fit into the GUI and make it more game-like. During one of my meetings with Prof. Hodgins this week, we agreed that some changes could make the display more user-friendly. We also discussed using motions from the database to have the character perform different motions on the screen until the user gives a gesture. This would keep the game moving and might even give the user ideas of things to try. I plan to have a prototype GUI put together by the end of next week so that I can start adding components into the full implementation as they are completed. I will also start tackling the part in which I use the captured data from the user to find similar motions in the mocap database. To get started on this portion, I plan to become much more familiar with the .bvh file format and to review a couple of the papers that I have read on implementing motion graphs. Getting the motion graphs started will be good progress toward beginning this part of the project.

Week 6

July 1, 2013 to July 7, 2013

This week was a little short, but I still managed to get quite a bit done. I started the week by really digging into the JMocap code. My goal was to modify it so that I could interface with it like a library rather than the standalone program it was released as. It took a bit longer than I had hoped, mostly because I was testing after each change. That way, if I broke the program, I would know right away what I did and could remedy it. Eventually, I was able to strip the code down and structure it so that I can integrate it into the GUI that I will be using for the game. Going through the BVH reader in the JMocap program also gave me some understanding of the structure of the BVH file format, which I will need to know very well as I begin the motion graph implementation.

With the Fourth of July holiday, I got a chance to go home to Albuquerque for a few days to celebrate the holiday and a few summer birthdays, including my own. It was a nice chance to recharge, but it also gave me the opportunity to get a few more user studies done for the gestural interface. This gave me a wide age range to observe and a really good idea of the types of motions that I should be aiming to look for. For many of the movements, I noticed a pattern. For motions like running, walking, forward jumping, and vertical jumping, all of the subjects did the same basic gesture, whether with fingers only or with two hands. However, for punching and forward dribbling, the movements were fairly similar, but some subjects were more creative, especially when using fingers. The one motion that really stumped people was a cartwheel. The youngest subject, a seven-year-old, did a neat wrist-turning movement. However, many of the adults almost seemed to get tangled in their own hands. This showed me that I will have to think carefully about these physical limitations and design gestures that are intuitive and also physically easy for everyone to act out. Another thing that I noticed was how people positioned their hands during the two-handed movements. Everyone preferred to have their hands touching the table when acting out foot motions (walking, running, etc.). Some people preferred to keep their hands in fists when acting out motions like walking or running, while others kept their hands flat on the table. The subjects did seem to prefer using two hands over just using fingers, especially for motions like dribbling and punching, as they felt that they had more flexibility that way. For this round of user studies, I recorded the gestures with the Kinect as the camera so I could see the depth data at the same time.
One thing that really stuck out was how much easier it was to see the hand when it was kept in a fist or angled to some extent, rather than flat on the table. This is a data point I will certainly keep in mind as I finalize the hand tracking implementation and determine which types of gestures the end user will be encouraged to use.

Week 7

July 8, 2013 to July 14, 2013

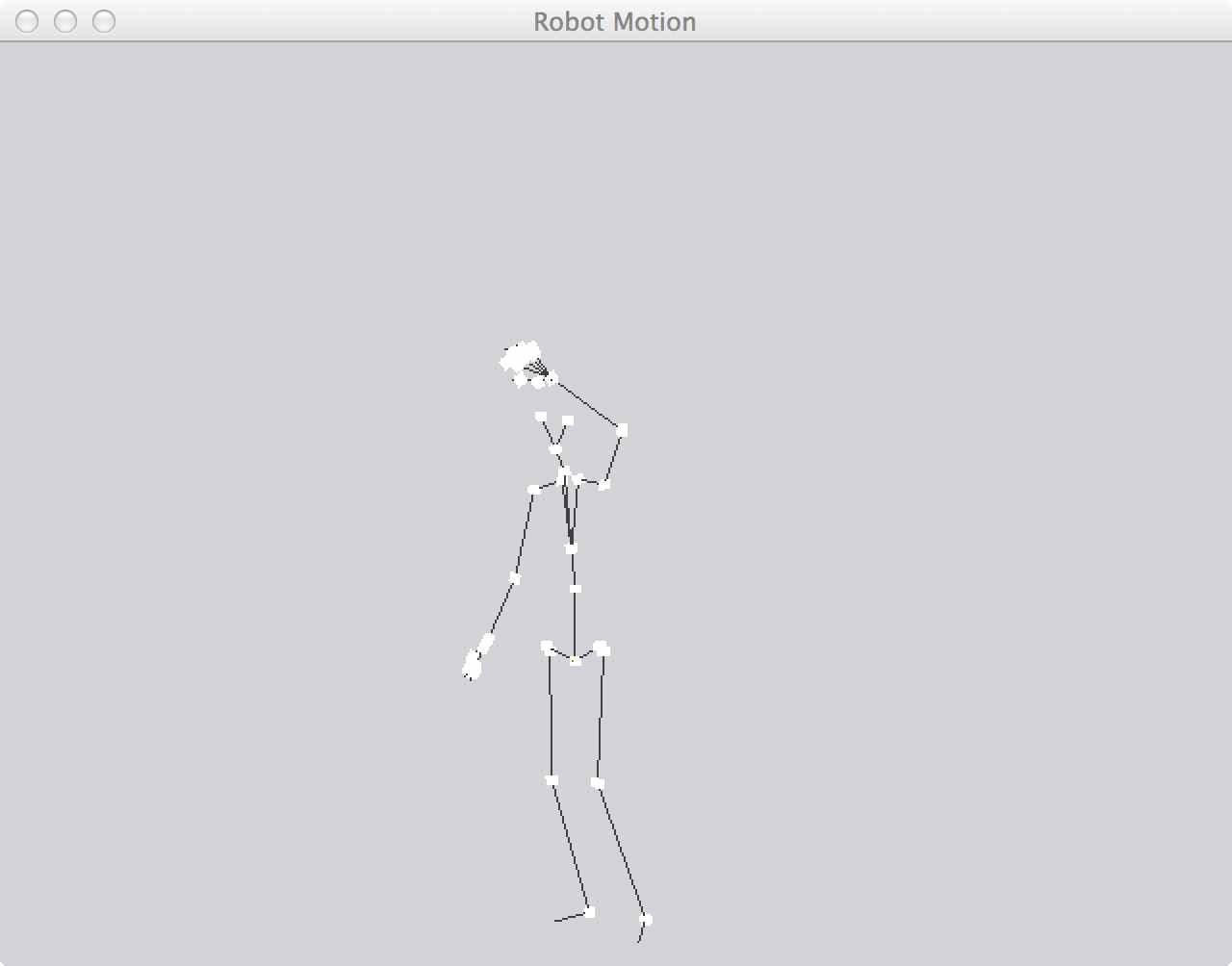

Throughout this week, I have been able to make some progress on all components of the project. I spent some time wrapping up work on the JMocap library and setting up the GUI so that the motion rendered in it would be the main focal point on the screen. The picture to the right shows the current version of the mocap viewer, ready to have the remainder of the game GUI built around it. I also made some progress with the hand tracking, though I have been going back and forth a lot on how to implement it. With the BlobDetection library, I am able to track short movements, but movements that are quick or that leave the field of view can cause the registered blob location to get off track. In addition, some small movements do not move the detected center of the blob at all, which also causes problems in tracking the trajectory of that point. My main focus now is making the tracking implementation more robust by considering both the center and the edges of the blob. I may also try other blob tracking methods alongside this one to strengthen the results.
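One simple way to keep a tracked blob from jumping off course is nearest-neighbour matching with a distance gate. The sketch below is hypothetical (`BlobTrack` and `maxJump` are not from the project or from the BlobDetection library): it assumes blob centroids are already available for each frame, follows the candidate closest to the last known position, and refuses to follow anything that moved farther than `maxJump` pixels in one frame, repeating the last position instead.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical single-blob tracker using gated nearest-neighbour matching. */
class BlobTrack {
    private final List<float[]> trajectory = new ArrayList<>(); // one (x, y) per frame
    private final float maxJump; // largest plausible per-frame move, in pixels

    BlobTrack(float startX, float startY, float maxJump) {
        trajectory.add(new float[] {startX, startY});
        this.maxJump = maxJump;
    }

    /**
     * Appends the candidate centroid nearest to the last known position.
     * If nothing lies within maxJump (e.g. a very fast move, or the hand left
     * the frame), the last position is repeated rather than jumping to an
     * unrelated blob. Returns true if a candidate was accepted.
     */
    boolean update(List<float[]> candidates) {
        float[] prev = trajectory.get(trajectory.size() - 1);
        float[] best = null;
        float bestDistSq = maxJump * maxJump; // gate: anything farther is ignored
        for (float[] c : candidates) {
            float dx = c[0] - prev[0];
            float dy = c[1] - prev[1];
            float distSq = dx * dx + dy * dy;
            if (distSq <= bestDistSq) {
                bestDistSq = distSq;
                best = c;
            }
        }
        trajectory.add(best != null ? best.clone() : prev.clone());
        return best != null;
    }

    List<float[]> trajectory() {
        return trajectory;
    }
}
```

With two hands, two independent `BlobTrack` instances could in principle claim the same candidate; a fuller version would assign candidates to tracks jointly.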

Toward the end of the week, I spent most of my time getting further acquainted with the BVH file format and how to work with it. The modifications that I made to the JMocap library helped with this, but I also read documentation of the file format to get an even better idea of what all of those numbers in the bottom half of the file meant. The first part of the file is the hierarchy section, which was relatively intuitive to learn. It lists each joint of the figure on screen along with its offset from its parent joint and its channels; the root joint is usually the hips. The channels name the values stored in the motion section at the bottom of the file: the rotation of each joint about the x, y, and z axes, plus the position of the root. Joints at the end of a chain also have an "End Site", whose offset gives the length and direction of the last bone in that chain. Each channel in the hierarchy section corresponds to one number on each line of the motion section, and each line of the motion section corresponds to one frame of motion. Once I understood how the hierarchy section determines the layout of the motion section, figuring out how to parse the file became much simpler. I was then able to add functionality to the program to automatically load BVH files and send them to the parser to be read and separated into frames. The program can also organize the hierarchy portion of the file. I am hoping that this will help with my motion graph implementation, which links these files together, and with the gestural part of the interface. Next week, I plan to spend most of my time tying together the components of the program and getting it to recognize gestures performed in front of the Kinect.
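The relationship between the two sections can be illustrated with a stripped-down parser. This Java sketch is not the JMocap BVH reader, and `BvhSketch` and its fields are hypothetical names. It only sums the `CHANNELS` counts from the hierarchy and then checks that every motion line carries exactly that many numbers; offsets and the joint tree itself are ignored.

```java
import java.util.ArrayList;
import java.util.List;

/** Minimal BVH reader sketch: sums channels in HIERARCHY, splits MOTION into frames. */
class BvhSketch {
    final List<String> joints = new ArrayList<>();   // ROOT and JOINT names, in file order
    int channelCount = 0;                            // total channels over all joints
    int declaredFrames = 0;                          // value of the "Frames:" line
    double frameTime = 0;                            // seconds per frame
    final List<double[]> frames = new ArrayList<>(); // one array per motion line

    static BvhSketch parse(String text) {
        BvhSketch bvh = new BvhSketch();
        boolean inMotion = false;
        for (String line : text.split("\\r?\\n")) {
            String t = line.trim();
            if (t.isEmpty()) continue;
            String[] tok = t.split("\\s+");
            if (!inMotion) {
                switch (tok[0]) {
                    case "ROOT":
                    case "JOINT":
                        bvh.joints.add(tok[1]);
                        break;
                    case "CHANNELS":
                        // e.g. "CHANNELS 3 Zrotation Xrotation Yrotation"
                        bvh.channelCount += Integer.parseInt(tok[1]);
                        break;
                    case "MOTION":
                        inMotion = true;
                        break;
                    default:
                        // HIERARCHY, braces, OFFSET and End Site lines are not needed here
                        break;
                }
            } else if (t.startsWith("Frames:")) {
                bvh.declaredFrames = Integer.parseInt(tok[1]);
            } else if (t.startsWith("Frame Time:")) {
                bvh.frameTime = Double.parseDouble(tok[2]);
            } else {
                // One line per frame, one value per channel, in hierarchy order.
                if (tok.length != bvh.channelCount) {
                    throw new IllegalArgumentException(
                        "expected " + bvh.channelCount + " values, got " + tok.length);
                }
                double[] frame = new double[tok.length];
                for (int i = 0; i < tok.length; i++) {
                    frame[i] = Double.parseDouble(tok[i]);
                }
                bvh.frames.add(frame);
            }
        }
        return bvh;
    }
}
```

For a skeleton whose root has 6 channels and one child joint has 3, every motion line must hold 9 numbers, and the value at index k belongs to the k-th channel listed in the hierarchy.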

Week 8

July 15, 2013 to July 21, 2013

I met with Prof. Hodgins early in the week, and we discussed the outline of what is left for the project so that I can manage my time to have a good demo and paper when the ten weeks are over. We mostly went into detail about how to wrap up the hand tracking so that certain gestures can be recognized. I raised some concern about blobs being lost under certain conditions, but she reassured me that the instructions I give can constrain the user to a space that prevents this from happening. That made me feel much better about finishing the hand tracking and gesture recognition. To wrap up the gesture recognition part, I first went back through the videos of all of the user studies and tried to pick out five gestures for which every subject performed the same or very similar motions. I would then start by implementing recognition of these five, based on the shape and trajectory of the identified blob. This also gave me an opportunity to revisit my hand tracking implementation to make it more robust and tailor it to the gesture recognition part. After reviewing the user studies and doing some experiments of my own, I found that the hands tend to be most visible during actions when they are in a fist or a similar closed position. Because of that, I may make this one of the constraints for gesture detection.

The hand tracking part of the implementation has definitely been one of the harder parts of the project, and I have tried approaching it in many different ways. Libraries that I have found, like BlobDetection, have been good at finding blobs, but I have to implement the tracking part myself, and I spent some of this week going back and forth about whether to write my own implementation or modify the BlobDetection library. I did find a paper online that used the BlobDetection library and simply modified it to do the tracking. However, I'm still experimenting with this library and my own implementation of blob detection before making a decision. I am mostly torn between the notion that the code in the BlobDetection library may be more efficient or more robust and the thought that with my own implementation, I will know the code that I'm working with and can manipulate it to do what I need. That would also spare me from having to learn all of the code in another library, as I had to with JMocap. For my implementation, I followed the algorithm described in an image processing article. Both implementations share the same issue: many false-positive blobs are found in each frame. In both cases, I can address this by setting a threshold on blob size. With my own implementation, however, I may be able to do more than that by editing the labels in a second pass that considers a larger area of pixels than the article describes. Because the initial labeling is done alongside the depth segmentation, it did not add any time to the initial runtime, and even with a second pass, the runtime would still be linear in the number of pixels, which I believe matches that of the BlobDetection library. From there, both implementations have the same tracking problems to solve.
The main issue that I need to watch for is the size of the blobs changing between frames, which, if not handled carefully, could introduce erroneous points into the blob's trajectory.
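For reference, a textbook two-pass connected-component labeling with a size threshold looks roughly like the following. It is a sketch under stated assumptions rather than the algorithm from the article mentioned above: it uses 4-connectivity and a union-find table to record label equivalences in the first pass, while the second pass resolves those equivalences and discards regions smaller than `minSize` pixels (the false-positive filter). `TwoPassLabeler` is a hypothetical name.

```java
/** Hypothetical two-pass connected-component labeling over a binary mask. */
class TwoPassLabeler {
    /** Union-find root lookup with path halving. */
    private static int find(int[] parent, int x) {
        while (parent[x] != x) {
            parent[x] = parent[parent[x]];
            x = parent[x];
        }
        return x;
    }

    /**
     * Labels 4-connected foreground regions of mask (row-major, width w, height h).
     * Regions with fewer than minSize pixels become 0, like background.
     * Kept regions are numbered 1..k in order of first appearance.
     */
    static int[] label(boolean[] mask, int w, int h, int minSize) {
        int n = w * h;
        int[] labels = new int[n];
        int[] parent = new int[n + 1];
        int next = 1;
        // First pass: provisional labels from the left and upper neighbours.
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int i = y * w + x;
                if (!mask[i]) continue;
                int left = x > 0 ? labels[i - 1] : 0;
                int up = y > 0 ? labels[i - w] : 0;
                if (left == 0 && up == 0) {
                    parent[next] = next;
                    labels[i] = next++;
                } else if (left != 0 && up != 0) {
                    int a = find(parent, left);
                    int b = find(parent, up);
                    labels[i] = Math.min(a, b);
                    parent[Math.max(a, b)] = Math.min(a, b); // record equivalence
                } else {
                    labels[i] = Math.max(left, up); // the single non-zero neighbour
                }
            }
        }
        // Second pass: resolve equivalences and count pixels per region.
        int[] size = new int[next];
        for (int i = 0; i < n; i++) {
            if (labels[i] != 0) {
                labels[i] = find(parent, labels[i]);
                size[labels[i]]++;
            }
        }
        // Drop small regions and renumber the survivors.
        int[] remap = new int[next];
        int k = 0;
        for (int l = 1; l < next; l++) {
            if (size[l] >= minSize) remap[l] = ++k;
        }
        for (int i = 0; i < n; i++) {
            labels[i] = remap[labels[i]];
        }
        return labels;
    }
}
```

Each pass touches every pixel once, so apart from the near-constant union-find lookups the cost stays linear in the number of pixels.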

To make some progress on gesture recognition, I began by reading a few papers about how others have approached such implementations and Googling the subject to see if I found anything different there. The primary approach that I found was a hidden Markov model (HMM) used with the Viterbi algorithm: the hand movement is treated as a sequence of observations over time, and the model's probabilities are used to find the most likely sequence of hidden states that produced those observations. This makes the hand tracking portion of the project that much more important, because I need to make sure that the correct observations are being made based on the reported trajectory. I did a bit of work on designing my implementation of the HMM and plan to do some unit testing on this portion to ensure that it works correctly before linking it with the hand tracking. I am hoping that this will help in debugging both components. I am hoping to tie all of these parts of the project together early next week and finalize the implementation during the rest of the week and part of week ten. I have already begun outlining the paper and writing the parts that are unlikely to change over the next two weeks. I am hoping that putting a dent in the paper now will give me more time in these last two weeks to add more to the game element of the implementation and have a great demo in the end.
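To make the Viterbi step concrete, here is a minimal log-space implementation for an HMM with discrete observations. It assumes, for illustration only, that the hand trajectory has already been quantized into symbols such as movement directions; `ViterbiSketch` and its parameter names are hypothetical. Working with log probabilities avoids numerical underflow on long sequences.

```java
/** Hypothetical discrete-observation HMM decoded with the Viterbi algorithm (log space). */
class ViterbiSketch {
    /**
     * pi[i]: initial probability of state i; a[i][j]: transition i -> j;
     * b[i][k]: probability of emitting symbol k in state i; obs: observed symbols.
     * Returns the most likely hidden-state sequence.
     */
    static int[] decode(double[] pi, double[][] a, double[][] b, int[] obs) {
        int nStates = pi.length;
        int steps = obs.length;
        double[][] delta = new double[steps][nStates]; // best log-prob ending in each state
        int[][] back = new int[steps][nStates];        // predecessor on that best path
        for (int i = 0; i < nStates; i++) {
            delta[0][i] = Math.log(pi[i]) + Math.log(b[i][obs[0]]);
        }
        for (int t = 1; t < steps; t++) {
            for (int j = 0; j < nStates; j++) {
                double best = Double.NEGATIVE_INFINITY;
                int arg = 0;
                for (int i = 0; i < nStates; i++) {
                    double s = delta[t - 1][i] + Math.log(a[i][j]);
                    if (s > best) {
                        best = s;
                        arg = i;
                    }
                }
                delta[t][j] = best + Math.log(b[j][obs[t]]);
                back[t][j] = arg;
            }
        }
        // Pick the best final state, then follow back-pointers.
        int[] path = new int[steps];
        for (int i = 1; i < nStates; i++) {
            if (delta[steps - 1][i] > delta[steps - 1][path[steps - 1]]) path[steps - 1] = i;
        }
        for (int t = steps - 1; t > 0; t--) {
            path[t - 1] = back[t][path[t]];
        }
        return path;
    }
}
```

For example, with two "sticky" states that mostly emit symbols 0 and 1 respectively, the observations 0, 0, 1, 1 decode to the state sequence 0, 0, 1, 1.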

Week 9

July 22, 2013 to July 28, 2013

It is hard to believe that it has already been nine weeks! While I feel that I have accomplished a great deal while I have been here, the time has certainly flown by. I used the beginning of this week to really tackle and finish the blob tracking and gesture recognition. I opted to go with my own implementation of the blob tracking. This was easier said than done, and I eventually abandoned the algorithm mentioned in last week's post as well. I realized that I was having so much trouble deciding between the two approaches because both were more complicated than this system needed. I went back to the drawing board and approached the problem in a much simpler way. Because I only really needed to account for the size and location of each blob and not its shape, per se, I was able to do the detection with a sweep across the frame that finds the minimum and maximum extents of each blob. Based on the horizontal and vertical distance of a pixel from an existing blob, I could determine whether the pixel was part of that blob. By doing this and keeping only the two largest blobs in each frame, I had a good detection method working and was able to start tracking the blobs. I was happy to finally have it working well, though of course I wished I had approached the blob detection this way from the start, as it would have given me additional time to work on other features.
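The bounding-box approach described above might look something like this. It is a hypothetical reconstruction, not the project's code (`SweepBlobs`, `gap`, and `keep` are illustrative names): a single raster sweep assigns each foreground pixel to the first blob whose box, padded by `gap` pixels horizontally and vertically, contains it, otherwise starts a new blob, and finally the `keep` largest blobs by pixel count are returned.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Hypothetical bounding-box blob grouping: one raster sweep, keep the largest blobs. */
class SweepBlobs {
    static final class Blob {
        int minX, maxX, minY, maxY, pixels;

        Blob(int x, int y) {
            minX = maxX = x;
            minY = maxY = y;
            pixels = 1;
        }

        /** True if (x, y) lies within gap pixels of this blob's bounding box. */
        boolean near(int x, int y, int gap) {
            return x >= minX - gap && x <= maxX + gap && y >= minY - gap && y <= maxY + gap;
        }

        void add(int x, int y) {
            minX = Math.min(minX, x);
            maxX = Math.max(maxX, x);
            minY = Math.min(minY, y);
            maxY = Math.max(maxY, y);
            pixels++;
        }

        float centerX() { return (minX + maxX) / 2f; }
        float centerY() { return (minY + maxY) / 2f; }
    }

    static List<Blob> detect(boolean[] mask, int w, int h, int gap, int keep) {
        List<Blob> blobs = new ArrayList<>();
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                if (!mask[y * w + x]) continue;
                Blob owner = null;
                for (Blob b : blobs) {
                    if (b.near(x, y, gap)) {
                        owner = b;
                        break;
                    }
                }
                if (owner == null) blobs.add(new Blob(x, y));
                else owner.add(x, y);
            }
        }
        // Largest first, then keep only the requested number (two for two hands).
        blobs.sort(Comparator.comparingInt((Blob b) -> b.pixels).reversed());
        return new ArrayList<>(blobs.subList(0, Math.min(keep, blobs.size())));
    }
}
```

Because pixels join the first nearby blob, two boxes that grow toward each other are never merged; with two well-separated hands and constrained instructions, that simplification may be acceptable.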

I spent most of the remainder of the week learning about hidden Markov models and algorithms that can be used to train the system on real data. After reading a few papers detailing the process of implementing HMMs, I felt ready to start working on the implementation and training it. I started with a low number of states and only two gestures to see how accurate the current implementation was. After that, I iteratively increased both numbers to get better behavior. Having this part partially done gave me the opportunity to tie all components of the project together. It was a good feeling to see all parts of the pipeline working as they should and to see the on-screen avatar start jumping when I did the jump gesture. I also got quite a bit of the paper written during this week. So, I plan to spend this last week wrapping up the training, cleaning up the interface, and finishing the paper. If time permits, I would like to finish the motion graph to get better transitions between the clips that are played. If I do not have time to finish that part, what I have should be in good condition for someone else to take over. Either way, I feel happy with the implementation now, and I feel like I can get everything wrapped up well by the end of next week.
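One common way to turn trained HMMs into a gesture recognizer, assumed here rather than taken from the project, is to train one model per gesture and pick the model under which the observed sequence is most likely. The sketch below scores each model with the scaled forward algorithm; `GestureClassifier` is a hypothetical name, and training itself (for example with Baum-Welch) is left out.

```java
/** Hypothetical per-gesture HMM scoring with the scaled forward algorithm. */
class GestureClassifier {
    /** Log-likelihood of obs under a discrete HMM (pi, a, b), using per-step scaling. */
    static double logLikelihood(double[] pi, double[][] a, double[][] b, int[] obs) {
        int n = pi.length;
        double[] alpha = new double[n];
        for (int i = 0; i < n; i++) alpha[i] = pi[i] * b[i][obs[0]];
        double logL = normalize(alpha);
        for (int t = 1; t < obs.length; t++) {
            double[] next = new double[n];
            for (int j = 0; j < n; j++) {
                double s = 0;
                for (int i = 0; i < n; i++) s += alpha[i] * a[i][j];
                next[j] = s * b[j][obs[t]];
            }
            alpha = next;
            logL += normalize(alpha); // sum of log scale factors = log P(obs)
        }
        return logL;
    }

    /** Scales v to sum to 1 and returns the log of its original sum. */
    private static double normalize(double[] v) {
        double sum = 0;
        for (double x : v) sum += x;
        if (sum == 0) return Double.NEGATIVE_INFINITY; // sequence impossible under this model
        for (int i = 0; i < v.length; i++) v[i] /= sum;
        return Math.log(sum);
    }

    /** Index of the gesture model that best explains obs, or -1 if none can. */
    static int classify(double[][] pis, double[][][] as, double[][][] bs, int[] obs) {
        int best = -1;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (int g = 0; g < pis.length; g++) {
            double s = logLikelihood(pis[g], as[g], bs[g], obs);
            if (s > bestScore) {
                bestScore = s;
                best = g;
            }
        }
        return best;
    }
}
```

The returned index could then be mapped to a clip category, so that the jump model winning would trigger a jumping motion on screen.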

Week 10

July 29, 2013 to August 2, 2013

This final week started with finishing the remainder of the HMM training. The process took much longer than I initially expected, though I was able to automate part of it. It was very interesting to see the effects of changing the number of states and the sample sizes. Each model required many samples of its respective gesture, which also gave me a lot of data to test with. Having all components of the project put together by the end of last week was helpful, because once I started making progress with the HMM training, I felt more comfortable doing smaller tasks like cleaning up the GUI and adding little bits of extra functionality to it. It also gave me more time to wrap up the paper. I debated working more on the motion graph, since I had already completed the BVH file loader and parser. While it was not a fundamental component of the project, it would have given the implementation more fluid transitions between clips and could have made it easier to show multiple clips of the same type (e.g., running) when a gesture was made. However, I felt that it made more sense to spend the rest of the week getting more results from the HMM testing and cleaning up all of the components of the gestural interface. It was very important to me to leave behind a good foundation for someone else to work with in the future.

I am very happy with the end result of the project. This project was quite large compared to many of the projects that I have implemented before, and it offered many challenges. Each component of the project tested me in its own way, and I was able to get a sense of the amount and quality of work required for success in research. I really look forward to new challenges like this in graduate school and in the research that I will be conducting there. It was very rewarding to work with the Kinect to this extent and to familiarize myself with a piece of hardware that continues to evolve in the gaming industry. I think familiarity with this type of hardware is an important tool to have as an aspiring game developer. I certainly plan to do many more projects with this device and its successors, as motion capture is a part of graphics that has appealed to me more and more since such devices became popular and affordable.

It was a true privilege to have the opportunity to spend the summer at Carnegie Mellon University working for Dr. Jessica Hodgins. I still remember when I received the news that I would be spending the summer working here. I was overcome with excitement! Now that it is over, I can see how much I got out of it. It is really interesting to look back at the beginning of the summer and what some of my weaknesses were going into the internship. I think that I have definitely improved some of my weaker points. The CMU graphics lab is definitely among the best and I really enjoyed my experience here. Attending the weekly Graphics Lab meetings that took place during my stay really opened my eyes to the evolution of many different aspects of computer graphics. These meetings also helped me to identify parts of graphics research that I am most interested in. Prof. Hodgins was amazing to work for. She gave me a challenging project that offered me numerous new lessons to learn and tools to work with. I had a lot of control over the project, but she was always there to offer advice and steer me in the right direction if I got off course. I will really miss working here and I am truly grateful for this opportunity. I look forward to applying the skills that I picked up this summer in future endeavors.