Journal Entries

Here is where I kept track of everything I did on an almost daily basis. The entries are dated, with the most recent entry at the top.

Tuesday, July 30, 2013 7:29 AM

Update: For some reason, the video that I uploaded of my "Pre-presentation" is no longer working. I'm currently trying to find a fix for it. This is strange because it was fully functioning earlier this morning. I will continue to update as I fix it. Update: Please email me to request the sound file.

Tuesday, July 30, 2013 1:00 AM

Finally! My pre-presentation has finished compressing and is uploaded to YouTube. Sorry about the buzzing in the background. I was not able to get rid of all of the excess sound without cutting out my own voice. And I'm happy the file uploaded, because for some reason the video file now says that it is corrupted, but the YouTube video is working. So I will download the video from YouTube just so I can have a copy on my laptop. Please email me to request the video, and I am going to sleep!

Monday, July 29, 2013 4:02 PM

So, a couple of things since the last update. First, I recorded a 20-minute video of me speaking about what I did this summer. It is a way of practicing for my presentation on Friday. The problem is that I used the program Fraps to record it, so it has come out to be around 30 GB. I'm currently compressing it with Movie Maker, which has worked before. The downside is the amount of time that the compressing takes. So far it is about a fourth of the way done, and I started it again around 3 hours ago (I say "again" because the first time I tried to compress it, Movie Maker crashed). So, I assume that it will be done sometime tonight, and I will upload it to this blog as soon as it's done.

Today I talked to Prof. Remy about how my questions were asked and how it might be better to ask the subjects (not at this time but in the future) the same question on the difficulty of the tasks after the WHOLE experiment is over. This is because of something that occurred when some of the subjects completed the first few tests. They would give the first task a 9 out of 10 on the difficulty scale, and then once they finished the second task, they would want to give it a rating higher than 10, which of course they couldn't without skewing the results.

Another interesting event occurred when I was trying to set up an svn repository using code.google.com. When I tried to commit a file into the repository, I got this error: (404 Method Not Allowed) in response to MKACTIVITY. I used sites ranging from Stack Overflow to the Microsoft support site to find out why this problem was occurring. The common theme seemed to be that Google Chrome got this error and it had to do with http vs https. Until this problem is fixed, I've just been uploading the necessary files to Google Drive.

I also noted something today. One of the subjects that I tested was answering a question about what genre of games they play. Their answer was this: "I play driving games, but not the kind with a wheel, I use a controller. And I don't play driving games like Midnight Club but more like Need for Speed. So that kind of driving scheme." As someone who doesn't play driving games, I had no idea that there was a difference between Midnight Club and Need for Speed. So while I wait for these videos to compress (and pray that it doesn't crash again), I will go ahead and upload another subject's results here:

Name: Ash

Age: 19

Pre-Questions

1. On a scale of 1 - 5, how much do you consider yourself to be a "gamer"? 5 being the biggest gamer and 1 being not a gamer at all.

- 4

2. What type of gaming controllers are you most accustomed to using, if any? (Gamepad, Arcade Stick, Keyboard, Joystick, Free-Hand, Mobile Phone, None, Other)

- keyboard, freehand, mobile

3. What type of game genre(s) do you normally partake in? (Platform, FPS, Adventure, RTS, MOBA, Driving, Puzzle, Fighting, Strategy, Other)

- puzzle

4. Do you have any impairment that may prohibit you from navigating an object through an obstacle course? (sight / dexterity limitations)

-no

5. Have you seen the environments that I am about to ask you to navigate through any time before this test?

- no

Test 1:

Time (min:sec:milisec) - 05:07:02

Time off Track (min:sec:milisec) - 00:23:45

Constraints - None

Post-Test 1

1. On a scale of 1 - 10, how difficult was this test?

- 1

2. What parts in particular were difficult, if any?

-None

Test 2:

Time (min:sec:milisec) - 07:05:50

Time off Track (min:sec:milisec) - 03:29:67

Constraints - A visual obstruction between the rover and the task

Post-Test 2

1. On a scale of 1 - 10, how difficult was this test?

- 2

2. What parts in particular were difficult, if any?

-Backing up

Test 3:

Time (min:sec:milisec) - 04:52:02

Time off Track (min:sec:milisec) - 00:28:92

Constraints - A visual obstruction between the camera or EVA crew member position and the task

Post-Test 3:

1. On a scale of 1 - 10, how difficult was this test?

- 1

2. What parts in particular were difficult, if any?

- none

Test 4:

Time (min:sec:milisec) - 07:27:07

Time off Track (min:sec:milisec) - 05:49:29

Constraints - Both Constraints

Post-Test 4:

1. On a scale of 1 - 10, how difficult was this test?

- 5

2. What parts in particular were difficult, if any?

- There were too many objects for me to navigate around

Wednesday, July 24, 2013 8:29 PM

So today's update has two parts. The first part is a couple of changes to the project / next steps, and the second part is the results for another subject who completed the Path tests today.

First:

I have changed how the questions that I ask the subjects before each experiment affect the experiment. I decided that I won't be changing the results based on the pre-experiment questions. For one, there's no way to accurately measure how much to change the results. Also, changing the results at all would skew them. So, I will instead be using the questions as a way to compare the different types of people against the results they produced.

Also, tomorrow I will be recording a 20-minute oral presentation as practice for next Friday's presentations. I am to speak for 20 minutes and STOP when the 20 minutes are up.

Second:

Here is another subject's experiment results:

Name: Nicole C.

Age: 23

Pre-Questions

1. On a scale of 1 – 5, how much do you consider yourself to be a “gamer”? 5 being the biggest gamer and 1 being not a gamer at all.

-3

2. What type of gaming controllers are you most accustomed to using, if any? (Gamepad, Arcade Stick, Keyboard, Joystick, Free-Hand, Mobile Phone, None, Other)

-Keyboard

3. What type of game genre(s) do you normally partake in? (Platform, FPS, Adventure, RTS, MOBA, Driving, Puzzle, Fighting, Strategy, Other)

- Strategy, Platform

4. Do you have any impairment that may prohibit you from navigating an object through an obstacle course? (sight / dexterity limitations)

-None

5. Have you seen the environments that I am about to ask you to navigate through any time before this test?

-No

Test 1:

Time (min:sec:milisec) - 07:11:04

Time off Track (min:sec:milisec) - 00:02:03

Constraints - None

Post-Test 1

1. On a scale of 1 - 10, how difficult was this test?

- 7.5

2. What parts in particular were difficult, if any?

- The turns really threw me off

Test 2:

Time (min:sec:milisec) - 8:53:02

Time off Track (min:sec:milisec) - 3:31:07

Constraints - A visual obstruction between the rover and the task

Post-Test 2

1. On a scale of 1 - 10, how difficult was this test?

- 8

2. What parts in particular were difficult, if any?

- The walls- I didn't like straying from the path

Test 3:

Time (min:sec:milisec) - 6:22:07

Time off Track (min:sec:milisec) - 1:06:84

Constraints - A visual obstruction between the camera or EVA crew member position and the task

Post-Test 3

1. On a scale of 1 - 10, how difficult was this test?

- 8.5

2. What parts in particular were difficult, if any?

- The wall in the beginning

Test 4:

Time (min:sec:milisec) - 8:02:07

Time off Track (min:sec:milisec) - 4:01:37

Constraints - Both Constraints

Post-Test 4

1. On a scale of 1 - 10, how difficult was this test?

- 9

2. What parts in particular were difficult, if any?

- WALLS

Wednesday, July 24, 2013 11:47 AM

Today you are going to navigate a simulated robot along a pathway. Stay on the pathway as much as possible, only diverging from it if there is an obstacle prohibiting you from continuing. The obstacles in the environment that you are about to see range from small low rocks to boulders to walls. Navigate around these obstacles as quickly as possible and find your way back to the path. The test ends when your robot enters into the finish block. You have 15 minutes per test. Good Luck.

Name: Yerika Jimenez

Age: 20

Pre-Questions

1. On a scale of 1 - 5, how much do you consider yourself to be a "gamer"? 5 being the biggest gamer and 1 being not a gamer at all.

- 1

2. What type of gaming controllers are you most accustomed to using? (Gamepad, Arcade Stick, Keyboard, Joystick, Free-Hand, Mobile Phone, Other)

- keyboard, mobile phone

3. What type of game genre(s) do you normally partake in? (Platform, FPS, Adventure, RTS, MOBA, Driving, Puzzle, Fighting, Other)

- Platform, Driving, puzzle

4. Do you have any impairment that may prohibit you from navigating an object through an obstacle course? (sight / dexterity limitations)

- no

5. Have you seen the environments that I am about to ask you to navigate through any time before this test? (yes / no)

- no

Test 1:

Time (min:sec:milisec) - 6:05:03

Time off Track (min:sec:milisec) - 0:12:30

Constraints - None

Post-Test 1

1. On a scale of 1 - 10, how difficult was this test?

- 3

2. What parts in particular were difficult, if any?

- none

Test 2:

Time (min:sec:milisec) - 8:28:07

Time off Track (min:sec:milisec) - 0:02:07

Constraints - A visual obstruction between the rover and the task

Post-Test 2

1. On a scale of 1 - 10, how difficult was this test?

- 9

2. What parts in particular were difficult, if any?

- Walls and getting off the track

Test 3:

Time (min:sec:milisec) - 6:06:34

Time off Track (min:sec:milisec) - 0:39:45

Constraints - A visual obstruction between the camera or EVA crew member position and the task

Post-Test 3

1. On a scale of 1 - 10, how difficult was this test?

- 2

2. What parts in particular were difficult, if any?

- nothing

Test 4:

Time (min:sec:milisec) - 8:55:07

Time off Track (min:sec:milisec) - 4:16:03

Constraints - Both Constraints

Post-Test 4

1. On a scale of 1 - 10, how difficult was this test?

- 10

2. What parts in particular were difficult, if any?

- the most difficult part was finding my way back to the track
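To compare subjects, the logged times can be turned into seconds and into the share of each run spent off the path. Here is a minimal Python sketch of that, using the four runs above. It assumes the third field is hundredths of a second (it is always two digits in my logs); the function names are just for illustration.

```python
def to_seconds(stamp, subunits_per_second=100):
    """Convert a 'min:sec:sub' stamp such as '6:05:03' to seconds.

    Assumption: the third field counts hundredths of a second, because it is
    always written with two digits. Pass 1000 if it is true milliseconds.
    """
    minutes, seconds, sub = (int(part) for part in stamp.strip().split(":"))
    return minutes * 60 + seconds + sub / subunits_per_second


def off_track_share(total, off_track):
    """Fraction of the run (0.0 to 1.0) that was spent off the path."""
    return to_seconds(off_track) / to_seconds(total)


# Times copied from the four tests above: (total time, time off track).
runs = {
    "Test 1": ("6:05:03", "0:12:30"),
    "Test 2": ("8:28:07", "0:02:07"),
    "Test 3": ("6:06:34", "0:39:45"),
    "Test 4": ("8:55:07", "4:16:03"),
}

for name, (total, off) in runs.items():
    print(f"{name}: {off_track_share(total, off):.1%} of the run off track")
```

Looking at the share instead of the raw time makes it easier to compare a slow, careful subject with a fast one.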

Tuesday, July 23, 2013 7:39 AM

I didn't get as many tests done as I wanted to yesterday, so today I will finish the ones that I wanted to do. Also, I need to tweak the EVA POV a bit, because the way the controls currently work doesn't represent what I'm trying to simulate very well. I'm also having a problem with the repository continuously telling me to verify my email account. So, if that doesn't get sorted out, I will just find another repository. So that is basically what today is for.

Monday, July 22, 2013 3:26 PM

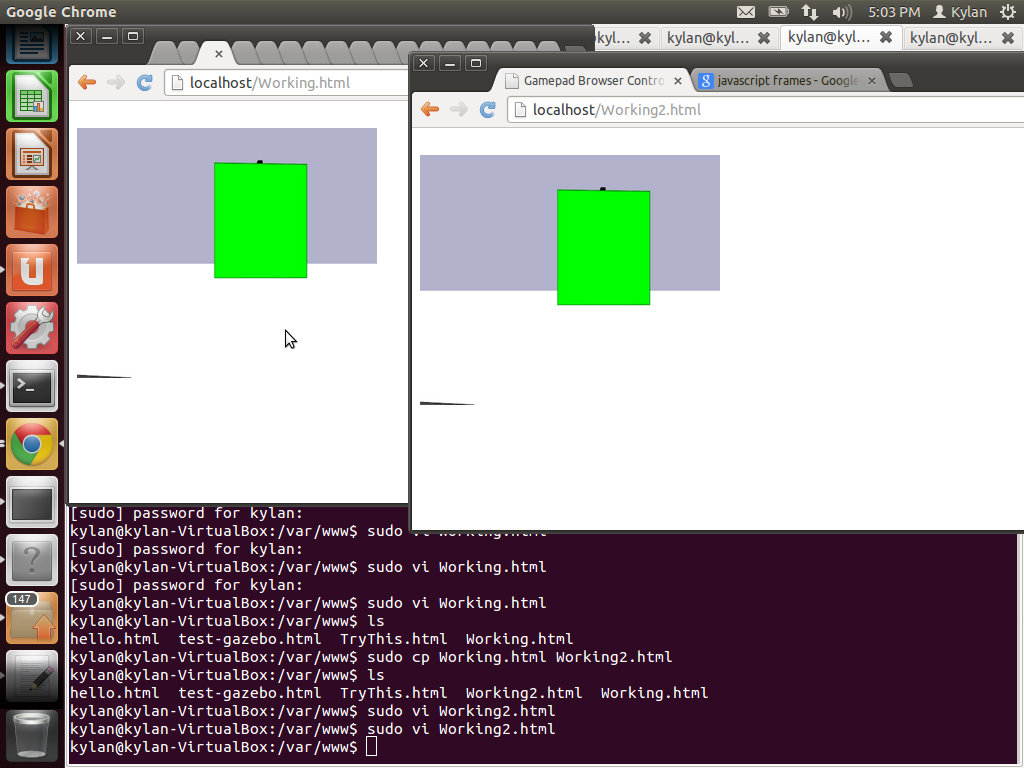

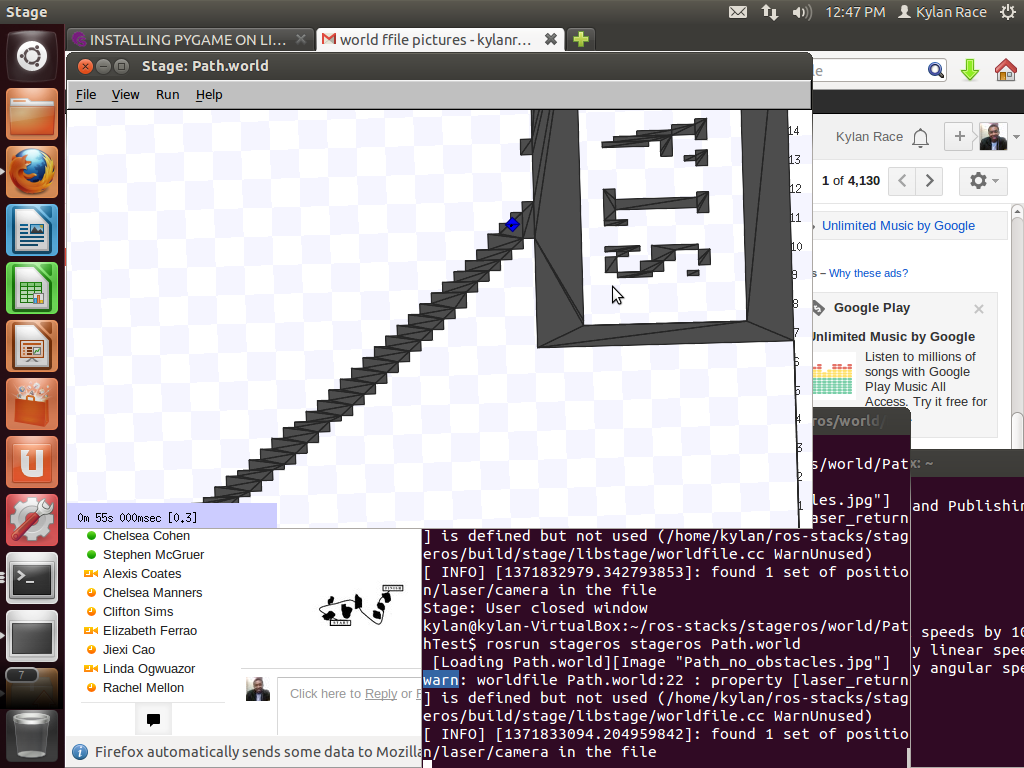

Update time! And quite an update it is.

So, for the past while, I have been working to get my simulations up and running inside of the web browser. This leads into one of the main points of the project, which was hosting it through the cloud. I started by looking into Apache, but it turned out that I wouldn't even need it, because at this point I just wanted to get the simulation working on my localhost.

I started with the On Board Camera POV, which only has one robot running, so it was the simpler of the two POVs that I was going to be implementing. It took a while to get the feed for the camera image because I was pointing it to the wrong port. The camera code itself was correct, apart from being pointed at the wrong place. Once that was fixed, I moved on to what I considered to be the more difficult aspect of the process, which was having the navigation controls work from the browser.

Getting the basic controls went well, but problems started emerging when I saw that the robot would only take the equivalent of "one step" at a time. If I held the up arrow key, instead of continuously going forward, it would stop after moving one step. I knew that I needed to reset the variable that held the inputs, but I had extra brackets inside of the code, so I kept putting the line that reset the variable in the wrong place. Since it was resetting at the wrong time, the commands were bleeding into each other. So if you pressed forward, then pressed left, instead of going "forward" and then "left," it would go diagonally. Eventually, I found the correct place and it worked.

Next I had to set up the EVA POV. This one was a bit more difficult because there were two robots inside the world, which meant that both had to be controlled at the same time. I mapped one of the robots to the directional keys and the other to W A S D. I first approached this by using the same HTML file and replicating the directional-key code for W A S D. I then opened up another ros_web_server and pointed it to an open port and the 2nd robot's cmd_vel. This turned out not to work, because there was already a function inside of ros_web_server to account for multiple robots (as pointed out to me by Prof. Remy). As soon as this was accounted for, the 2nd robot started responding to my commands. So, what I've done is open two different HTML files in two different windows. One handles the directional key controls while the other handles W A S D. Here is a picture of that:

The window on the right is being controlled by W A S D and the left the directional keys. So next up, I need to make sure that both windows can be controlled at the same time, and I must test people! I'm pretty excited right now. More will come later after I run some tests.
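For my own notes, here is one way to avoid both the "one step" problem and the commands bleeding into each other. This is a minimal Python sketch, not the JavaScript that actually runs in the browser: instead of resetting a shared input variable, it keeps a set of the keys that are currently held and rebuilds the velocity from zero on every tick. The key names, the `KeyState` class, and the `velocity_for` function are all made up for illustration.

```python
# Hypothetical key names; a real front end would translate its own key codes.
# Each binding is (forward, rotate); positive rotate means turning left.
ARROW_KEYS = {"up": (1, 0), "down": (-1, 0), "left": (0, 1), "right": (0, -1)}
WASD_KEYS = {"w": (1, 0), "s": (-1, 0), "a": (0, 1), "d": (0, -1)}


class KeyState:
    """Tracks which keys are held, updated by key-down and key-up events."""

    def __init__(self):
        self.held = set()

    def press(self, key):
        self.held.add(key)

    def release(self, key):
        self.held.discard(key)


def velocity_for(held_keys, bindings, speed=1.0, turn=1.0):
    """Return (linear, angular) for one robot from the keys held right now."""
    linear = 0.0   # rebuilt from zero on every tick,
    angular = 0.0  # so an old command can never carry over
    for key in held_keys:
        if key in bindings:
            forward, rotate = bindings[key]
            linear += forward * speed
            angular += rotate * turn
    return linear, angular


keys = KeyState()
keys.press("up")
print(velocity_for(keys.held, ARROW_KEYS))  # same answer every tick while held
keys.release("up")
keys.press("left")
keys.press("w")
print(velocity_for(keys.held, ARROW_KEYS))  # first robot: turning only
print(velocity_for(keys.held, WASD_KEYS))   # second robot: forward only
```

Because both robots read from the same set of held keys but use different bindings, the directional keys and W A S D can be handled in the same tick.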

Monday, July 15, 2013 7:23 AM

So just a quick update!

Like the calendar says, I want to really bear down on how I measure efficiency. I also got a better look at the topography of the MDRS landscape over the weekend. This means I can finally change the constraints to be more accurate, something that I've been wanting to do for some time. That is just some of what I'll be doing today. As I said, this is a "quick update," so more will come later.

Thursday, July 11, 2013 6:31 AM

So recently I got to look at Troy's (my roommate's) JavaScript. In the end, what I do with my simulated robots will be running inside of his template. The first great thing that I noticed was that the JavaScript that handles the arrow key presses is almost exactly like my Python code for controlling the robot. This "revelation" means a couple of things.

First, it adds another thing to the growing list of items that I am going to pay less attention to. That "thing" is continuously tweaking the control scheme for the robots. All I need is to be able to simulate all of the tasks as indicated, so anything more than that is extra. That even includes the problem that I ran into earlier with not being able to run the two controls at the same time. This allows me to focus on the more pertinent things.

The revelation also means that once I finally transfer everything that is my simulation to the cloud, running it should not be a problem because of how similar the JavaScript is.

JavaScript even has clear documentation for an on-key-held-down event, unlike Python. This will save a lot of searching time.

Tuesday, July 9, 2013 8:46 AM

So, a quick update before I get into today's work. Yesterday Prof. Remy watched the video of my presentation to the two "off-campus" people, and he pointed out that one of their questions may not be as "extra" as I thought it was. He sent me a script that would allow me to give voice commands and have the computer output what was said. I did, however, need to make sure that things could be taken out of the calendar before I got to adding more things to it.

This brings me to the Third Person Camera View test. So far, it seems that an accurate simulation of that test may not be possible. I have been able to get some results, but only when I skewed the parameters for the experiment. So if I cannot figure out how to simulate this today, I think that it may have to go on the chopping block.

Today I also found a new "notification" that I have to give the subjects before they begin: users cannot see if they crash. I am mentioning this because this exact thing happened to me. I thought that I still had room in a narrow walkway, but it turned out that I had crashed a while ago. This wasted time that I could have been using to complete the task.

Finally, I learned about another visual problem that I have to account for, and that's parallax. I had not even thought about it until yesterday. Just one more thing to simulate!

Tuesday, July 2, 2013 6:35 AM

Follow-up

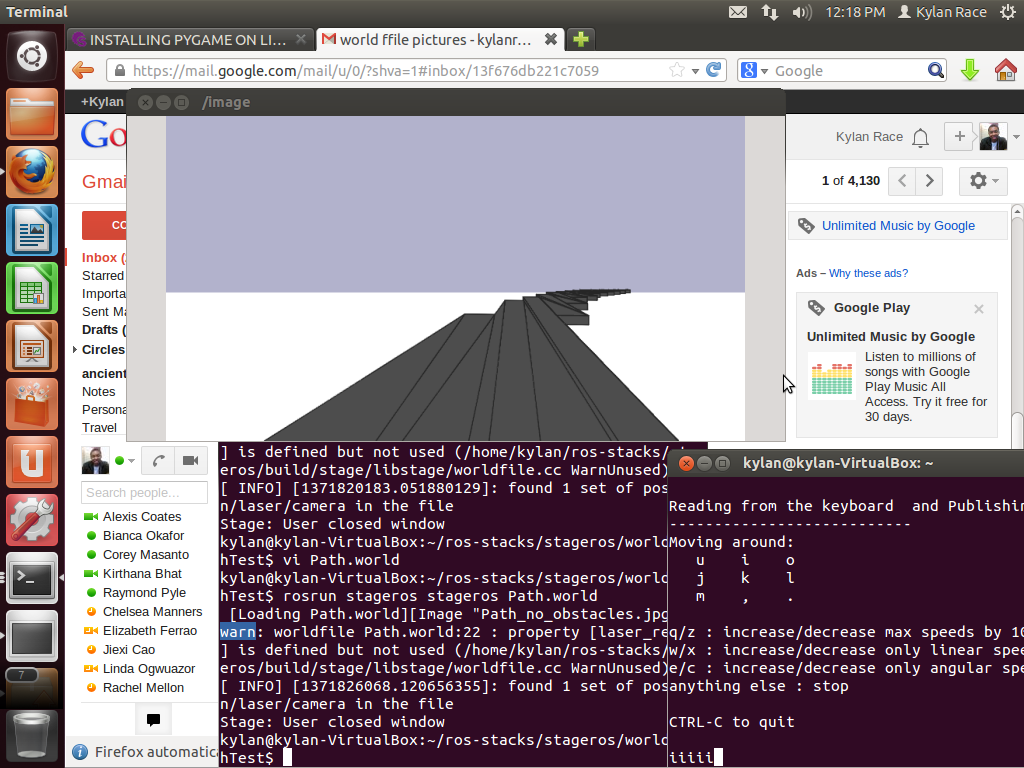

So like I said in the previous post, here are the results of the Path task with the OnBoard camera POV and all 4 constraints. If you would like to see the videos for this, please email me:

Task: Path

POV: OnBoard

Constraints: None

Speed: 20 Clicks (3.36374997466 6.72749994933)

Time: 3:35

Task: Path

POV: OnBoard

Constraints: A visual obstruction between the rover and the task

Speed: 20 Clicks (3.36374997466 6.72749994933)

Time: 5:12

Task: Path

POV: OnBoard

Constraints: A visual obstruction between the camera or EVA crew member position and the task

Speed: 20 Clicks (3.36374997466 6.72749994933)

Time: 3:43

Task: Path

POV: OnBoard

Constraints: Both visual obstructions

Speed: 20 Clicks (3.36374997466 6.72749994933)

Time: 5:01
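A note on the two numbers next to "20 Clicks": they are what you get if the linear speed starts at 0.5 and the turn rate starts at 1.0, and each one is raised by 10% per click for 20 clicks. The starting values and the 10% step are my guess (they are common defaults in keyboard teleop scripts), not something recorded in this entry. A small Python sketch of the arithmetic, with a made-up function name:

```python
def after_clicks(start, clicks, factor=1.1):
    """Apply `clicks` speed-up presses, each multiplying the value by `factor`.

    Assumption: every click scales the value by 10%; this is a guess that
    happens to reproduce the logged values, not a documented setting.
    """
    value = start
    for _ in range(clicks):
        value *= factor
    return value


linear = after_clicks(0.5, 20)   # assumed starting linear speed of 0.5
angular = after_clicks(1.0, 20)  # assumed starting turn rate of 1.0

print(f"linear:  {linear:.11f}")
print(f"angular: {angular:.11f}")
```

If the guess is right, the first number is the linear speed and the second is the turn rate, which also explains why one is exactly double the other.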

Tuesday, July 2, 2013 1:03 AM

Apparently my browser crashed when trying to process the videos that I included in this post. So, I am now going to redo it.

Just to get this news out of the way, on Thursday I broke my foot. However, before that happened, I was given the opportunity to listen to and ask questions of Dr. Taylor. She conducted research at the MDRS in Utah and also took part in the experiment that I am simulating. I asked a couple of questions (Exactly what type of camera did you use? How difficult was it to use the controller that they provided you with while wearing the simulation space gloves? Did you prepare a terrain for the experiment or did you just use the natural terrain? etc.), which she answered to the best of her ability. Her answers are extremely useful in accurately recreating the environment that their experiment took place in.

I also encountered two errors in the past couple of days. The first error actually had less to do with Stage and more to do with vi. For some reason, the directional keys kept outputting letters instead of moving the cursor. Along with this, the backspace key was not working at all. I was able to temporarily solve these problems with ":set nocompatible" for the directional keys and ":set backspace=indent,eol,start" for backspace. The problem is that these commands had to be entered every time I opened up a vi window. So, as to not waste time, for now I will be using vim instead of vi.

The second error had to do with me trying to start my guest computer from VirtualBox. For some reason, everything on the guest had been wiped. It was as if the computer had just installed Ubuntu and nothing else. I had taken a snapshot beforehand, so I had the ability to restore it. However, I was curious about everything that was needed to fully install stage and whatever else is needed to build, manage, and execute some of the tasks on a computer. Here are the steps that I documented:

1. sudo apt-get install gthumb

2. sudo apt-get install python-webpy

3. sudo apt-get install python-setuptools

4. https://code.google.com/p/remy-robotics-tools/source/browse/trunk/ros_gazebo/install.sh

5. https://sites.google.com/site/sekouremycu/courses/cpsc-481-681/ros-web-service

6. svn co https://brown-ros-pkg.googlecode.com/svn/trunk/distribution/brown_remotelab controls

For the steps that are only links, go to the link and follow the instructions there.

Finally, here are four videos of me testing the Path Task with the On-board camera POV and all of the constraints. Or they would be here, if they weren't too big to upload to Blogger. So I will let them finish being sent to YouTube, and I will upload the videos separately.

Wednesday, June 26, 2013 9:16 PM

So today was interesting. It started off interesting mainly because I went to work in the morning without remembering that we have a meeting every Wednesday. So I was sitting in the Lab / Computer Lab just typing away when Troy saw me and said that we were meeting.

So besides making more environments that had the corrected obstacle collision, I also took a couple of videos since I got VLC's desktop recording working. Please email me to request them.

I also uploaded all of my files that needed to be transferred into Google Drive. This is just temporary until I figure out why I cannot upload my files/folders to the Google Sites that I created. I'm sure that I'm just missing a step.

I also spent today thinking up a couple of questions that I thought I should ask the researchers that had worked at the MDRS. Things like the exact size of their working area, how did the visors affect vision, etc.

Professor Remy also told us today that instead of a meeting next Wednesday, we will be presenting our progress up to that point to two persons, with at least one of them tuning in remotely. We need to record the presentation. He also said that by Wednesday, he wants us to take our answers to what we had thought the project was about (that we answered before we even started the project) and revise them with what we now know.

Also a fun thing that I noticed today, dragging and pulling things in the stage environment will make changes in the code if you choose to save them. THAT is very useful.

Tuesday, June 25, 2013 5:20 AM

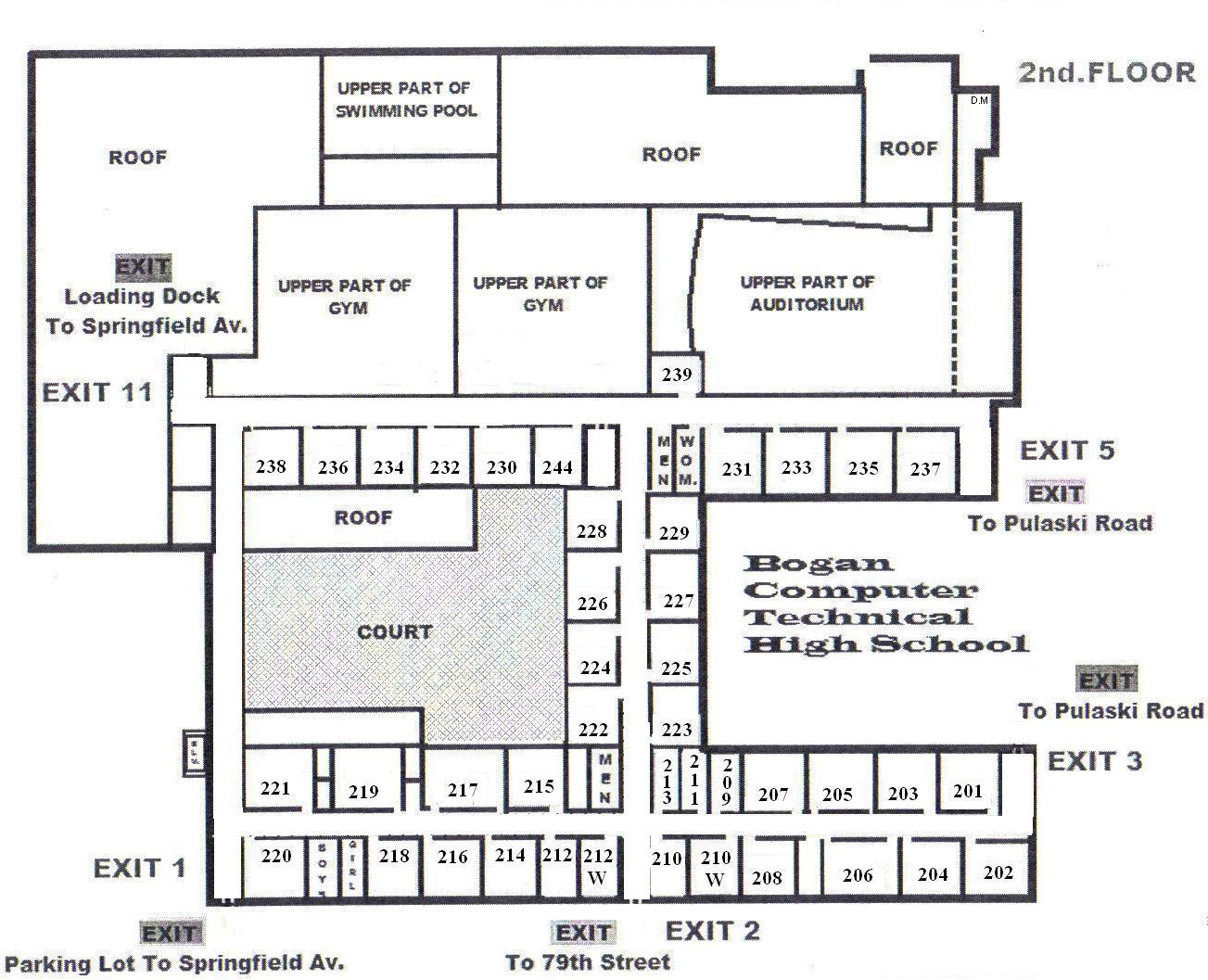

Ok so the post today is actually for yesterday. It is coming today because I decided to work through the night, and I wanted to make sure that everything I did was included in the post. So first things first... I found and cleaned up a floor plan of a school that I found online, as Professor Remy asked me to do. Here it is before and after the clean up:

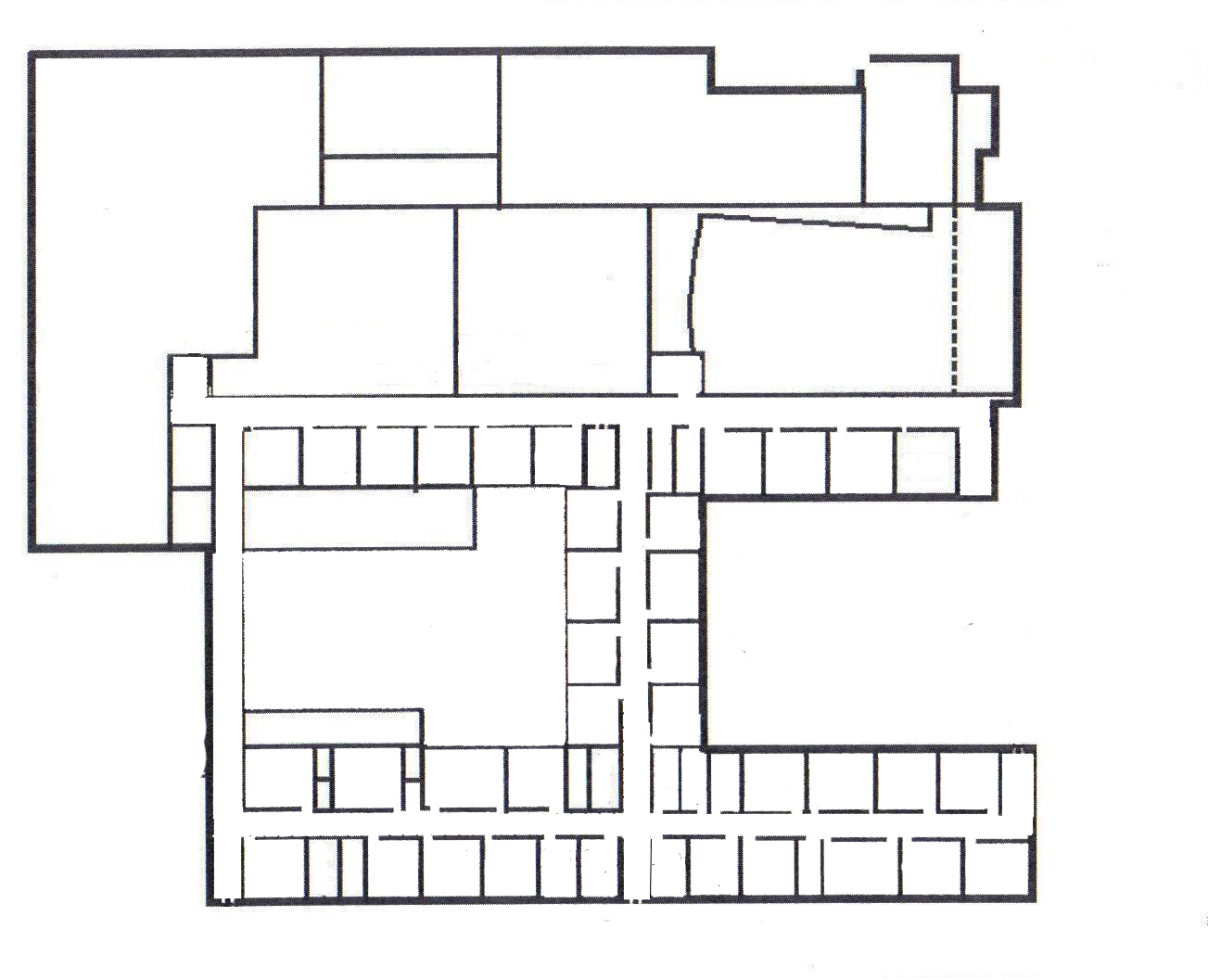

So I navigated the robot a small amount through that map plan with no problems. I did, however, encounter some problems with pygame. I'm not sure why (I don't believe I changed anything), but it is not responding to my button clicks anymore. I will look into that today. I did, however, take a couple of screenshots before pygame freaked out. So in these, pygame is fully working and I'm able to control the robot as someone doing the experiment would:

I also now have the questions that I believe I want to ask. I'm still narrowing down a couple, and I know that I will still need help making the questions sound "correct," but I will continue fine tuning that today as well. I have re-installed Ubuntu on my laptop, and I will go through the process of putting stage on it this morning. After that, I want to try to transfer everything from the Desktop to the Laptop. I will still keep the files on the desktop, but I just wanna see the difference in how fast stage runs on the different machines.

So Tuesday so far:

-Continue fixing up the Pre-experiment questions

-Put Stage on laptop

-Fix Pygame

-Make a couple more world files for the On-board and Third Person POVs

-Some other things that I don't quite remember at this moment, but are in my notes somewhere.

Wednesday, June 19, 2013 10:21 AM

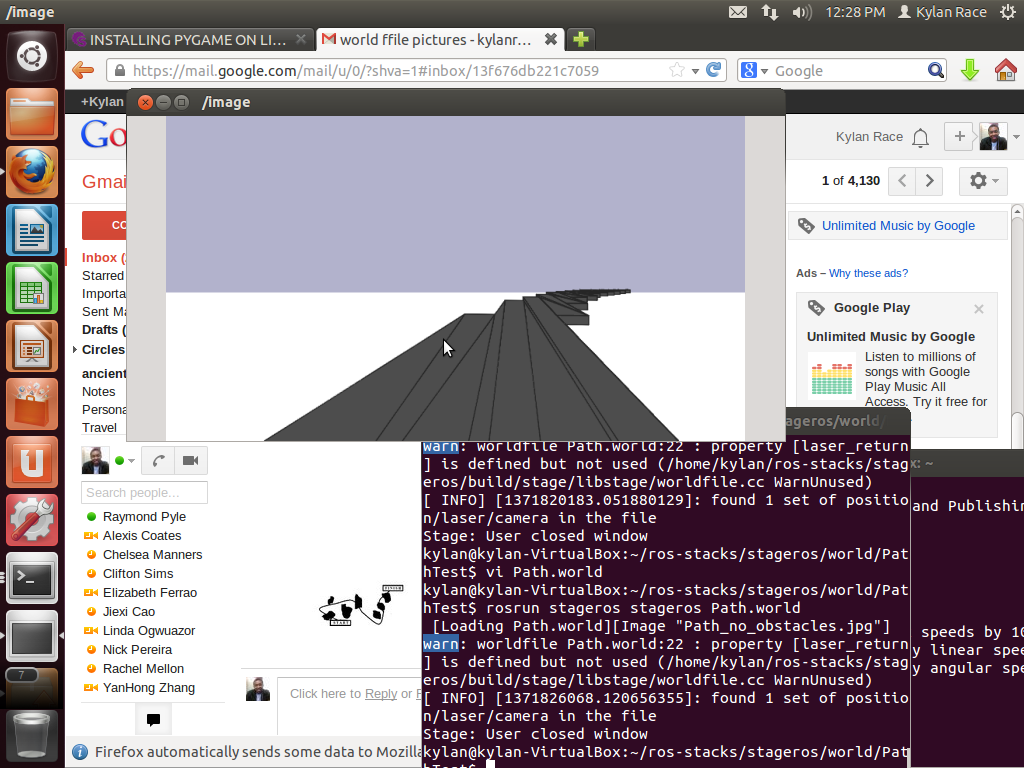

OK so this is the post that I said was coming last night. This is mainly because I worked all last night and I also had a presentation this morning. I did not want to post what I had learned if I was going to present it the next day. So, here is my update. I am now fully into stage. I have made a couple of world files as well as a couple of .jpg files to be used in the .world files. I will end up needing around 18 of these .jpg files. This is because all of the Tasks must be completed under each POV, and all of the constraints must be considered under each task. Also, I got the ros method of control working, and I will also finish the non-ros method of robot navigation today. This, as well as the environments, should be finished by tomorrow. More will be posted this evening.

Tuesday, June 18, 2013 5:13 PM

Today's blog post is mainly about what I did today and yesterday. Yesterday, through the advice of Professor Remy, I began to move all of my files from my laptop to my office desktop. This will be done until I can figure out / re-install Ubuntu to fix the memory problem. I will post the rest tomorrow morning as I will be working through tonight.

Friday, June 14, 2013 8:46 PM

Today's post is short because it can be described in a couple of tasks that I did today. I worked on more environments, made the control scheme operate faster, and deleted unneeded files along the way to free up space. That is what happened throughout the day. I will be working over the weekend to catch up on the time that I lost on the day that the Ubuntu Guest's memory "disappeared."

Also today, we were invited to view a group of middle schoolers' games. They were quite creative.

Thursday, June 13, 2013 7:40 PM

Today I planned on making more of the 12 environments that would be needed for the experiment. Like I mentioned in the last post, I wanted to be sure that all the constraint environments for each task were completed, so as to see how different environmental obstacles could prohibit certain methods of completing each task. I completed some of them and then decided to switch gears for a bit (as working on 12 environments in a row can become monotonous) and try to fix the control scheme problem that occurred yesterday. At that moment Professor Remy called and explained to me that it would be easier (and preferable) to simply have the user input the robot's controls through a Python script running parallel to the stage environment.

But at this point I encountered a problem that I am still trying to resolve. I've tried multiple solutions and asked different people, but none of them can seem to find why 5 GB of memory are missing from my Ubuntu Guest. This is causing me to not be able to save anything, which means no environments / scripts can be completed. I will continue to work on that problem through tonight as it is really bothering me.

Wednesday, June 12, 2013 7:22 PM

Today a couple of things happened. The first was meeting with Professor Remy and Troy Hill to present my findings up until that point. After I had finished my presentation, Professor Remy cleared up my understanding of what was controlling the robots. So along with creating the environments (I will need one for each task and then for each constraint for each task..), I started aiming towards using the keyboard to control the robot. I did, however, encounter a problem with one of the packages not installing correctly. I will fix that up tomorrow.

I also updated this blog with a couple more pages to make it more in line with what the DREU requires of a website. The last thing I did today was finish making all 4 stages for the 1st task. The 4 constraints are included in the files.

First thing tomorrow is looking up how exactly to start up a .launch file the correct way.

Monday, June 10, 2013 7:46 PM

-There will be a presentation on Wednesday to the public. Troy and I will be presenting our findings up to that point. I can use whatever medium I want to convey what I have learned. This needs to be organized and planned out tonight and updated through Wednesday.

-First thing done today…installing gThumb as a way to open up the .pgm file that was used in the creation of the sample stage world file.

-Then I moved the .pgm file over to my host machine and downloaded Gimp so that I could attempt to make my own .pgm file. I was going to use Photoshop, however, the sample .pgm file indicated that it had been created using Gimp. So that is the program that I will use as well.

-I made a couple of world files and at this time (12:49 p.m.) I was going through each line of another world file’s code to see what each function did. A lot of them are actually pretty self-explanatory.

-Finally had the realization that the image (.pgm) file that is used during the creation and running of .world files can be as simple as a line drawing in Gimp. At first, I thought that the image file had to be just like the sample image file, which was grey-scale and had what seemed to be random white lines in it. I went ahead and outlined what every line in the sample world file means, so that I know exactly what each function does.

In line with the calendar, more of tomorrow's time will go to creating the world files needed for the project.

Friday, June 7, 2013 11:08 PM

Finally found my laptop charger so I can upload this post. It will still be short since I spent today mainly doing one thing.

Today I spent the majority of the day teaching myself how to decipher and write world files. I wanted the world files that I use to be better suited for the project's experiments than the one that we currently have. I will be using Python for the world files, and I have already made a couple of functioning world files. I really spent the whole day figuring out and coming up with these world files.

Today I also picked which helmet/glove type would be best for the EVA POV. I ended up picking the helmet with the largest field of vision. That way, the EVA subject's strain can be reduced.

Thursday, June 6, 2013

So there were a couple of new things that happened today. First of all, I got to meet my new roommate in person. He came around 1 a.m. this morning. Also, I got stage working correctly on the desktop computer in my office space as well. This will greatly aid me during the project, as I can run different experiments at the same time, reducing the time it will take. Here is the time log that I took during the installation. It is separated by the time spent running each line of commands:

1: N/A

2: 1 second

3: 1 second

4: 3 seconds

5: 11 seconds

6: 8 minutes 23 seconds

7: 1 minute 39 seconds

8: 59 seconds

9: 16 seconds

10: 1 second

11: N/A

12: 1 second

13: 5 seconds

14: N/A

15: 1 second

16: 1 second

17: 1 second

18: N/A

19: 3 seconds

20: N/A

21: N/A

22: N/A

23: 1 second

24: 1 second

25: 1 second

26: 1 second

27: 1 second

28 - 45: N/A

46: 1 second

47: 8 seconds

48: N/A

49: 1 second

50: 2 seconds

51: 1 second

52: 3 minutes 2 seconds

53: N/A

54: 1 second

55: N/A

56 - 61 (Gazebo + Ros | Gazebo isn't needed so Ros install alone is fine):

56: N/A

57: 2 minutes 2 seconds

58: 1 minute 53 seconds

59: 1 second

60: 28 seconds

61: Does not finish (2 hours in)

62: N/A

63: N/A

64: 1 second

65: 3 seconds

66: 1 second

67: 32 Seconds

Total Stage Installation Time (w/o Gazebo): 15 minutes 44 seconds
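As a check on that total, the timed steps can be added up with a short script. This is a minimal Python sketch: it copies the timed entries from the log above, leaves out the "N/A" steps and the optional Gazebo + Ros section (56 - 61), and parses the durations as written. The function names are made up for illustration.

```python
import re

# Timed steps copied from the log above. "N/A" steps and the optional
# Gazebo + Ros section (56 - 61) are left out. Step numbers are kept only
# for reference; the entry listed between 63 and 65 is taken to be step 64.
LOG = """
2: 1 second
3: 1 second
4: 3 seconds
5: 11 seconds
6: 8 minutes 23 seconds
7: 1 minute 39 seconds
8: 59 seconds
9: 16 seconds
10: 1 second
12: 1 second
13: 5 seconds
15: 1 second
16: 1 second
17: 1 second
19: 3 seconds
23: 1 second
24: 1 second
25: 1 second
26: 1 second
27: 1 second
46: 1 second
47: 8 seconds
49: 1 second
50: 2 seconds
51: 1 second
52: 3 minutes 2 seconds
54: 1 second
64: 1 second
65: 3 seconds
66: 1 second
67: 32 Seconds
"""


def duration_seconds(text):
    """Turn '8 minutes 23 seconds' or '1 second' into a number of seconds."""
    minutes = re.search(r"(\d+)\s*minute", text, re.IGNORECASE)
    seconds = re.search(r"(\d+)\s*second", text, re.IGNORECASE)
    total = 0
    if minutes:
        total += int(minutes.group(1)) * 60
    if seconds:
        total += int(seconds.group(1))
    return total


def total_seconds(log):
    """Sum the duration on every 'step: duration' line of the log."""
    total = 0
    for line in log.strip().splitlines():
        _step, duration = line.split(":", 1)
        total += duration_seconds(duration)
    return total


minutes, seconds = divmod(total_seconds(LOG), 60)
print(f"{minutes} minutes {seconds} seconds")
```

The sum comes to 944 seconds, which is the 15 minutes 44 seconds reported above.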

There were no errors throughout the entire installation except in the section that would allow the installation of Gazebo + Ros. However, that section is not needed for the purposes of this project, since Gazebo will not be used.

Today I also spent a good amount of time studying the "world" files. I went to a couple of different technology forums and started comparing the difference in the stage files and how those differences affected the 2d environment. I also "fiddled" with some of them to note how my changes affected the worlds. I will be continuing to study them tonight and, in line with the calendar, will be using python to begin the process of making worlds to match up to the MDRS environment.

P.S. I will also be going on another trek to buy groceries tomorrow. This time I will not get lost.

Wednesday, June 5, 2013 11:24 AM

Today I focused on thinking about the experiments and what Stage needs to accomplish to carry them out. I also had a Google calendar made that documents my timeline, but then I realized that my timeline is off due to my end date being wrong, so I will quickly reconfigure that. Also, I have some sketches of astronaut suits to upload.

What different types of setups and parameters need to be simulated for the experiment?

Extravehicular Activity Direct Operation (teleoperated control of a robot by an EVA astronaut in immediate proximity to the robot with direct view) - To simulate this particular POV, I will need two mobile robots. One will represent the robot doing the work while the other will represent the human (who would be wearing the space suit) controlling the robot. The simulated human body will follow the simulated robot while it is completing its tasks.

Support Vehicle Direct Observation (teleoperated control of a robot in a shirt sleeve environment from a support vehicle in immediate proximity to the robot with direct view) - This simulation will also utilize two mobile simulated robots. One robot will yet again be the robot doing work. However, this time the other robot will not be exactly following the lead robot. It will still be in proximity of the lead robot, but it will be able to move around the lead robot as a vehicle following another vehicle would be able to do. This means that the simulated robot will need to be able to circle and navigate around the lead robot as it completes the experiment.

Third Person Camera View (teleoperated control of a robot in a shirt sleeve environment from a remote workstation using a camera mounted onboard the robot) - This POV is a bit different from the previous ones. There will still be a lead robot doing work; however, the vantage point is that of a subject monitoring the operation from a stationary workstation near the experiment. I have to do some more research on the capabilities of Stage, but I believe that it has an option that can function as a stationary camera. A stationary camera placed in proximity to the simulated robot could simulate the third person camera view.

Onboard Camera View (teleoperated control of a robot in a shirt sleeve environment from a remote workstation using a camera mounted onboard the robot) - This will be completed using only one simulated robot. It will be using Stage's function to see the objects around it and to react according to the information that it sees. In this case, however, I will need to see whether the onboard camera has a fixed line of view or a rotating one.
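The "follow the lead robot" behavior used in the first two setups can be sketched in plain Python before touching Stage. This is only an illustration under my own assumptions: follow_step, the 1.0 standoff distance, and the 0.5 step size are hypothetical names and values, and nothing here calls Stage or Player.

```python
import math

def follow_step(follower, leader, standoff=1.0, max_step=0.5):
    """Move the follower one step toward the leader, stopping at the standoff distance.

    follower and leader are (x, y) tuples; returns the follower's new (x, y).
    """
    dx = leader[0] - follower[0]
    dy = leader[1] - follower[1]
    distance = math.hypot(dx, dy)
    # Already close enough: stay put so the follower never runs into the leader.
    if distance <= standoff:
        return follower
    # Travel at most max_step, and never past the standoff ring around the leader.
    step = min(max_step, distance - standoff)
    return (follower[0] + step * dx / distance,
            follower[1] + step * dy / distance)

# Hypothetical positions: the leader sits still at (3, 0), the follower starts at the origin.
position = (0.0, 0.0)
for _ in range(10):
    position = follow_step(position, (3.0, 0.0))
print(position)
```

For the support vehicle setup the same idea would apply with a larger standoff, plus some way to circle the lead robot, which is not shown here.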

Monday, June 3, 2013 6:05 PM

Today's post will encompass Friday, May 31 and today. This is mainly because I thought I had hit the publish button on Friday's update, but apparently I did not... So it is being moved to today.

Friday, May 31, 2013:

Today was a very hectic day as I thought it would be. In the morning we had a mandatory meeting for all of the DREU and iAAMCS students. It mainly focused on "dress etiquette" and some of the events that will be happening during the summer.

After the meeting, Professor Remy gave me a link that had the documented Stage installation instructions. I was to follow the instructions, document any errors found during installation, and time the overall process of installing Stage. Along with going through the installation, I was still troubleshooting why my Ubuntu was not working on the Desktop. By the time that the evening cookout party came around, I thought that I had finished the installation (I would find out tomorrow that I did not).

Today:

It turned out that Stage had not installed correctly, so today was mainly focused on getting it completely working. As for the errors in the documentation, there were a couple of lines missing that made it seem that line 21 was incorrect. I also encountered errors during my installation of "Gazebo"; however, Professor Remy informed me that Gazebo did not need to be installed. With Professor Remy's help and some edits to the installation documentation, I had Stage successfully installed. Soon after, I set up a server using "Ros" on the guest OS. Also, through about an hour and a half of updating (and Professor Remy's aid), I finally got Ubuntu working on the OS. Tomorrow I will install Stage using the updated documentation on the Desktop. That way, I can see if the documentation is completely correct now. I also plan on finishing connecting to my guest client from my host machine. I started today, but I'm having a problem ssh'ing into the guest.

Interesting note: I played Ultimate Frisbee with some of the REU participants. It's a very interesting and fun sport. Tomorrow is karaoke.

Thursday, May 30, 2013 7:50 PM

Today I actually got less done than I wanted to. I had to complete the CITI training and I took longer to finish it than I had expected to. I also got lost on the bus route trying to buy a "dish" for the cookout Friday evening, but that is another story. However, today I received administrator privileges on the computer at my station, so now I can install things (which means I can install Stage!). This also means that I begin the sub-phase of the project in which I document, second by second, the installation of Stage using instructions that will be given to me by Professor Remy (I believe). That way, I can map the process of installing Stage and find where there may be anything that isn't updated in its documentation or any installation problems. I will then send these findings to Everett. I also got my laptop in the mail, which I have been missing ever so much. Once Stage is successfully installed on the desktop at my station I will try to use the updated Stage documentation to install it on my laptop (just to make sure the documentation is correct).

I also answered a few questions today. For one, a joystick is definitely optimal for the experiment. As described in yesterday's post, I was worried about the effectiveness of the joystick. This was mainly due to my presumption that a joystick, although having the necessary build for easy gloved control, lacked the number of "buttons" to perform the tasks needed. However, as informed by Professor Remy (thank you again), I now see that there are multitudes of joysticks that not only are easy to maneuver, but also have plenty of widely spaced buttons that can perform all the necessary tasks. I also know now that I will most likely be using the Python programming language to implement Stage.

I put in some thought on the question of "How will I receive information from the persons controlling the robot?" But as of yet, I haven't come to a conclusion on it. I believe this leans into how the data is measured as well.

Tomorrow is going to be a fast paced day because there is a meeting in the morning, I still have to find a dish (I was on the wrong bus), and then there's a cookout in the evening. In between those points I plan to be finishing coming up with specific questions to ask the experiment's participants, sharing a calendar with my summer goals, and answering a couple of data communication related questions.

Wednesday, May 29, 2013

Today I looked into a couple of questions. The first of which was: What is the best way to measure how effective each point of view is?

The robots are being controlled by a human. So the best way to measure how effective each point of view is, is to ask each human participating in the experiment which, in their opinion, was the best POV to work with. Also, the measure of "POV Effectiveness" should include the speed at which they complete their task and the efficiency level (how close they are to the intended task result) at which they complete their task. To sum up the overarching question, "What is each subject's point-of-view preference and what is their efficiency based on their preference?" The subjects should be surveyed in some way before the actual experimentation begins. This could be a recording of some questions answered by them or a questionnaire prior to the experiment. Although many variables can skew the results of this experiment, we cannot possibly ask each subject hundreds of questions before the experiment even commences. I believe that there are three main outlying variables that need to be looked out for using a preemptive survey of some sort:

1. POV Preference (Different POVs work better for different people. The fact that one subject may perform better with a Third Person Camera View simply because he/she is more comfortable with it must be taken into consideration when calculating the data.)

2. Gamepad vs Joystick (As with the "POV Preference" variable, people are different. Some subjects may be better and more accustomed to using a gamepad while others may prefer to use a joystick. The controller and how the subject uses it to operate is an extremely important part of the experiment. Without a controller nothing will move in the first place.)

3. Gaming Experience (Under the assumption that the subjects in the experiment will be using either a joystick or a gamepad to control the robot, the subject's experience with that form of control scheme will need to be considered. This needs to be considered even if a universal controller (assume gamepad for this example) is picked. If there are two subjects that are more comfortable using a gamepad vs a joystick, but subject #1 has been using a gamepad for 20 years vs subject #2's 5 years, the data will yet again be skewed due to subject #1's steep skill curve. It has to be kept in mind that the variable of "Gaming Experience" can be measured in multiple ways (years spent gaming, number of games finished on a certain difficulty, competitions participated in...to list a few).)
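To make the two measurable parts of "POV Effectiveness" (speed and efficiency) concrete, here is a small Python sketch of how trial results might be recorded and averaged per POV. The Trial fields, the 0 to 1 efficiency score, and the sample numbers are all made up for illustration; they are not data from the experiment.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean

@dataclass
class Trial:
    subject: str
    pov: str
    seconds: float      # time taken to complete the task
    efficiency: float   # 0.0 to 1.0, how close the result was to the intended one

def summarize(trials):
    """Return {pov: (mean seconds, mean efficiency)} over all trials."""
    by_pov = defaultdict(list)
    for trial in trials:
        by_pov[trial.pov].append(trial)
    return {
        pov: (mean(t.seconds for t in group), mean(t.efficiency for t in group))
        for pov, group in by_pov.items()
    }

# Placeholder values only, not real results.
trials = [
    Trial("S1", "Onboard Camera View", 120.0, 0.75),
    Trial("S2", "Onboard Camera View", 100.0, 0.25),
    Trial("S1", "Third Person Camera View", 90.0, 0.5),
]
summary = summarize(trials)
for pov, (avg_seconds, avg_efficiency) in summary.items():
    print(pov, avg_seconds, avg_efficiency)
```

The survey answers (POV preference, controller preference, gaming experience) could be added as extra fields on the same record so the averages can later be split by those variables.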

Controller Problem:

-Even with the variables mentioned previously (gaming experience and Gamepad vs Joystick), there is still a problem with a controller. The "Extravehicular Activity Direct Observation" point of view would require a subject to wear a mock space suit, gloves included. Although it would probably be much more efficient to use a gamepad (two 8 way directional joysticks vs one and more buttons to assign motions to), the gloves used by astronauts currently would not allow it. These gloves:

would be the most accurate representation of the gloves used by astronauts and the crew at the NSBE Mars Desert Research Station in Utah. They are made of multiple layers of Nylon and Nomex. It would be difficult enough for the subject to simply grip and press precise buttons. However, much more mobility than what the gloved hands offer is needed to utilize the 10 buttons, 2 joysticks, and directional pad on the average gamepad efficiently. With this in mind, it would seem that a joystick, although with fewer controls, would be the necessary controller to use.

What's First?:

-Another question that I addressed today was, "What would be the first step that I would take if I were to implement the experiment in question right now?" I decided that the first step would be selecting the subjects that would be participating in the experiment. They would have to be selected as randomly as possible so as to avoid potential result skewing. Then I would use the questions that I would have previously created to remove any major skewing variables from the experiment and to set up blocks that separate the different types of subjects. From there, the actual experiment’s implementation would commence, making sure to use each point of view for each type of subject.
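The blocking step described above could look something like the following Python sketch. It assumes the survey has already reduced each subject to a single block label; plan_experiment, the subject names, and the block labels are hypothetical, and shuffling the POV order per subject is my own addition to keep any one POV from always coming first.

```python
import random
from collections import defaultdict

POVS = [
    "Extravehicular Activity Direct Operation",
    "Support Vehicle Direct Observation",
    "Third Person Camera View",
    "Onboard Camera View",
]

def plan_experiment(survey, seed=None):
    """Group subjects into blocks and give each subject every POV in a shuffled order.

    survey maps a subject name to a block label taken from the pre-experiment questions.
    Returns {block: {subject: [pov, ...]}}.
    """
    rng = random.Random(seed)
    plan = defaultdict(dict)
    for subject, block in survey.items():
        order = POVS[:]
        rng.shuffle(order)  # randomize the order so no POV always comes first
        plan[block][subject] = order
    return dict(plan)

# Hypothetical survey answers (placeholder subjects and block labels).
survey = {
    "S1": "gamepad, experienced",
    "S2": "joystick, novice",
    "S3": "gamepad, experienced",
}
plan = plan_experiment(survey, seed=0)
for block, subjects in plan.items():
    print(block, subjects)
```

Picking the subjects themselves at random from a larger pool is not shown; random.sample would be one way to do that.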

Picking up objects:

-Another one of my goals for today was to find evidence of people using Stage to code a robot that can pick up objects (this is one of the tasks that the robot has to be able to do). There were a couple of examples that I found on YouTube and on SourceForge.

Current Hypothesis:

-Today I also put thought into my hypothesis for the experiment. I believe that using a joystick controller with the "Support Vehicle Direct Observation" point of view would be the best (best as measured by easiest perspective to work with, fastest task completion, and most efficient completion of task). This is because a support vehicle would be able to give the subject a view of any piece of the experiment they want to see in real-time. There would be hardly any blind spots and it lacks the difficulty of controlling the robot using space suit gloves.

Question to think about:

-Is there no single POV/method that is best on average? Does it completely depend on the subject?

Wednesday, May 29, 2013 9:31 AM

I'm going to have two posts today, with the first one focusing on what I did yesterday, since I didn't have one yesterday due to not being able to set up the blog (apparently it was as simple as the address being taken).

I spent most of yesterday (Tuesday, May 28, 2013) researching what exactly the "Stage 2.5D Simulator" was. From what I gathered, Stage is a plugin driver for the "Player Project" which is used to simulate a 2D environment. In this environment, hundreds of robots can be simulated. The robots in the environment respond to code inputted by the user.

Along with reading documentation on the implementation of "Stage," I also utilized the multimedia site "YouTube" as a method of finding past usages of Stage. Some of the videos that I watched are listed as follows (to list a few):

-Line Follower in Player/Stage

-Multi-Resolution Navigation: Apartment Example (Full Version):

-Robot Follows Another Red Robot in Stage 2D Simulator:

-Follow Human Simulation with Player/Stage:

-Player/Stage Simulation:

I also have started following a couple of different blogs dedicated to the installation of and the use of the Player/Stage software. It seems that a lot can be done with Stage, but there has not been very extensive use of all of its multiple features (at least not documented use).

Since I do not have a desktop at my station yet and my laptop has not been shipped to me yet (due to arrive Thursday, May 30th at 5:00 p.m.), I cannot actually install and use Stage myself as of yet. However, I foresee that this week will be mainly spent familiarizing myself as much as I can with Stage and relating what I find on Stage to the experiment in question.