Reports:

The Digital Anatomist project has various end-user applications, such as the Foundational Model Browser, the Image Manager for Anatomy, and query interfaces. All of these applications involve a great deal of user interaction with images.

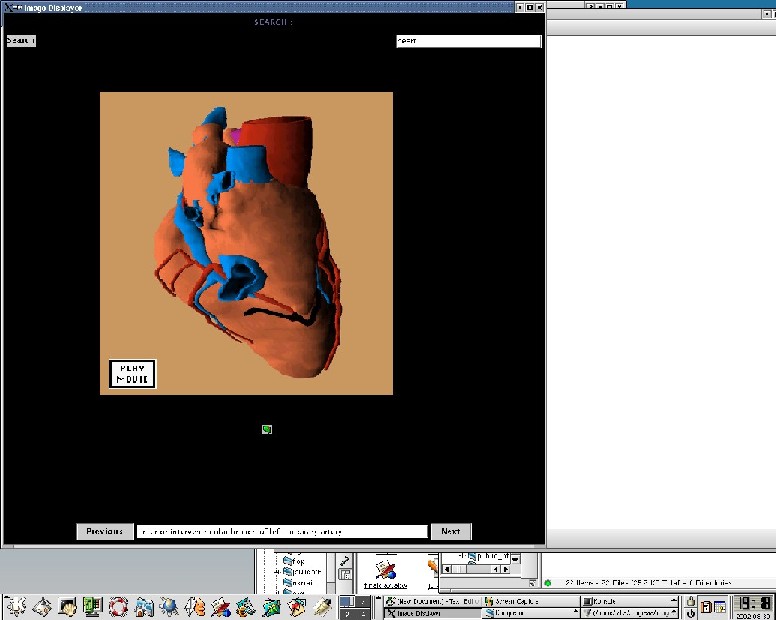

The Image Displayer is a Java interface to the image repository. It retrieves images from the image_repo database and displays them, using content-based image retrieval (CBIR).

The idea behind this program is to provide an intelligent interface that retrieves images based on exactly what the user is looking for.

The interface is currently basic, and its content-based retrieval relies on simple techniques.

A few notes about the program:

It is a simple Java interface that retrieves images from the database 'image_repo', orders them using content-based image retrieval techniques, and displays them. Images can be retrieved by their annotations, using an exact match, or by their captions (a more general query).

When images are retrieved by caption, the program also displays the name of the annotation associated with each image.

To retrieve images by caption, the presence of a single matching non-stop word in the caption is enough for that image to be returned as a result of the query. All words in the captions stored in the database are stemmed, the words in the query are stemmed as well, and the program then looks for a match.
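The caption-matching rule can be sketched as follows. This is a minimal illustration, not the program's actual code: the class `CaptionMatcher`, its tiny stop-word list, and its crude suffix-stripping `stem` method are all hypothetical stand-ins (the stemmer the program really uses is not named here; a Porter-style stemmer would be a typical choice).

```java
import java.util.HashSet;
import java.util.Locale;
import java.util.Set;

// Hypothetical sketch of caption matching: stop words are dropped, the remaining
// words are stemmed, and a caption matches if it shares at least one stem with the query.
class CaptionMatcher {
    // Hypothetical, deliberately tiny stop-word list; a real list would be much longer.
    private static final Set<String> STOP_WORDS = Set.of(
            "a", "an", "and", "the", "of", "in", "on", "with", "to", "is", "showing");

    // Crude suffix stripper used only as a stand-in for a real stemmer.
    static String stem(String word) {
        String[] suffixes = {"ing", "ed", "s"};
        for (String suffix : suffixes) {
            if (word.length() > suffix.length() + 2 && word.endsWith(suffix)) {
                return word.substring(0, word.length() - suffix.length());
            }
        }
        return word;
    }

    // Lower-cases the text, splits on non-letters, removes stop words and stems the rest.
    static Set<String> stems(String text) {
        Set<String> result = new HashSet<>();
        for (String token : text.toLowerCase(Locale.ROOT).split("[^a-z]+")) {
            if (!token.isEmpty() && !STOP_WORDS.contains(token)) {
                result.add(stem(token));
            }
        }
        return result;
    }

    // A single shared non-stop-word stem is enough for the caption to match.
    static boolean matches(String query, String caption) {
        Set<String> captionStems = stems(caption);
        for (String s : stems(query)) {
            if (captionStems.contains(s)) {
                return true;
            }
        }
        return false;
    }
}
```

For example, the query "heart valves" matches the caption "Mitral valve of the heart" because both reduce to the stems `heart` and `valve`, while a query made only of stop words matches nothing.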

After retrieval, the images are ordered by the area occupied by the requested region: the larger the area, the higher the image is ranked. The region's coordinates are retrieved from the database and parsed, and its vector representation (only the boundary coordinates of the region) is converted into a raster representation (every pixel lying inside the region is computed). A labeled image is also generated for each retrieved image: a 2D array the size of the screen resolution in which every pixel belonging to a region is set to that region's label (e.g. the left atrium might be labeled 1, and every pixel it occupies is set to 1).
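The vector-to-raster step, the labeled image, and the ranking by area can be sketched together. This is an illustrative sketch under stated assumptions, not the program's own method: it assumes each region boundary is a single polygon given as integer vertex arrays, and it rasterizes by testing every pixel centre with the even-odd (ray-casting) point-in-polygon rule. The names `RegionRaster`, `rasterize`, `rankByArea`, and the `Result` record are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: a region's boundary (vector form) is rasterized into the pixels
// it covers, those pixels are written into a labeled image, and retrieved images are
// ordered by the area (pixel count) of the requested region, largest first.
class RegionRaster {
    // Even-odd rule: a horizontal ray cast from (px, py) crosses the boundary an odd
    // number of times if and only if the point lies inside the polygon.
    static boolean inside(int[] xs, int[] ys, double px, double py) {
        boolean in = false;
        for (int i = 0, j = xs.length - 1; i < xs.length; j = i++) {
            // The edge (j, i) straddles the ray's y value, so ys[i] != ys[j] here.
            if ((ys[i] > py) != (ys[j] > py)) {
                double xCross = xs[j] + (py - ys[j]) * (xs[i] - xs[j]) / (double) (ys[i] - ys[j]);
                if (px < xCross) {
                    in = !in;
                }
            }
        }
        return in;
    }

    // Writes `label` into every pixel whose centre lies inside the polygon and
    // returns the number of pixels written, i.e. the region's area in pixels.
    static int rasterize(int[] xs, int[] ys, int label, int[][] labeled) {
        int area = 0;
        for (int y = 0; y < labeled.length; y++) {
            for (int x = 0; x < labeled[y].length; x++) {
                if (inside(xs, ys, x + 0.5, y + 0.5)) {
                    labeled[y][x] = label;
                    area++;
                }
            }
        }
        return area;
    }

    // Hypothetical record for one retrieved image and the area of its annotated region.
    record Result(String imageId, int area) {}

    // Returns a copy of the results ordered so the image with the largest region comes first.
    static List<Result> rankByArea(List<Result> results) {
        List<Result> sorted = new ArrayList<>(results);
        sorted.sort((a, b) -> Integer.compare(b.area(), a.area()));
        return sorted;
    }
}
```

Testing every pixel against every edge is simple but slow for screen-sized arrays; a scanline fill that computes edge crossings once per row would produce the same pixels more efficiently.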

Please refer to the documentation accompanying the code for details of how each step is implemented.

The database contains duplicate images, so a number of duplicates are retrieved for each query. These duplicates could be removed by comparing the labeled images and discarding any that are identical.
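Duplicate removal by comparing labeled images could look like the sketch below. It assumes, hypothetically, that two images count as duplicates only when their labeled arrays are identical pixel for pixel; the class `Deduplicator` and method `uniqueIndices` are illustrative names, not part of the existing program.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch of duplicate removal: two retrieved images are treated as
// duplicates when their labeled images are identical pixel for pixel.
class Deduplicator {
    // Keeps the first occurrence of each distinct labeled image and returns the
    // indices of the images to keep, in their original (ranked) order.
    static List<Integer> uniqueIndices(List<int[][]> labeledImages) {
        List<Integer> keep = new ArrayList<>();
        for (int i = 0; i < labeledImages.size(); i++) {
            boolean duplicate = false;
            for (int k : keep) {
                // deepEquals compares the nested int arrays element by element.
                if (Arrays.deepEquals(labeledImages.get(i), labeledImages.get(k))) {
                    duplicate = true;
                    break;
                }
            }
            if (!duplicate) {
                keep.add(i);
            }
        }
        return keep;
    }
}
```

Because the input is already ranked by area, keeping the first occurrence preserves the ordering. The pairwise comparison is quadratic in the number of results; grouping by `Arrays.deepHashCode` first would cut that down. Exact equality will not catch near-duplicates, such as the same region traced with slightly different coordinates.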

Retrieval by content could also be extended to display images that have two or more components annotated in them. This would be a fairly complex operation: the database only contains images with a single annotated region, so displaying an image with both "the stomach and the esophagus" highlighted would require image processing to combine two images.
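Only the simplest case of combining two single-region images is sketched here, assuming both labeled images show the same view and are already pixel-aligned and the same size. Aligning images from different sources, which is the genuinely hard image-processing step, is not shown. The class `RegionOverlay` and its `combine` method are hypothetical, and label 0 is assumed to mean "no region".

```java
// Hypothetical sketch: overlays two single-region labeled images of the same view.
// It assumes both arrays are already pixel-aligned and the same size.
class RegionOverlay {
    static int[][] combine(int[][] first, int[][] second) {
        if (first.length != second.length) {
            throw new IllegalArgumentException("labeled images must have the same size");
        }
        int[][] combined = new int[first.length][];
        for (int y = 0; y < first.length; y++) {
            if (first[y].length != second[y].length) {
                throw new IllegalArgumentException("labeled images must have the same size");
            }
            // Start from the first region's labels for this row.
            combined[y] = first[y].clone();
            for (int x = 0; x < second[y].length; x++) {
                // 0 is assumed to mean "no region"; where the regions overlap, the second wins.
                if (second[y][x] != 0) {
                    combined[y][x] = second[y][x];
                }
            }
        }
        return combined;
    }
}
```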

The user could also be given the flexibility to display images in colors of their choice; this would require a different way of storing the images from the current one.
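One possible storage scheme for user-chosen colors is to keep the labeled array and paint it at display time, as sketched below. This is an assumption about how the idea might be realized, not a description of the current program: `RegionColorizer`, `render`, and the label-to-color `palette` map are hypothetical, while `BufferedImage` and its `setRGB` method are standard Java AWT.

```java
import java.awt.image.BufferedImage;
import java.util.Map;

// Hypothetical sketch: if images were stored as labeled arrays rather than as
// pre-coloured pictures, each region could be painted in a user-chosen colour on display.
class RegionColorizer {
    // palette maps a region label to a 0xRRGGBB colour; labels missing from it get `background`.
    // Assumes a non-empty, rectangular labeled array.
    static BufferedImage render(int[][] labeled, Map<Integer, Integer> palette, int background) {
        if (labeled.length == 0 || labeled[0].length == 0) {
            throw new IllegalArgumentException("labeled image must not be empty");
        }
        int height = labeled.length;
        int width = labeled[0].length;
        BufferedImage image = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                // Look up the user's colour for this pixel's region label.
                int rgb = palette.getOrDefault(labeled[y][x], background);
                image.setRGB(x, y, rgb);
            }
        }
        return image;
    }
}
```

Changing a color then only means changing an entry in the palette and re-rendering, with no need to store a separate copy of the image for every color scheme.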

Various views of an image, such as the front or rear view, could also be generated.

Here's a snapshot of the interface: